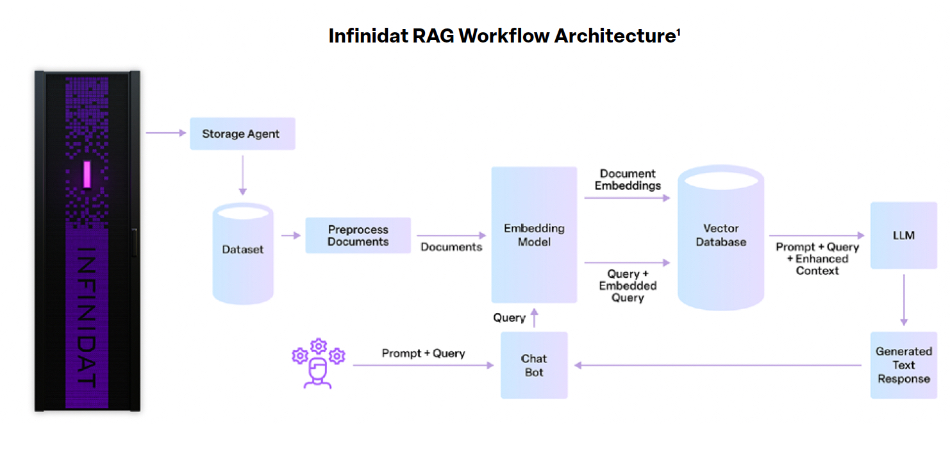

Storage array supplier Infinidat has devised a RAG workflow deployment architecture so its customers can run generative AI inferencing workloads on its InfiniBox on-premises and InfuzeOS public cloud environments.

The HDD-based InfiniBox and all-flash InfiniBox SSA are high-end, on-premises, enterprise storage arrays with in-memory caching. They use the InfuzeOS control software. InfuzeOS Cloud Edition runs in the AWS and Azure clouds to provide an InfiniBox environment there.

Generative AI (GenAI) uses large language models (LLMs) trained on general-purpose datasets, typically in massive GPU cluster farms. The trained LLMs, along with smaller models (SLMs), are then used to infer responses to user requests (inferencing) without needing massive GPU clusters for processing.

However, these models’ generalized training is not good enough to produce accurate responses for specific data environments, such as a business’s production, sales, or marketing situation, unless they use retrieval-augmented generation (RAG) to draw on an organization’s proprietary data. This data needs transforming into vectors, dense mathematical representations of pieces of data, which must be stored in vector databases and made available to LLMs and SLMs using RAG inside a customer’s own environment.
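To make the vectorization step concrete, here is a minimal Python sketch of turning text into fixed-length vectors and storing them. It is purely illustrative: `toy_embed` is a hypothetical word-hashing stand-in for a trained embedding model, the plain dictionary stands in for a vector database, and the document names and text are invented. None of it reflects Infinidat's actual implementation.

```python
import hashlib
import math


def toy_embed(text, dims=8):
    """Hypothetical stand-in for an embedding model.

    Hashes each word into one of `dims` buckets and L2-normalizes the counts.
    Real RAG pipelines use a trained model that produces dense vectors.
    """
    vec = [0.0] * dims
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vec[digest[0] % dims] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


# Invented example documents standing in for proprietary business data.
documents = {
    "sales-note": "Quarterly sales rose in the northern region",
    "support-faq": "Reset the array password from the admin console",
}

# A plain dict plays the role of the vector database in this sketch.
vector_store = {doc_id: toy_embed(text) for doc_id, text in documents.items()}

for doc_id, vec in vector_store.items():
    print(doc_id, [round(x, 2) for x in vec])
```

In a production setting the vectors would come from an embedding model and be written to one of the vector databases hosted on the storage array, but the shape of the workflow (text in, fixed-length vector out, vector stored under a document key) is the same.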

Infinidat has added a RAG workflow capability to its offering, both on-premises and in the cloud, so that Infinidat-stored datasets can be used in GenAI inferencing applications.

Infinidat CMO Eric Herzog stated: “Infinidat will play a critical role in RAG deployments, leveraging data on InfiniBox enterprise storage solutions, which are perfectly suited for retrieval-based AI workloads.

“Vector databases that are central to obtaining the information to increase the accuracy of GenAI models run extremely well in Infinidat’s storage environment. Our customers can deploy RAG on their existing storage infrastructure, taking advantage of the InfiniBox system’s high performance, industry-leading low latency, and unique Neural Cache technology, enabling delivery of rapid and highly accurate responses for GenAI workloads.”

Vector database capabilities are available in a number of products, such as Oracle, PostgreSQL, MongoDB, and DataStax Enterprise, all of which can run on Infinidat’s arrays.

Infinidat’s RAG workflow architecture runs on a Kubernetes cluster. This is the foundation for running the RAG pipeline, enabling high availability, scalability, and resource efficiency. Infinidat says that AWS Terraform significantly simplifies setting up a RAG system, reducing it to a single command that runs the entire automation. Meanwhile, having the same core code running on both InfiniBox on-premises and InfuzeOS Cloud Edition “makes replication a breeze. Within ten minutes, a fully functioning RAG system is ready to work with your data on InfuzeOS Cloud Edition.”

Infinidat says: “When a user poses a question (e.g. ChatGPT), their query is converted into an embedding that lives within the same space as the pre-existing embeddings in the vector database. With similarity search, vector databases quickly identify the nearest vectors to the query to respond. Again, the ultra-low latency of InfiniBox enables rapid responses for GenAI workloads.”
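The similarity search described in that quote can be sketched in a few lines of Python. This is an assumption-laden illustration, not Infinidat's or any vendor's code: the three-dimensional vectors and document names are invented, the query vector is assumed to have already been produced by an embedding model, and the brute-force cosine-similarity scan stands in for the indexed nearest-neighbor search that real vector databases typically use.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(query_vec, store, k=2):
    """Return the ids of the k stored vectors most similar to the query (brute-force scan)."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in store.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Invented embeddings; a real system would hold high-dimensional vectors in a vector database.
store = {
    "q3-sales-report": [0.9, 0.1, 0.0],
    "hr-handbook": [0.0, 0.2, 0.9],
    "pricing-sheet": [0.7, 0.6, 0.1],
}

# Assumed to be the embedding of the user's question, in the same vector space as the store.
query_vec = [1.0, 0.2, 0.0]

top = nearest(query_vec, store, k=2)
print(top)  # ['q3-sales-report', 'pricing-sheet']
```

The documents behind the returned ids are what a RAG pipeline would pass to the LLM as context alongside the user's question.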

Marc Staimer, president of Dragon Slayer Consulting, commented: “With RAG inferencing being part of almost every enterprise AI project, the opportunity for Infinidat to expand its impact in the enterprise market with its highly targeted RAG reference architecture is significant.”

NetApp has also added RAG facilities to its storage arrays, including integrating Nvidia’s NIM and NeMo Retriever microservices, which Infinidat is not doing. NetApp and Dell are also supporting AI training workloads.

If AI inferencing becomes a widely used application, all storage system software – for block, file, and object storage – will have to adapt to support such workloads.

Read more in a Bill Basinas-authored Infinidat blog, “Infinidat: A Perfect Fit for Retrieval-augmented Generation (RAG): Making AI Models More Accurate”. Basinas is Infinidat’s Senior Director for Product Marketing.