DDN says it shipped more AI storage in the first quarter of this year than in all of 2022, and it expects to boost revenues further.

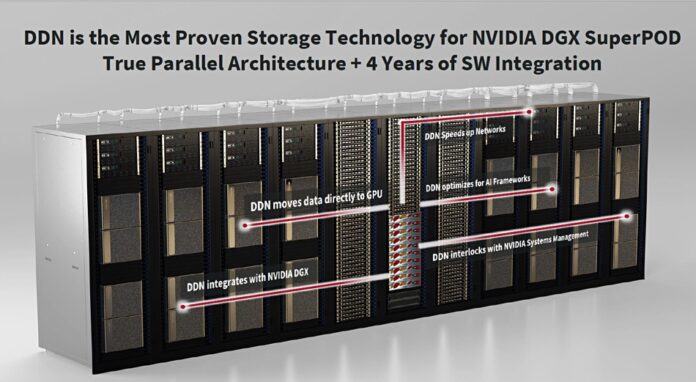

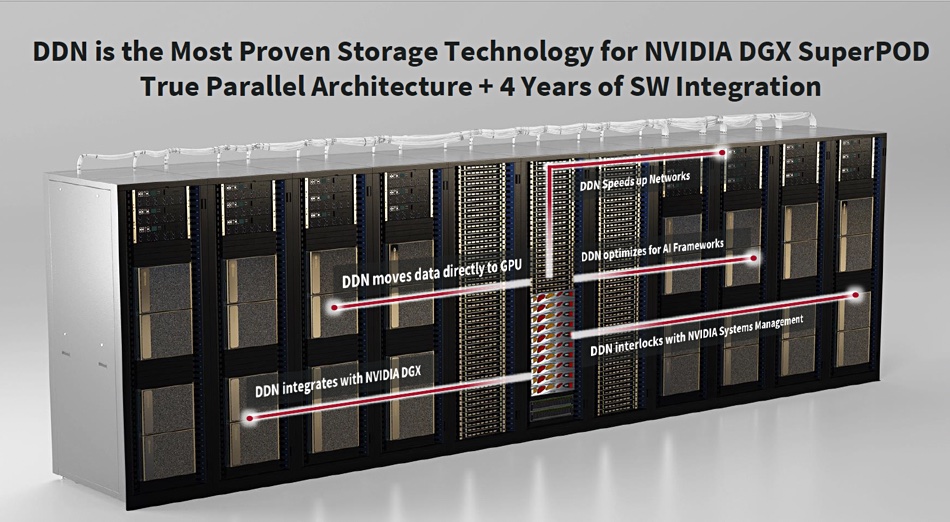

The company has a long history of supplying storage arrays for supercomputing and high-performance computing (HPC). AI applications need GPU processing, and keeping GPUs busy requires fast data delivery, which means enterprises have started using HPC-class storage. DDN has ridden this wave and has been working with Nvidia and its GPU-based systems, such as the DGX, for four years or more.

Kurt Kuckein, DDN’s VP for Marketing, told an IT Press Tour: “We’re behind over 5,000 DGX systems today.”

B&F previously learned that DDN has about 48 AI400X2 arrays supporting Nvidia's largest SuperPODs. DDN has reference architecture papers for Nvidia's POD and SuperPOD coming out in the next couple of weeks.

Generative AI is, Kuckein said, driving DDN’s expansion.

DDN has recently launched an all-flash EXAScaler array, the AI400X2, using SSDs with QLC (4 bits/cell) flash, and hopes its combination of flash speed and capacity (1.45PB in a 2RU chassis) will prove popular for generative AI use cases. The AI400X2 provides up to 8PB of TLC (3 bits/cell) and QLC NAND in 12RU, with a head chassis containing TLC drives and two QLC drive expansion chassis.

There is linear scaling to 900GBps reads from 24RU of storage – 12 x AI400X2 appliances with 90GBps/appliance. These 12 appliances also provide 780GBps writes. DDN says that, with large language model work, compute overlaps data transfer and faster transfer means compute completes faster.

Startup VAST Data has been showing off its SuperPOD credentials by announcing that Nvidia has certified its QLC flash array as a SuperPOD data store. It claims that its Universal Storage system is the first enterprise network-attached storage (NAS) system approved to support the Nvidia DGX SuperPOD.

DDN’s customers, however, also include data-intensive organizations and enterprises.

Kuckein told his audience that “DDN gives you 39x more writes per RU than a prominent newcomer to Nvidia data supply,” without naming the newcomer. He added that DDN provides “50x more IOPS/RU, 10x more writes/rack and 6x more capacity/watt.”

DDN says its EXAScaler can provide up to 8PB in 12RU of space. To match that, a VAST Data Ceres-based system would need 10 x 807.8TB 1RU storage enclosures (about 8.1PB) plus, say, 4RU for 4 x CNodes (controller nodes), making 14RU in total. When the Ceres box gets 60TB SSDs later in the year, that total will drop to 5 x 1.6PB 1RU enclosures plus the same 4RU for the controllers: 9RU in total, three fewer than DDN's 12RU.
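The rack-unit arithmetic in this comparison can be checked with a few lines of Python. This is a minimal sketch, assuming decimal units (1PB = 1,000,000GB), the article's 807.8TB and 1.6PB enclosure capacities, and its "say, 4U" allowance for CNodes; the helper names are hypothetical.

```python
# Hypothetical helper names; capacities and the 4RU CNode allowance come from the article.
TARGET_GB = 8_000_000          # 8PB, decimal units
CERES_TODAY_GB = 807_800       # 807.8TB per 1RU enclosure
CERES_60TB_SSD_GB = 1_600_000  # ~1.6PB per 1RU enclosure with 60TB SSDs
CNODE_RU = 4                   # "say, 4U" for 4 x CNodes (an assumption in the article)
DDN_RU = 12                    # DDN's stated footprint for 8PB


def enclosures_needed(target_gb: int, enclosure_gb: int) -> int:
    """Smallest number of enclosures whose combined capacity reaches target_gb."""
    # Integer ceiling division: -(-a // b) == ceil(a / b) for positive ints.
    return -(-target_gb // enclosure_gb)


def vast_rack_units(target_gb: int, enclosure_gb: int,
                    enclosure_ru: int = 1, cnode_ru: int = CNODE_RU) -> int:
    """Storage enclosures plus a fixed controller (CNode) allowance."""
    return enclosures_needed(target_gb, enclosure_gb) * enclosure_ru + cnode_ru


if __name__ == "__main__":
    for label, enclosure_gb in [("807.8TB enclosures", CERES_TODAY_GB),
                                ("1.6PB enclosures", CERES_60TB_SSD_GB)]:
        ru = vast_rack_units(TARGET_GB, enclosure_gb)
        print(f"{label}: {ru}RU vs DDN {DDN_RU}RU (difference {ru - DDN_RU:+d})")
```

Integer ceiling division is used rather than floating-point `math.ceil`, so a capacity that divides exactly (8PB over 1.6PB enclosures) cannot be rounded up to a spurious extra enclosure.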

A VAST source suggested that arguing about who can fit the most flash in a rack unit makes no more sense than arguing over how many angels can dance on the head of a pin, because customers don't care: they run out of power and cooling at each rack long before all the rack slots are filled. As the source put it: "If I have 12kW per rack, do I care if that's 24U or 32U of kit in a 42U rack?"

In the source's view, DDN's density comes at the price of having a lot of SSDs behind a small number of controllers. In 12U, DDN probably has only six controllers in three HA pairs, and that is going to bottleneck the 130 or so 60TB SSDs needed to reach 8PB.

A VAST system is going to need fewer SSDs, the source argued, because VAST claims to have more efficient erasure codes and better data reduction, and VAST customers can vary the number of CNodes to match the cluster's performance to their applications. If that flexibility means VAST uses a few more rack units, VAST sees that as a good trade.
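VAST's argument is that protection overhead and data reduction, not raw slot count, decide how many SSDs a system needs. The Python sketch below illustrates that trade under loudly hypothetical assumptions: an 8+2 erasure-code stripe with no data reduction versus a wide 146+4 stripe with 1.5:1 reduction. Neither configuration is a published DDN or VAST figure, and `ssds_needed` is a made-up helper.

```python
import math
from fractions import Fraction


def ssds_needed(target_tb: int, ssd_tb: int, data_shards: int,
                parity_shards: int, reduction_ratio: Fraction) -> int:
    """SSDs needed to store target_tb of logical data (hypothetical helper).

    Erasure coding keeps data_shards / (data_shards + parity_shards) of raw
    capacity usable; data reduction then multiplies how much logical data
    each usable TB can hold.
    """
    usable_fraction = Fraction(data_shards, data_shards + parity_shards)
    logical_tb_per_ssd = ssd_tb * usable_fraction * reduction_ratio
    # Fractions keep the arithmetic exact, so ceil() never rounds up spuriously.
    return math.ceil(Fraction(target_tb) / logical_tb_per_ssd)


if __name__ == "__main__":
    # Hypothetical configurations, not vendor figures.
    narrow = ssds_needed(8000, 60, 8, 2, Fraction(1))
    wide = ssds_needed(8000, 60, 146, 4, Fraction(3, 2))
    print(f"8+2 stripes, no reduction:      {narrow} x 60TB SSDs")
    print(f"146+4 stripes, 1.5:1 reduction: {wide} x 60TB SSDs")
```

With 60TB SSDs and an 8PB logical target, the first configuration needs 167 drives and the second 92. Real results depend entirely on the actual stripe geometry and on how compressible the customer's data is.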

DDN says it will add new search and tagging capabilities to its AI fabric in the second half of 2023. The software is designed to understand how data is represented across the different modalities used by AI applications.

VAST wants to knock DDN off its Nvidia DGX perch and reckons its Universal Storage has the edge over the Lustre-powered EXAScaler. DDN, claiming to be the world's largest privately owned storage supplier, does not want to let that happen. Pure Storage and NetApp also have skin in this QLC-based AI storage game. We're entering a multi-way fight for AI storage customers, so expect claim and counter-claim as supplier marketing departments become hyperactive.