Pinecone has made its AWS-supporting vector database available on the Azure and Google clouds and added a bulk object storage insert capability.

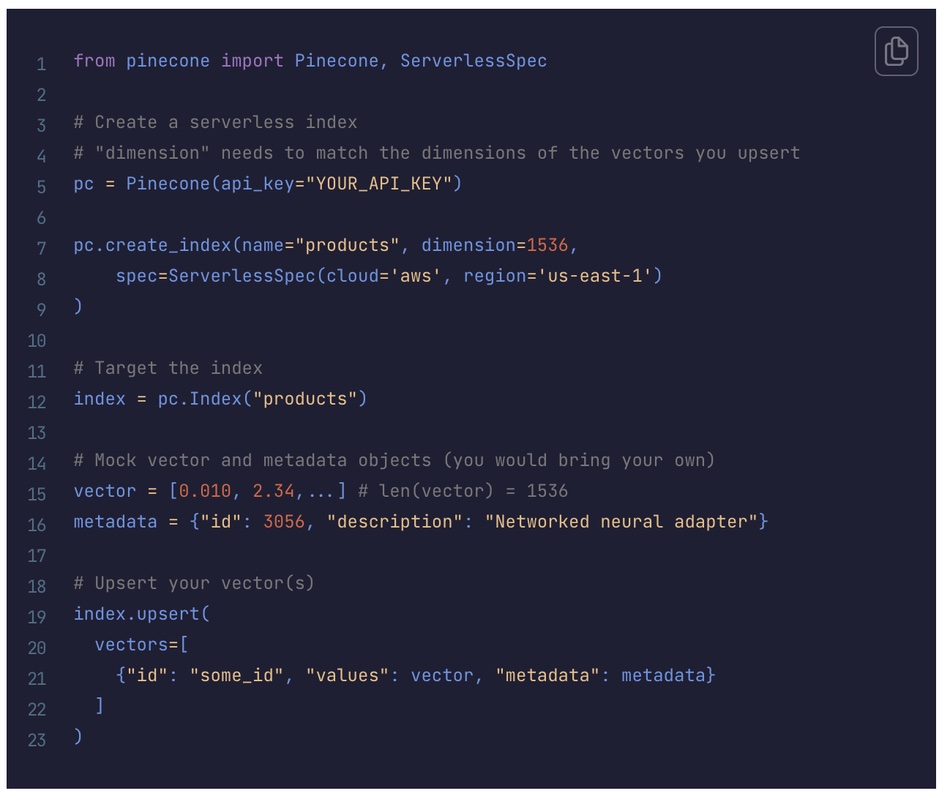

Startup Pinecone has developed a database specifically to store vector embeddings, the symbolic representations of multiple dimensions of text, image, audio, and video objects used in semantic search by generative AI’s large language models (LLMs) to build responses to users’ requests. Its vector database, already available on AWS, is serverless, meaning users don’t need to be concerned about the underlying server instance infrastructure across the now three public clouds.

Pinecone’s Director of Product Management, Jeff Zhu, writes in a blog post: “With our vector database at the core, Pinecone grounds AI applications in your company’s proprietary data.” A customer’s own data can be used to help with RAG (Retrieval-Augmented Generation), enabling a generally trained LLM to base its responses on a customer’s proprietary data, making them more accurate and less prone to erroneous results or “hallucinations.”

Cisco’s Sujith Joseph, Principal Engineer, Enterprise AI & Search, writes that by using Pinecone’s “vector database on Google Cloud, our enterprise platform team built an AI assistant that accurately and securely searches through millions of our documents to support our multiple orgs across Cisco.”

Pinecone has released Pinecone Assistant in beta as a managed service on Google Cloud. This, Zhu says, “delivers high-quality and dependable answers for text-heavy technical data such as financial and legal documents.” Pinecone points out that “all the infrastructure, operations, and optimization of a complex Q&A system are handled for you” through a simple API.

Zhu writes: “Along with AWS and GCP, you can now build with serverless on the cloud and region that suits you best [and] we’re introducing new features to give you greater control and protection over your data. This includes backups for serverless indexes and more granular access controls.”

The backups, he adds, “enable seamless backup and recovery of your data.” Available to all Standard and Enterprise users, these features allow you to:

- Protect your data from system failures or accidental deletes

- Revert bad updates or deletes and restore an index to a known, good state

- Meet compliance requirements (e.g. SOC 2 audits)

“You can manually backup and restore your serverless indexes via a simple API. Backups for serverless are now in public preview for all three clouds.”

The granular access controls come from an API Key Roles feature, also in public preview. These “enable Project Owners to set granular access controls – NoAccess, ReadOnly, or ReadWrite – for both the Control Plane and Data Plane within Pinecone serverless.”

Pinecone will “be introducing more User Roles at the Organization and Project levels in the coming weeks. Organization-level User Roles including Org Owner, Billing Admin, Org Manager, and Org Member will let you determine access to managing projects, billing, and other users in the organization. Project-level User Roles including Project Owner, Project Editor, and Project Viewer will let you determine access to API keys, the Control and Data planes, and other users in the project.”

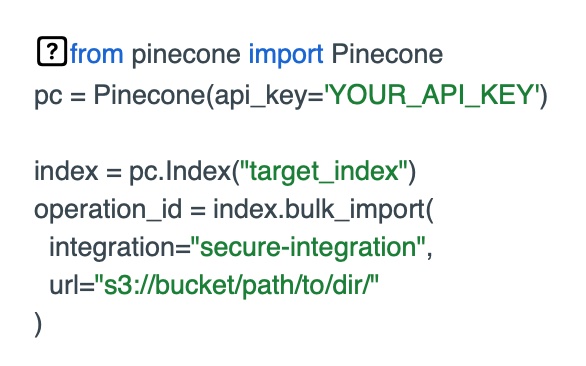

Object storage upload

The company has also enabled bulk insert of records from object storage, which – we’re told – is up to six times cheaper than doing normal record-by-record updates and inserts (upserts, in Pinecone’s terminology). It’s intended for the insertion of millions of objects. Data is read from a secure bucket in a customer’s object storage. This is an asynchronous, background, long-running batch-like process with, Pinecone says, “no need for performance tuning or monitoring the status of your import operation.”

As a cost example, Pinecone suggests that “ingesting 10 million records of 768-dimension will cost $30 with bulk import.”

Customers first need to integrate their object store (e.g. Amazon S3) with Pinecone. This integration lets the customer store IAM credentials for their object store, which can be set up or managed via the Pinecone console. Import is done from a new API endpoint that supports Parquet source files.

We asked if Pinecone vectorizes the object data during the insert from object storage. Zhu told us: ”The current implementation requires that the content has already been vectorized. We are exploring vectorization during import for a future release.”

Startup Onehouse has a vector embeddings generating capability and integrates with Pinecone.

We understand that by developing a specialized and serverless vector database, Pinecone reckons it will have both a performance (search time, latency) edge on competitors, including other dedicated vector databases and multi-protocol databases such as SingleStore.

Availability

Pinecone serverless is available for all Standard and Enterprise customers and currently supports the “eastus2” (Virginia) region on Azure, and Google Cloud’s “us-central1” (Iowa) and “europe-west4” (Netherlands), with more regions coming soon for both. Customers can start building on Pinecone serverless using one of Pinecone’s sample notebooks or subscribe through the Azure or Google Cloud marketplaces.

Import from object storage is now available in early access mode for Standard and Enterprise users at a flat rate of $1.00/GB. It is currently limited to Amazon S3 for serverless AWS regions. Support for Google Cloud Storage (GCS) and Azure Blob Storage will follow in the coming weeks.

Bulk imports are limited to 200 million records at a time during early access and import operations are restricted to writing records into a new serverless namespace; you currently cannot import data into an existing namespace.

Bootnote

Pinecone’s VP of R&D, Ram Sriharsha, has provided a technical deep dive on the company’s serverless database, writing: “Traditionally, vector databases have used a search engine architecture where data is sharded across many smaller individual indexes, and queries are sent to all shards. This query mechanism is called scatter-gather – as we scatter a query across shards before gathering the responses to produce one final result. Our pod-based architecture uses this exact mechanism.

“Before Pinecone serverless, vector databases had to keep the entire index locally on the shards. This approach is particularly true of any vector database that uses HNSW (the entire index is in memory for HNSW), disk-based graph algorithms, or libraries like Faiss. There is no way to page parts of the index into memory on demand, and likewise, in the scatter-gather architecture, there is no way to know what parts to page into memory until we touch all shards.

“We need to design vector databases that go beyond scatter-gather and likewise can effectively page portions of the index as needed from persistent, low-cost storage. That is, we need true decoupling of storage from compute for vector search.”

His blog then describes Pinecone’s serverless approach and provides performance numbers.