Analysis: For 17 years, a small California firm called IC Manage quietly toiled away as it built up a business catering to the specialist requirements of integrated circuit (IC) and electronic design automation (EDA) design vendors. For one of its co-founders, Dean Drako, IC Manage was, for many years, just a side gig while he concentrated on running and exiting from another company he founded – Barracuda Networks.

Today, the company thinks that Holodeck, the hybrid cloud data management software it has developed for EDA companies, is equally applicable to other industries where tens of millions of files are involved.

Holodeck provides public cloud P2P file caching of an on-premises NFS filer – and IC Manage says that any on-premises file-based workloads that need to burst to the public cloud can use it. To this end it is working with several businesses, including a major Hollywood film studio, on potential film production, special FX, and post-processing applications.

Let’s take a look at IC Manage Holodeck and see why it might be extensible from semiconductor design companies to the general enterprise market.

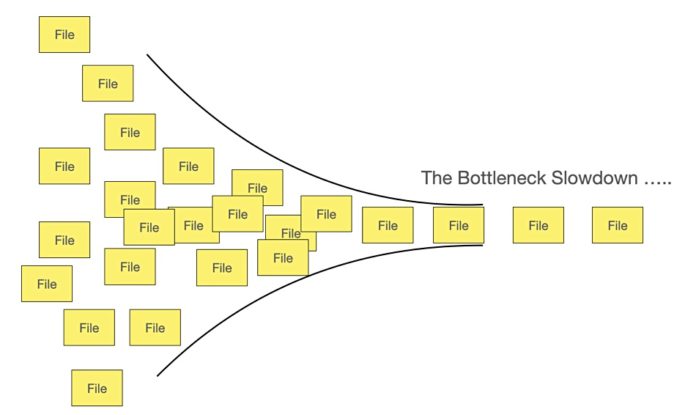

For EDA firms, tens of millions of files may be involved in circuit simulations run on their on-premises compute server farm. The NFS filer – NetApp or Isilon, for example – could be a bottleneck, slowing down file access at the million-plus file level. IC Manage EVP Shiv Sikand told us he has little respect for NFS file stores: “NFS has terrible latency; it doesn’t scale… I never met a filer I liked.”

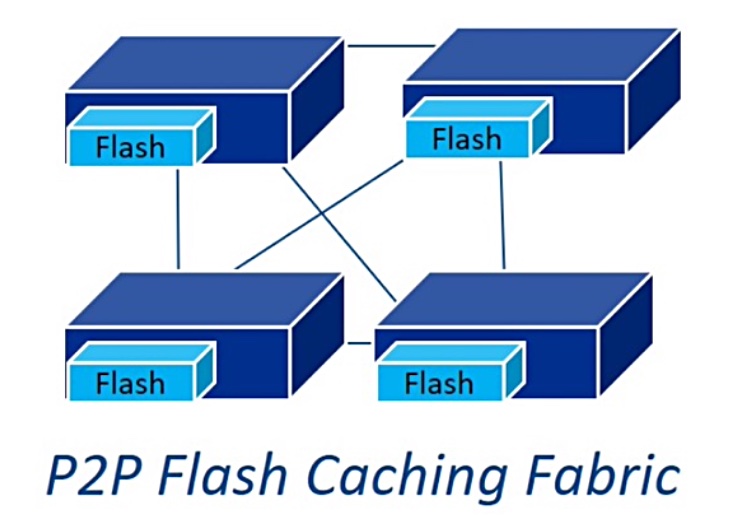

Holodeck accelerates file access workloads by caching file data in a peer-to-peer network. The cache uses NVMe SSDs in on-premises data centres. It also builds a similar cache in the public cloud so that on-premises workflows can burst to the cloud. In this scenario, the Holodeck in-cloud cache acts as an NFS filer.

The company

IC Manage was founded in 2003 by CEO Dean Drako and Shiv Sikand. The company says it is largely self-funded. Drako founded and ran Barracuda Networks from 2003 to 2012, with an IPO in 2013, and he lists himself as an investor. He has invested in the file metadata acceleration startup InfiniteIO.

Drako also runs Eagle Eye Networks, which acts as a video surveillance management facility in the public cloud. Together with Sikand he co-founded Drako Motors, which is building the limited edition four-seat Drako GTE electric supercar. Just 25 will be built and sold for $1.25m apiece.

That means neither Drako nor Sikand is full-time on IC Manage; this is not a classic Silicon Valley startup with hungry young entrepreneurs struggling in a garage. But Sikand shrugs this off: “Is Elon Musk part-time on Tesla?”

Holodeck on-premises

In a DeepChip forum post in 2018, Sikand said IC Manage’s customers “have 10’s to 100’s of millions of dollars and man-hours invested in on-premise workflows and EDA tools and scripts and methodologies and compute farms” that they know work. They don’t want to interfere with or upset this structure.

The “big chip design workflows,” he wrote, are “typically mega-connected NFS environments, comprised of 10’s of millions of files, easily spanning 100 terabytes.” These can include:

- EDA vendor tool binaries – with years of installed trees

- 100s of foundry PDKs, 3rd party IP, and legacy reference data

- Massive chip design databases containing timing, extracted parasitics, fill, etc.

- A sea of set-up and run scripts to tie all the data together

- A nest of configuration files to define the correct environment for each project.

Sikand told us: “These are 10’s of millions of horrifically intertwined on-premise files.”

Holodeck speeds file data access partly because it “separates out data from whole files and only transfers extents (sections),” Sikand wrote in his forum post. Holodeck accelerates workloads by building a scale-out peer-to-peer cache using 50GB to 2TB NVMe SSDs per server node. These sit in front of the bottlenecked NFS filer and enable parallel access to file data in the cache. All nodes in the compute grid share the local data – both bare metal and virtual machines. More NVMe flash nodes can be added to scale out performance.
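The extent-based idea can be illustrated with a minimal Python sketch. It is not IC Manage's implementation: the 1 MiB extent size, the `ExtentCache` class and the `fetch_extent` callback are all hypothetical names chosen for illustration. The sketch assumes fixed-size extents and shows how a read of a byte range pulls only the extents that cover it, rather than the whole file.

```python
from typing import Callable, Dict, List, Tuple

EXTENT_SIZE = 1024 * 1024  # hypothetical 1 MiB extent size, not an IC Manage figure


def extents_for_range(offset: int, length: int,
                      extent_size: int = EXTENT_SIZE) -> List[int]:
    """Return the indices of the fixed-size extents that cover a byte range."""
    if length <= 0:
        return []
    first = offset // extent_size
    last = (offset + length - 1) // extent_size
    return list(range(first, last + 1))


class ExtentCache:
    """Toy cache keyed by (path, extent index) that fetches only missing extents."""

    def __init__(self, fetch_extent: Callable[[str, int], bytes],
                 extent_size: int = EXTENT_SIZE) -> None:
        self._fetch_extent = fetch_extent  # stands in for a read from the slow filer
        self._extent_size = extent_size
        self._store: Dict[Tuple[str, int], bytes] = {}
        self.fetches = 0  # counts trips to the backing filer

    def read(self, path: str, offset: int, length: int) -> bytes:
        chunks = []
        for index in extents_for_range(offset, length, self._extent_size):
            key = (path, index)
            if key not in self._store:
                self._store[key] = self._fetch_extent(path, index)
                self.fetches += 1
            chunks.append(self._store[key])
        # Trim the joined extents down to the requested byte range.
        start = offset % self._extent_size
        return b"".join(chunks)[start:start + length]
```

In this sketch a second read that falls inside already-cached extents does not touch the filer at all, which is the effect the cache is meant to have on a bottlenecked NFS back end.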

According to IC Manage, this ensures consistent low latency and high bandwidth parallel performance, and it can expand to thousands of nodes.

The P2P cache can be set up on a remote site – and it can also be set up in the public cloud, using a Holodeck gateway to support compute workload bursting.

Sikand told us people criticise the P2P approach on the grounds that “no-one else is doing this. You must be wrong.” His response to the critics – who are “not the sharpest knives in the box” – is that this works for semiconductor design and manufacturing shops.

Holodeck in the public cloud

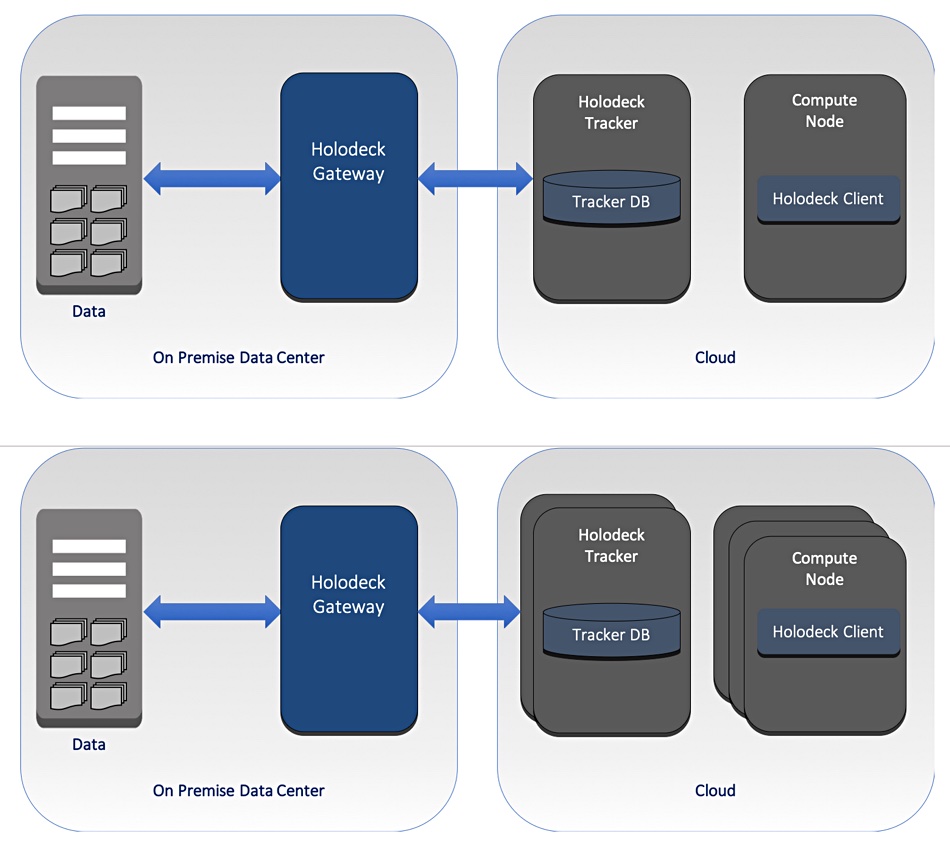

The same P2P cache approach that Holodeck uses on-premises is also used in the public cloud. The Holodeck software sets up a cloud Gateway system to take NFS filer information and send it to a database system, called ‘Tracker’. Public cloud peer nodes in the P2P facility use Tracker, and compute instances – called Holodeck clients – access these peer nodes. The compute instances and the peer nodes both scale out.

The Tracker database can be provisioned on block storage; the peer nodes use only small amounts of temporary storage on high-performance NVMe devices, such as those in AWS i3 instances.

The Holodeck Client compute nodes access the P2P cache as if it were an NFS filer and job workflows execute in the cloud exactly as they do on premises. Holodeck’s peer-to-peer sharing model allows any node to read or write data generated on any other node. This sharing model has the same semantics as NFS, and so ensures full compatibility with all the on-premises workflows.

The good thing here is that the on-premises file data is never stored outside the caches in the public cloud. There is no second copy of all the on-premises files in the cloud, which reduces costs. Any Tracker database changes made as a result of the cloud compute jobs are tracked by Holodeck and only these changes are sent back on-premises, meaning that cloud egress charges are low.

Holodeck supports selective writeback control to prevent users from inadvertently pushing unnecessary public cloud data back across the wires to the on-premises base.
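A rough Python sketch of delta writeback with selective control follows. It is only an assumption about how such a scheme could look, not a description of Holodeck's internals: the `WritebackTracker` class, its method names and the glob-style exclude patterns are all hypothetical. The point is that only paths changed in the cloud are candidates for transfer, and a filter can hold back data that nobody needs on-premises.

```python
from fnmatch import fnmatchcase
from typing import Dict, Iterable


class WritebackTracker:
    """Toy tracker: records cloud-side writes and filters what is sent back."""

    def __init__(self, exclude_patterns: Iterable[str] = ()) -> None:
        self._dirty: Dict[str, bytes] = {}      # path -> latest data written in the cloud
        self._exclude = list(exclude_patterns)  # hypothetical glob patterns to hold back

    def record_write(self, path: str, data: bytes) -> None:
        """Remember that a cloud job created or changed this path."""
        self._dirty[path] = data

    def pending_writeback(self) -> Dict[str, bytes]:
        """Return only changed paths that are not excluded from writeback."""
        return {
            path: data
            for path, data in self._dirty.items()
            if not any(fnmatchcase(path, pattern) for pattern in self._exclude)
        }


tracker = WritebackTracker(exclude_patterns=["*.log", "/proj/tmp/*"])
tracker.record_write("/proj/results/timing.rpt", b"ok")
tracker.record_write("/proj/run.log", b"noise")
tracker.record_write("/proj/tmp/scratch.db", b"x")
print(sorted(tracker.pending_writeback()))
```

Files that were only read in the cloud never enter the dirty set, so in this model they generate no egress traffic at all.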

Data coherency between the peer nodes is maintained using the Raft distributed consensus protocol, which can withstand node failures. Basically, if a majority of the peer nodes agree on the same change, such as an update to a file metadata value, then that is the single version of the truth and a failed node can be worked around.
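The majority rule at the heart of this can be shown in a few lines of Python. This is only the quorum arithmetic that Raft relies on, not a Raft implementation (there is no leader election or log replication here), and the function names are illustrative.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a majority of the cluster."""
    return cluster_size // 2 + 1


def is_committed(acks: int, cluster_size: int) -> bool:
    """A change counts as committed once a majority of nodes has acknowledged it."""
    return acks >= majority(cluster_size)


def tolerated_failures(cluster_size: int) -> int:
    """Number of nodes that can fail while a majority can still be reached."""
    return cluster_size - majority(cluster_size)


for size in (3, 4, 5):
    print(size, majority(size), tolerated_failures(size))
```

A five-node group needs three acknowledgements and so survives two failed nodes, while a four-node group also needs three and survives only one, which is why odd-sized groups are the usual choice.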

Competition

Panzura’s Freedom Cloud NAS offering runs on-premises and in the public clouds. This enables file transfer between distributed EDA systems to accomplish remote site access to another site’s files. It uses compression and deduplication to reduce file transfer data amounts.

Nasuni also provides a NAS in the cloud with on-premises access.

As soon as an EDA customer has a scale-out file system which supports flash drives, such as one from Qumulo or WekaIO, the need for Holodeck on-premises is reduced. If that file system runs in the public cloud the need for Holodeck hybrid cloud reduces further.

However, Holodeck’s return of only updated data from the cloud (delta changes) minimises cloud egress charges, and that could be valuable. Sikand has this to say about WekaIO: “WekaIO is blindingly fast but it makes your nose bleed when you pay the bill.”