Disk drive IO limitations will cripple the use of larger disk drives in petabyte-scale data stores, according to VAST Data’s Howard Marks, who says flash is the only way to go because it can keep up with IO demands.

Marks is a well-regarded data storage analyst who these days earns his crust as “Technologist Extraordinary and Plenipotentiary” at VAST Data, the all-flash storage array vendor. Happily for the industry at large, he continues to publish his insights via the VAST company blog.

In his article, “The Diminishing Performance of Disk Based Storage”, Marks notes that a disk drive has a single IO channel, fed by a read/write head skittering about on the surface of the platters. Every IO has to wait for the head to seek to the destination track and for the platter to rotate into position, resulting in 5ms to 10ms latency.

This is “20-40 times the 500μs SSD read latency” and limits the number of IOs per second (IOPS) that a disk drive can sustain and also the bandwidth in MB/sec that it can deliver.
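The link between latency and IOPS can be sketched with a little arithmetic. The snippet below is a back-of-the-envelope illustration, not Marks' own calculation: it assumes the drive services one random IO at a time with no help from queueing or caching, so the ceiling is simply one second divided by the per-IO latency. The function name `iops_ceiling` is made up for this example.

```python
def iops_ceiling(latency_ms: float) -> float:
    """Upper bound on random IOPS for a device that completes one IO
    at a time, each taking latency_ms milliseconds (assumption: no
    command queueing, no cache hits)."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# The 5ms to 10ms range quoted for disk drives
fast_disk = iops_ceiling(5)    # 200.0
slow_disk = iops_ceiling(10)   # 100.0

print(f"5ms per IO  -> at most {fast_disk:.0f} IOPS")
print(f"10ms per IO -> at most {slow_disk:.0f} IOPS")
```

Under that assumption, the 150 IOPS per drive used later in the article falls between the two bounds.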

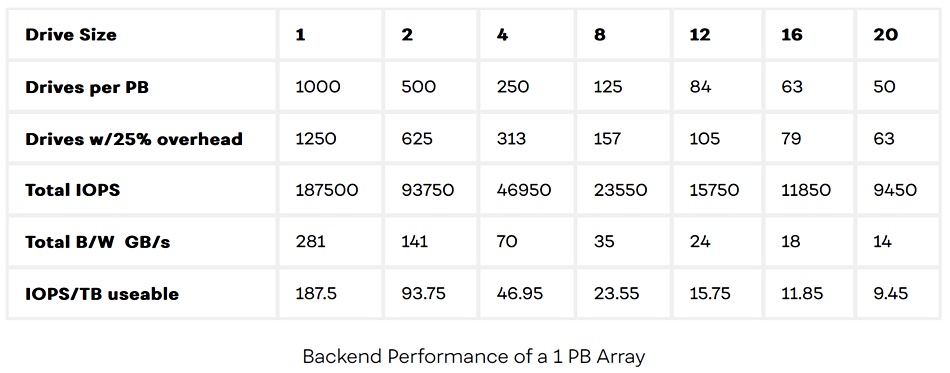

“As disk drives get bigger, the number of drives needed to build a storage system of any given size decreases and fewer drives simply deliver less performance,” Marks writes. He illustrates this point about overall IOPS and bandwidth with a table for a 1PB array, assuming each drive delivers 225MB/s of sustained bandwidth and 150 IOPS:

This table shows 1,000 x 1TB drives are needed for a 1PB array, dropping to 50 x 20TB drives. He concludes: “As disk drives grow over 10 TB, the I/O density falls to just a handful of IOPS per TB. At that level, even applications we think of as sequential, like video servers, start to stress the hard drives.”
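The drive-count and I/O-density arithmetic behind those figures is easy to reproduce. The sketch below is our own illustration rather than Marks' spreadsheet: it takes the 150 IOPS per drive quoted above, treats 1PB as 1,000TB, and ignores RAID or erasure-coding overhead and spare drives. The function name `array_profile` and the list of drive sizes are assumptions made for the example.

```python
import math

IOPS_PER_DRIVE = 150       # per-drive figure quoted in the article
ARRAY_CAPACITY_TB = 1000   # 1PB in decimal units, matching 1,000 x 1TB drives


def array_profile(drive_tb, capacity_tb=ARRAY_CAPACITY_TB,
                  iops_per_drive=IOPS_PER_DRIVE):
    """Return drive count, aggregate IOPS and IOPS per TB for an array
    built from identical drives (assumption: no protection overhead)."""
    drives = math.ceil(capacity_tb / drive_tb)
    return {
        "drive_tb": drive_tb,
        "drives": drives,
        "total_iops": drives * iops_per_drive,
        "iops_per_tb": iops_per_drive / drive_tb,
    }


# Illustrative drive sizes; not necessarily the rows in Marks' table
for size_tb in (1, 2, 4, 10, 20):
    p = array_profile(size_tb)
    print(f"{p['drive_tb']:>2}TB drives: {p['drives']:>5} drives, "
          f"{p['total_iops']:>7} IOPS total, "
          f"{p['iops_per_tb']:>6.1f} IOPS/TB")
```

With these inputs a 10TB drive works out at 15 IOPS per TB and a 20TB drive at 7.5, in line with the "handful of IOPS per TB" Marks describes.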

I-O. I-O. It’s off to work we go (slow)

He says disk drives cannot get faster by spinning faster because the power requirements shoot up. Using two actuators (read/write heads or positioners in his terms) per disk effectively splits a disk drive in half and so can theoretically double the IOPS and bandwidth. “But dual-positioners will add some cost, and only provide a one-time doubling in performance to 384 IOPS/drive; less than 1/1000th what an equal sized QLC SSD can deliver.”

Marks also points to the ancillary or slot costs of a disk drive array – the enclosure, its power and cooling, the software needed and the support.

He sums up: “As hard drives pass 20TB, the I/O density they deliver falls below 10 IOPS/TB which is too little for all but the coldest data.”

It’s no use constraining disk drive capacities either.

“When users are forced to use 4 TB hard drives to meet performance requirements, their costs skyrocket with systems, space and power costs that are several times what an all-flash system would need.”

“The hard drive era is coming to an end. A VAST System delivers much more performance, and doesn’t cost any more than the complex tiered solution you’d need to make big hard drives work.”

Comment

As we can see from the 1PB data store example that Marks provides, 50 x 20TB drives pump out 14GB/sec in total while 500 x 2TB drives pump out 141GB/sec. Ergo, using 50 x 20TB drives is madness.

But 1PB data stores are becoming common today, and they will be tomorrow’s 10PB data stores. Such large arrays will need more drives. Therefore, as night follows day, and using Marks’ own numbers, the overall IOPS and bandwidth will grow in proportion.

A 10PB store would need 500 x 20TB drives, the same drive count as a 1PB store built from 2TB drives. Its total IOPS and bandwidth can therefore be read from the 2TB drive size column in Marks’ table above: 141GB/sec. Data could be striped across the drives in the arrays that form the data store and so increase bandwidth further.
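The scaling argument can be checked with the same kind of arithmetic. This is a rough sketch under our own assumptions: 150 IOPS per drive as quoted earlier, decimal terabytes, and no allowance for data protection overhead. The helper `store_totals` is a name invented for the example. It reports the aggregate figure this comment relies on alongside the per-terabyte figure that Marks' argument turns on.

```python
IOPS_PER_DRIVE = 150  # per-drive figure quoted earlier in the article


def store_totals(capacity_tb: int, drive_tb: int,
                 iops_per_drive: int = IOPS_PER_DRIVE):
    """Drive count, aggregate IOPS and IOPS per TB for a data store
    (assumption: identical drives, no protection overhead)."""
    drives = -(-capacity_tb // drive_tb)  # ceiling division
    total_iops = drives * iops_per_drive
    return drives, total_iops, total_iops / capacity_tb


one_pb_small_drives = store_totals(1_000, 2)     # 1PB built from 2TB drives
ten_pb_big_drives = store_totals(10_000, 20)     # 10PB built from 20TB drives

for label, (drives, iops, density) in (
    ("1PB  on 2TB drives ", one_pb_small_drives),
    ("10PB on 20TB drives", ten_pb_big_drives),
):
    print(f"{label}: {drives} drives, {iops} IOPS total, "
          f"{density:.1f} IOPS/TB")
```

Both configurations come out at 500 drives and the same aggregate IOPS, while the IOPS available per terabyte stored differs by a factor of ten.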

Our caveats aside, Marks raises some interesting points about disk drive scalability for big data stores. Infinidat, which builds big, fast arrays incorporating high-capacity hard drives, is likely to have a very different take on the matter. We have asked the company for its thoughts and will follow up in a subsequent article.