Data search supplier Elastic is trying to make it easier for developers to stop large language models (LLMs) from generating hallucinatory (false) responses, by letting them add proprietary data and test LLM/RAG combinations.

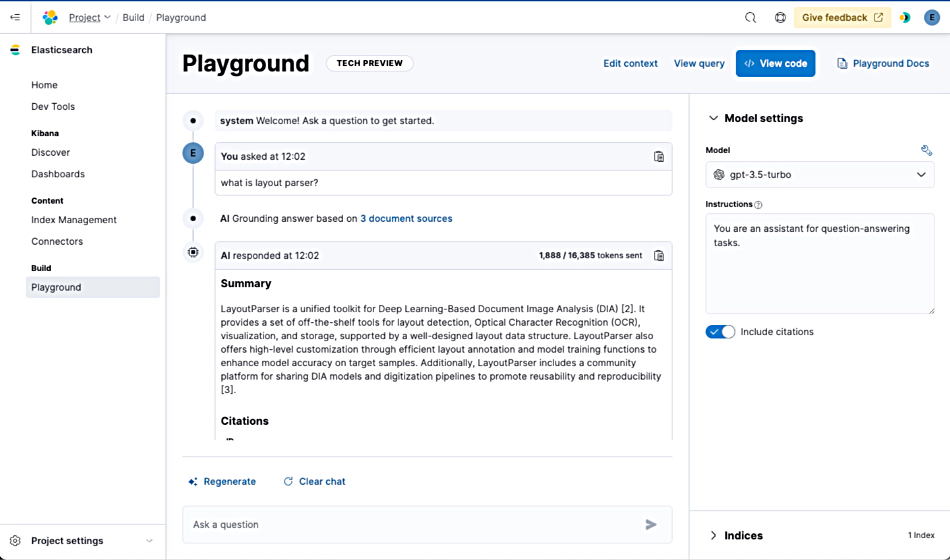

It has announced a Playground facility that lets such developers bring proprietary data, indexed in the company's Elasticsearch, to LLMs through retrieval-augmented generation (RAG) of model responses to user input. The aim is to speed up iterative testing and development of RAG/LLM combos: developers compare responses from two versions of an LLM, the A and B versions, choose the better one, then refine it by tuning prompts and data chunking for another round of A/B testing.
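Chunking is one of the parameters tuned between A/B rounds. As a rough illustration only, and not Elastic's implementation, the sketch below is a hypothetical fixed-size word chunker with overlap; `chunk_words`, `chunk_size` and `overlap` are names invented for this example, and the two settings are the kind of knobs a developer might vary between an A run and a B run.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Hypothetical helper: split text into chunks of up to chunk_size words,
    where consecutive chunks share `overlap` words of context."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk has reached the end of the text
        if start + chunk_size >= len(words):
            break
    return chunks


# Two candidate chunking configurations for an A/B comparison
doc = "a b c d e f g h i j"
config_a = chunk_words(doc, chunk_size=4, overlap=0)
config_b = chunk_words(doc, chunk_size=4, overlap=2)
```

Larger overlap keeps more context around chunk boundaries at the cost of more, partly redundant, chunks to index and retrieve.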

Matt Riley, global VP and GM, Search at Elastic, said: “While prototyping conversational search, the ability to experiment with and rapidly iterate on key components of a RAG workflow is essential to get accurate and hallucination-free responses from LLMs.”

All this takes place in an Elasticsearch environment built on Elastic's AI platform, which contains a vector database along with the Elasticsearch indices holding the proprietary data. A blog says: “Any data can be used, even BM25-based indices. Your data fields can optionally be transformed using text embedding models (like our zero-shot semantic search model ELSER), but this is not a requirement.”

ELSER (Elastic Learned Sparse EncodeR) is a sparse vector embedding model that makes plain text searchable through semantic (vector-based) search.
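A sparse embedding represents text as a small set of weighted tokens, which can include related terms that never appeared in the original text, and relevance is then roughly a dot product over the tokens a query and a document share. The sketch below is a simplified, hypothetical model of that scoring; the token weights are made up for illustration and are not real ELSER output, and `sparse_dot` is a name invented here.

```python
def sparse_dot(query: dict[str, float], doc: dict[str, float]) -> float:
    """Hypothetical helper: dot product of two sparse token->weight vectors.
    Only tokens present in both vectors contribute to the score."""
    # Iterate over the smaller vector; the result is the same either way
    if len(query) > len(doc):
        query, doc = doc, query
    return sum(w * doc[t] for t, w in query.items() if t in doc)


# Made-up weights: a query mentioning "automobile" might expand to "car" and "vehicle"
query_vec = {"car": 2.0, "vehicle": 0.5}
doc_vec = {"vehicle": 1.0, "engine": 0.5, "car": 0.25}
score = sparse_dot(query_vec, doc_vec)  # 2.0*0.25 + 0.5*1.0 = 1.0
```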

LLMs can run directly inside this AI platform, or external ones can be referenced through an Open Inference API. This API supports Cohere, AI Studio, and “a growing list of inference providers,” Elastic says.

Developers work through a low-code interface: a GUI with click-and-select or drag-and-drop elements. The vector database supports hybrid search, meaning a combination of vector-based and plain text-based search, and these searches are built with Elastic's Retriever functions.
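Hybrid search has to merge two ranked result lists, one from text (BM25) search and one from vector search. One common technique for this is reciprocal rank fusion (RRF), which Elasticsearch offers as a retriever; the sketch below is a plain-Python illustration of the RRF formula, not Elastic's code. The constant `k = 60` is assumed here as a typical default, and the document IDs are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists of doc IDs with RRF: score(d) = sum of 1 / (k + rank)
    over every list containing d, with rank starting at 1.
    Returns doc IDs sorted by fused score, highest first."""
    scores: dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Descending score; ties broken by doc ID so the output is deterministic
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a BM25 query and a vector query
bm25_hits = ["d1", "d2", "d3"]
vector_hits = ["d3", "d1", "d4"]
fused = reciprocal_rank_fusion([bm25_hits, vector_hits])  # ["d1", "d3", "d2", "d4"]
```

RRF uses only ranks, not raw scores, so it sidesteps the problem that BM25 scores and vector similarities sit on different, incomparable scales.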

They can also use LLMs, referred to as chat completion models, from OpenAI (GPT-4o), the Azure OpenAI Service, or Anthropic via Amazon Bedrock.

Riley added: “Developers use the Elasticsearch AI platform, which includes the Elasticsearch vector database, for comprehensive hybrid search capabilities and to tap into innovation from a growing list of LLM providers. Now, the Playground experience brings these capabilities together via an intuitive user interface, removing the complexity from building and iterating on generative AI experiences, ultimately accelerating time to market for our customers.”

Our understanding is that GenAI critically depends on responses being accurate and complete and containing no false information. It has yet to be proved that RAG-assisted LLMs can deliver this. Elastic's Playground should help show whether RAG techniques can make LLMs more accurate, and so encourage their use among Elastic's customer base.

An Elastic blog discusses how to use the Playground in some detail.