A Panmnesia scheme to bulk up a GPU’s memory by adding fast CXL-accessed external memory to a unified virtual memory space has won a CES Innovation award.

Panmnesia says large-scale GenAI training jobs can be memory-bound because GPUs, limited to GBs of high-bandwidth memory (HBM), could need TBs instead. The general fix for this problem has been to add more GPUs, which provides more memory but at the cost of redundant GPUs. Panmnesia instead uses CXL (Compute Express Link) technology, which adds external memory to a host processor across the PCIe bus, mediated by its CXL 3.1 controller chip. The company says the controller has round-trip times of less than 100 ns, more than 3x lower than the 250 ns needed by SMT (Simultaneous Multi-Threading) and TPP (Transparent Page Placement) approaches.

A Panmnesia spokesperson stated: “Our GPU Memory Expansion Kit … has drawn significant attention from companies in the AI datacenter sector, thanks to its ability to efficiently reduce AI infrastructure costs.”

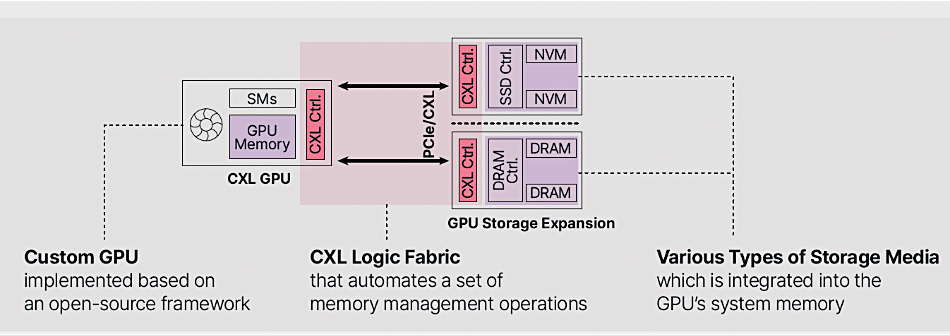

The technology was revealed last summer and shown at the OCP Global Summit in October. The company has a downloadable CXL-GPU technology brief, which says its CXL Controller has a two-digit-nanosecond latency, understood to be around 80 ns. A high-level diagram in the document shows the setup with either DRAM or NVMe SSD endpoints (EPs) hooked up to the GPU:

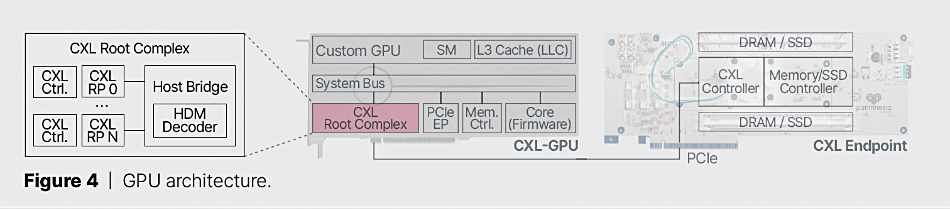

In more detail, a second Panmnesia diagram shows the GPU linked across the PCIe bus to a CXL Root Complex, or host bridge device, which combines the GPU’s high-bandwidth memory (host-managed device memory) with CXL endpoint device memory in a unified virtual memory (UVM) space.

This host bridge device “connects to a system bus port on one side and several CXL root ports on the other. One of the key components of this setup is an HDM decoder, responsible for managing the address ranges of system memory, referred to as host physical address (HPA), for each root port. These root ports are designed to be flexible and capable of supporting either DRAM or SSD EPs via PCIe connections.” The GPU addresses all the memory in this unified and cacheable space with load-store instructions.
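The address-range role of the HDM decoder can be illustrated with a minimal Python sketch. It is not Panmnesia's implementation: the class names, the two example ranges, and their sizes are all hypothetical, and a real CXL HDM decoder is a hardware block with additional features, such as interleaving, that are omitted here. The sketch only shows the basic idea of mapping a host physical address (HPA) to the root port, and the DRAM or SSD endpoint, that owns it.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class HdmRange:
    """One hypothetical decoder entry: an HPA window owned by a root port."""
    base: int        # first host physical address (HPA) in the window
    size: int        # window length in bytes
    root_port: int   # index of the root port that serves this window
    kind: str        # endpoint type behind the port: "DRAM" or "SSD"

    def contains(self, hpa: int) -> bool:
        return self.base <= hpa < self.base + self.size


class HdmDecoder:
    """Simplified model: non-overlapping HPA windows, no interleaving."""

    def __init__(self, ranges: List[HdmRange]) -> None:
        self.ranges = sorted(ranges, key=lambda r: r.base)
        # Reject overlapping windows so every HPA has at most one owner.
        for prev, cur in zip(self.ranges, self.ranges[1:]):
            if prev.base + prev.size > cur.base:
                raise ValueError("overlapping HPA ranges")

    def route(self, hpa: int) -> Optional[HdmRange]:
        """Return the window that owns this HPA, or None if unmapped."""
        for r in self.ranges:
            if r.contains(hpa):
                return r
        return None


GIB = 1 << 30

# Assumed layout for illustration only: a DRAM endpoint on port 0
# followed by a larger SSD endpoint on port 1.
decoder = HdmDecoder([
    HdmRange(base=0, size=64 * GIB, root_port=0, kind="DRAM"),
    HdmRange(base=64 * GIB, size=512 * GIB, root_port=1, kind="SSD"),
])

hit = decoder.route(100 * GIB)
print(hit.root_port, hit.kind)
```

In this model a load or store from the GPU needs no knowledge of which endpoint type backs an address; the decoder lookup alone determines where the request goes, which is what lets DRAM and SSD endpoints share one address space.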

A YouTube video presents Panmnesia’s CXL-access GPU memory scheme in simplified form.