Volumez has extended its cloud-delivered block storage provisioning service for containerized applications to GenAI workloads with its Data Infrastructure-as-a-Service (DIaaS) product.

The company says its technology can maximize GPU utilization and automate AI and machine learning (ML) pipelines. It argues that existing pipelines for delivering data and setting up AI/ML infrastructure are hampered by storage inefficiencies, underutilized GPUs, over-provisioned resources, unbalanced system performance, increased cost, complex management, and poorly integrated tooling, all of which drain the AI pipeline team’s bandwidth and delay projects.

John Blumenthal, Volumez’s Chief Product and Business Officer, noted at a January IT Press Tour session in Silicon Valley that AI/GenAI workloads demand concentrated compute and storage power in a dense infrastructure. Such density, he argued, delivers sustainability, cost efficiency, and energy optimization while reducing hardware sprawl.

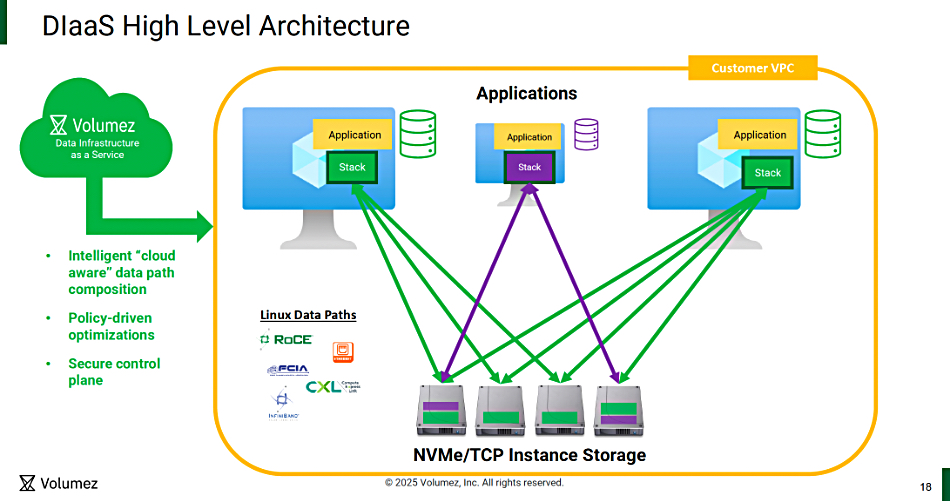

Volumez DIaaS builds on the company’s understanding of cloud providers’ Infrastructure-as-a-Service (IaaS) products and aims to create a balanced infrastructure for AI workloads. This includes a storage infrastructure built from declarative, composed NVMe/TCP instances. It uses intrinsic Linux services, with no storage controller in the data path.

The context here is that public clouds such as AWS and Azure rent out compute and storage resources and have no incentive to help customers consume those resources efficiently and evenly. Volumez makes its money in the gap between the public clouds, which optimize their resources for their own benefit, and customers, who need to use those same resources efficiently in terms of performance, cost, and operational simplicity.

Volumez claims its block storage offers better price performance than AWS instances, according to a company chart.

Volumez can set up the AWS or Azure data infrastructure from a data scientist’s notebook: it is imported as a PyTorch library and automatically calculates the storage infrastructure requirements.
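
Volumez has not described how this calculation works. As a rough illustration only, the sketch below shows the kind of arithmetic such a tool might perform: sizing the read bandwidth needed to keep every GPU fed, plus dataset capacity, with a safety margin. `TrainingJob`, `estimate_storage_requirements`, the `headroom` factor and all the numbers are hypothetical and are not part of the Volumez library or its API.

```python
from dataclasses import dataclass


@dataclass
class TrainingJob:
    """Hypothetical description of a training job's data demands."""
    num_gpus: int
    samples_per_sec_per_gpu: float  # samples each GPU can consume per second
    bytes_per_sample: int           # average on-disk size of one training sample
    dataset_bytes: int              # total size of the training dataset


def estimate_storage_requirements(job: TrainingJob, headroom: float = 1.2) -> dict:
    """Estimate read bandwidth (bytes/s) and capacity (bytes) so GPUs are not starved.

    `headroom` is an assumed safety factor, not a Volumez parameter.
    """
    if headroom < 1.0:
        raise ValueError("headroom must be >= 1.0")
    # Aggregate demand: every GPU pulls samples at its own consumption rate.
    bandwidth = job.num_gpus * job.samples_per_sec_per_gpu * job.bytes_per_sample
    return {
        "read_bandwidth_bytes_per_s": bandwidth * headroom,
        "capacity_bytes": job.dataset_bytes * headroom,
    }


# Hypothetical job: 8 GPUs, 200 samples/s each, 150 KB per sample, 2 TB dataset.
job = TrainingJob(num_gpus=8, samples_per_sec_per_gpu=200,
                  bytes_per_sample=150_000, dataset_bytes=2 * 10**12)
req = estimate_storage_requirements(job)
print(f"{req['read_bandwidth_bytes_per_s'] / 1e9:.2f} GB/s, "
      f"{req['capacity_bytes'] / 1e12:.1f} TB")
```

With these made-up inputs the job needs about 0.24 GB/s of sustained reads before headroom. A real provisioning tool would also have to account for IOPS, latency and data-loader behaviour, which this sketch ignores.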

Volumez says its results on the MLPerf Storage benchmark show its infrastructure performing well.
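
For context, MLPerf Storage emulates accelerators instead of running real GPUs. As we understand its rules, a result only counts if the emulated accelerators stay busy above a minimum accelerator utilization (AU) threshold (90% for some workloads). The sketch below shows that check. The function names and the timings are hypothetical and are not taken from Volumez’s submission or the benchmark’s code.

```python
def accelerator_utilization(compute_seconds: float, total_seconds: float) -> float:
    """Fraction of wall-clock time the (emulated) accelerators spent computing."""
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    return compute_seconds / total_seconds


def passes_au_threshold(compute_seconds: float, total_seconds: float,
                        threshold: float = 0.90) -> bool:
    """True if utilization meets the assumed threshold (90% here)."""
    return accelerator_utilization(compute_seconds, total_seconds) >= threshold


# Hypothetical run: 540 s of emulated compute within a 590 s epoch.
au = accelerator_utilization(540.0, 590.0)
print(f"AU = {au:.1%}, pass = {passes_au_threshold(540.0, 590.0)}")
```

Any time the accelerators spend waiting on storage counts against AU, so a slower storage system either fails the threshold or must support fewer emulated accelerators.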

Blumenthal said that AI workloads require a balance of high capacity, high bandwidth, and high performance at an affordable cost, and claimed Volumez can provide this. He said it increases GPU utilization, because the GPUs spend less time waiting for data, and so improves training and inference yields from the infrastructure.
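
A simple model shows why waiting for data caps GPU utilization. Assuming idealized prefetching, where loading the next batch fully overlaps computing the current one, each step takes as long as the slower of compute and I/O, and the GPU is busy only for the compute part. The function name and numbers below are hypothetical and illustrate the general principle, not Volumez’s own measurements.

```python
def gpu_utilization(batch_bytes: float, compute_s_per_batch: float,
                    storage_bytes_per_s: float) -> float:
    """Steady-state GPU utilization with fully overlapped (prefetched) data loading.

    Each step lasts max(compute time, I/O time); the GPU computes for only part of it.
    """
    if storage_bytes_per_s <= 0:
        raise ValueError("storage_bytes_per_s must be positive")
    io_s_per_batch = batch_bytes / storage_bytes_per_s
    step_s = max(compute_s_per_batch, io_s_per_batch)
    return compute_s_per_batch / step_s


# Hypothetical workload: 1 GB batches, 0.5 s of GPU compute per batch.
for bw in (0.5e9, 1e9, 2e9, 4e9):
    print(f"{bw / 1e9:.1f} GB/s -> {gpu_utilization(1e9, 0.5, bw):.0%}")
```

In this model utilization rises with bandwidth only until I/O time matches compute time (2 GB/s here). Beyond that point extra storage performance is wasted spend, which is one way to read the emphasis on balance rather than raw speed.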

Check out various Volumez white papers and blogs for more information.