Startup Enfabrica is developing a technology it claims will be “able to scale across every form of distributed system – across cloud, edge, enterprise, 5G/6G, and automotive infrastructure – and be adaptable to however these workloads evolve over time.”

Enfabrica was started in September 2019 by CEO Rochan Sankar and chief development officer Shrijeet Mukherjee. Sankar was previously a senior director of product management and marketing at Broadcom. Mukherjee has an engineering background at Silicon Graphics, Cisco, Cumulus Networks (acquired by Nvidia) and Google, where he was involved in networking platforms and architecture.

The company has raised an initial $50 million funding round from Sutter Hill Ventures.

The initial product is a server fabric interconnect ASIC, a massively distributed IO chip. It will be part of an integrated hardware and software stack that interconnects compute, storage and networking so that applications running in servers execute faster. This sounds like a Data Processing Unit (DPU) or SmartNIC device.

The envisaged workload is interconnecting hyperscalers’ scaled-out and hyper-distributed servers running AI and machine learning applications, and the scale of this workload is growing rapidly. In an October 2022 blog, Sankar said: “AI/ML computational problem sizes will likely continue to grow 8 to 275-fold every 24 months.”
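To put that range in more familiar terms, the short sketch below converts a growth factor quoted over 24 months into an equivalent per-year factor and a doubling time. It assumes smooth compound growth, which Sankar's blog does not state, and the function names are ours, chosen for illustration only.

```python
import math


def annualized_factor(factor: float, months: float) -> float:
    """Equivalent 12-month growth factor, assuming steady compound growth."""
    return factor ** (12.0 / months)


def doubling_months(factor: float, months: float) -> float:
    """Months needed to double, assuming steady compound growth."""
    return months / math.log2(factor)


if __name__ == "__main__":
    # The two ends of the quoted range: 8-fold and 275-fold per 24 months.
    for factor in (8, 275):
        per_year = annualized_factor(factor, 24)
        doubling = doubling_months(factor, 24)
        print(f"{factor}x per 24 months is about {per_year:.2f}x per year, "
              f"doubling roughly every {doubling:.1f} months")
```

On those assumptions, the low end of the range works out to roughly 2.8x per year (a doubling every eight months) and the high end to roughly 16.6x per year (a doubling about every three months).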

Another blog by Sankar and Alan Weckel, principal analyst at 650 Group, says: “An architectural shift is needed because the growth in large-scale AI and accelerated compute clusters – to serve evolving workloads as part of, for instance, AdSense, iCloud, YouTube, TikTok, Autopilot, Metaverse and OpenAI – creates scaling problems for multiple layers of interconnect in the datacenter.”

The need is to get data into the servers faster so that overall runtimes decrease. In Sankar’s view: “The choke point occurs at the movement of enormous amounts of data and metadata between distributed computing elements. This issue applies equally to compute nodes, memory capacity that must be accessed, movement between compute and storage and even movement between various forms of compute, whether it is CPUs, GPUs, purpose-built accelerators, field-programmable gate arrays, and the like.”

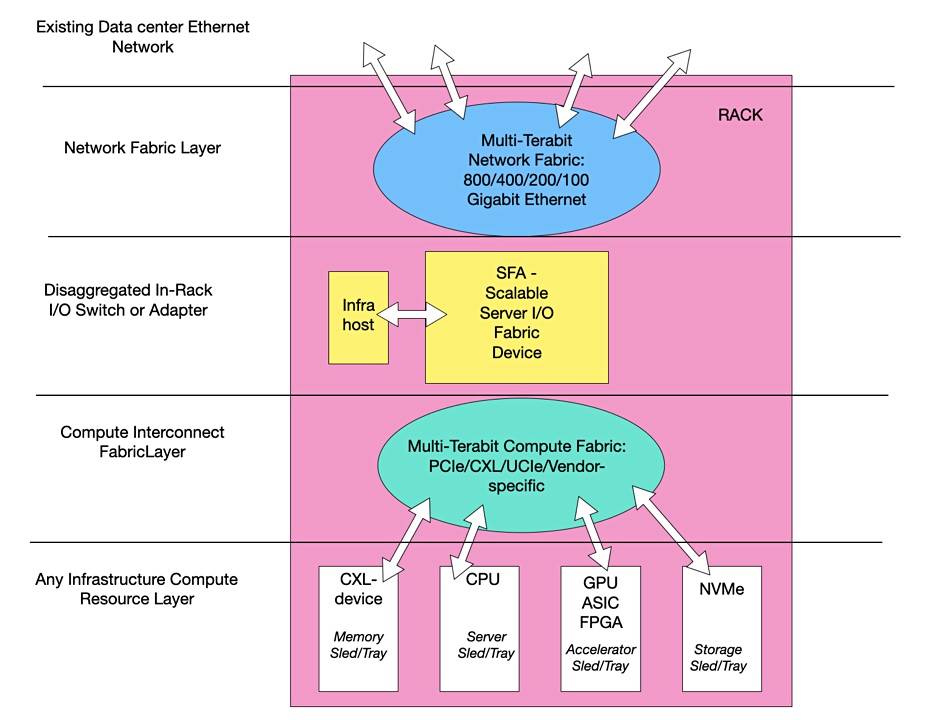

Enfabrica claims: “The key to scaling distributed compute performance and capacity rests in the ability to expand, optimize and scale the performance and capacity of the I/O that exists within servers, within every data center compute rack and across compute clusters.” It’s talking about developing a new distributed computing fabric across many, many nodes in a warehouse-scale computing system.

It says: “Innovations and technology transitions in interconnect silicon will drive substantially higher system I/O bandwidth per unit of power and per unit of cost to enable volume and generational scaling of AI and accelerated computing clusters.

“These technology transitions apply to multiple levels of data center interconnect: network, server I/O and chip-to-chip; multiple layers of the interconnect stack: physical, logical and protocol; and multiple resource types being interconnected: CPUs, GPUs, DRAM, ASIC and FPGA accelerators and flash storage.”

Its view is that “further disaggregation and refactoring of interconnect silicon will deliver greater performance and efficiencies at scale, in turn creating new system and product categories adopted by the market.”

This disaggregation refers to the “functions of network interface controllers (NICs), data processing units (DPUs), network switches, hostbus switches and I/O controllers and fabrics for memory, storage, GPUs and the like.”

Standardization on CXL, Ethernet and PCIe is needed if new technology is to be successfully adopted. Sankar and Weckel say: “Enfabrica is building interconnect silicon, software and systems designed to these same characteristics to provide best-in-class foundational fabrics – from chiplet, to server, to rack, to cluster scale computing.”

Enfabrica wants its SFA universal data-moving ASIC and fabric to replace SmartNICs and DPUs by providing a much faster interconnect fabric than any SmartNIC/DPU combination.

Comment

This startup, somewhat like the Microsoft-acquired Fungible, wants to replace existing interconnect schemes in warehouse-scale, hyper-distributed datacenters. The number of potential datacenter customers will be relatively small. Enfabrica will have to develop its product in conjunction with those potential customers to be certain its technology matches market needs and delivers the benefits it promises. We think it is some years away from general availability.