AI server developer Graphcore is setting up reference architectures with four external storage suppliers to get data into its AI processors faster.

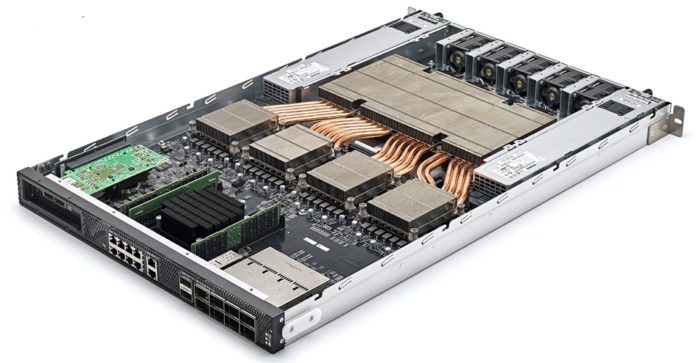

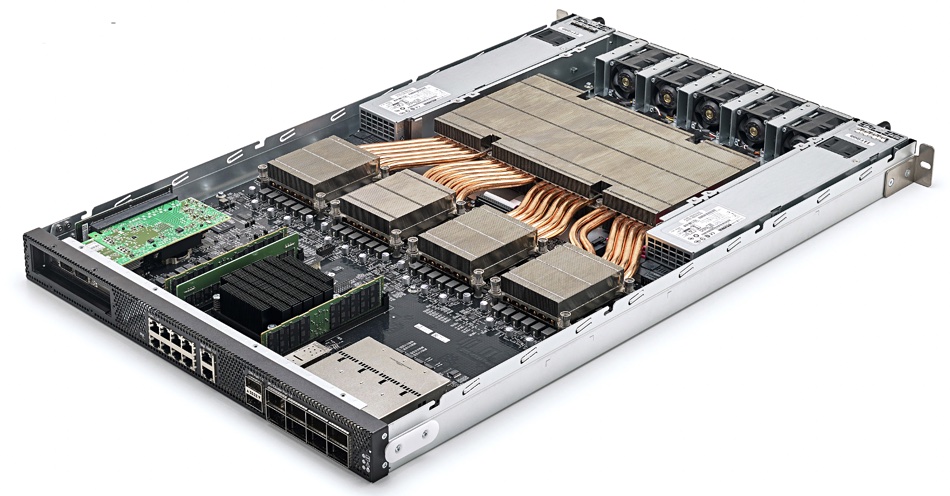

DDN, Pure Storage, VAST Data, and WekaIO will provide Graphcore-qualified datacenter reference architectures. Graphcore produces IPU (Intelligence Processing Unit) AI compute chips, which power its IPU-Machine M2000 server and IPU-POD multi-server systems. Graphcore claims these offer better price/performance than Nvidia GPUs when running AI workloads such as model training.

The M2000 is basically a compute and DRAM box and needs fuelling with data to process. It has four IPUs and a PCIe Gen-4 RoCEv2 NIC/SmartNIC Interface for host server communication. A dual 100Gbit RNIC (RDMA-aware Network Interface Card) is fitted. Stored data will be shipped to the IPU-M2000 from the host server across the Ethernet link.
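As a rough back-of-the-envelope illustration of what that link implies, the sketch below works out the theoretical ceiling for feeding one M2000. It assumes "dual 100Gbit" means two ports of 100 Gbit/s each, that both can be driven at line rate, and it ignores Ethernet and RoCE protocol overhead. The function names and the 1 TB dataset size are hypothetical, chosen only for the example, and are not Graphcore figures.

```python
# Back-of-the-envelope link arithmetic. Assumptions (not from Graphcore):
# two ports at 100 Gbit/s each, both usable at line rate, no protocol overhead.

def peak_throughput_gbytes_per_s(ports: int = 2, gbit_per_port: float = 100.0) -> float:
    """Theoretical line rate in decimal gigabytes per second."""
    return ports * gbit_per_port / 8.0  # 8 bits per byte


def min_transfer_seconds(dataset_gbytes: float,
                         ports: int = 2,
                         gbit_per_port: float = 100.0) -> float:
    """Lower bound on the time needed to move a dataset across the link."""
    return dataset_gbytes / peak_throughput_gbytes_per_s(ports, gbit_per_port)


if __name__ == "__main__":
    print(peak_throughput_gbytes_per_s())   # 25.0 GB/s ceiling
    print(min_transfer_seconds(1000.0))     # 40.0 s for a hypothetical 1 TB dataset
```

Real-world throughput will be lower than this ceiling, which is why the storage path into the box matters.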

The system is controlled by Poplar software, which has a PCIe driver. As the system supports RoCE (RDMA over Converged Ethernet), NVMe-over-Fabrics interconnection to external storage arrays is possible.

Vanilla quotes

The four announcement quotes from DDN, Pure, VAST and Weka are fairly vanilla in flavour, and none reveals much about how data will be sent to the M2000s.

“DDN and Graphcore share a common goal of driving the highest levels of innovation in artificial intelligence. DDN’s industry-leading AI storage, combined with the strength of the Graphcore IPU, brings a powerful new solution to organisations looking for a whole AI infrastructure and data management solution with outstanding performance and unlimited scalability,” said James Coomer, SVP for Products at DDN.

“Turning unstructured data into insight lives at the core of an organisation’s effort to accelerate every aspect of AI workflows and data analytic pipelines. Pure FlashBlade, the leading unified fast file and object (UFFO) storage platform was built to meet the demands of AI. It is purpose-built to blend bigger, faster analytics capabilities,” commented Michael Sotnick, VP, Global Alliances, Pure Storage. “Customers want efficient, reliable infrastructure solutions which enable data analytics for AI to deliver faster innovation and extend competitive advantage.”

“The Graphcore IPU, coupled with VAST’s Universal Storage, will help customers achieve unprecedented accelerations for large and complex machine learning models, furthering adoption of AI across the enterprise data centre,” said Jeff Denworth, Co-Founder and CMO at VAST Data. “VAST Data is breaking the tradeoff between performance and capacity by rethinking flash economics and scale, making it possible to afford flash for the entirety of a customer’s dataset. This is especially important in the new era of machine intelligence where fast and easy access to all data delivers the greatest pipeline efficiency and investment return.”

“WekaIO’s approach to modern data architecture helps equip AI users to build and scale highly performant, flexible, secure and cost-effective datacentre systems. Working with Graphcore allows us to extend next-generation technologies to our customers, keeping them at the forefront of innovation,” said Shailesh Manjrekar, Head of AI and Strategic Alliances at WekaIO.

Comment

Nvidia has its GPUDirect program to encourage storage suppliers to ship data as quickly as possible to its GPU servers. That’s accomplished by bypassing the host server’s CPU, DRAM and storage IO software stack, and setting up a direct connection between the external storage system and the GPU server.

The four storage suppliers partnering with Graphcore here all support GPUDirect. We would hope, and expect, a similar program for the Graphcore system (IPUDirect, if you like) to appear. Asked about this, a Graphcore spokesperson said: “We probably need to wait for the first reference architectures to be released to answer that. We’ve said that this will be happening throughout the remainder of 2021.”

That was unexpected. We thought Graphcore would be driving this.