Optane DIMM performance looks good for large databases and virtual machines (VMs) in memory mode and could be useful for metadata storage and write-back caching in app-direct mode. But better hypervisor support is needed and Intel’s pricing means the 128GB version will be the most-used, with larger variants just for niche apps.

So says StorPool, the Bulgarian storage startup, which also thinks the Optane DIMM business case for large VMs is unclear.

Light the DIMMs

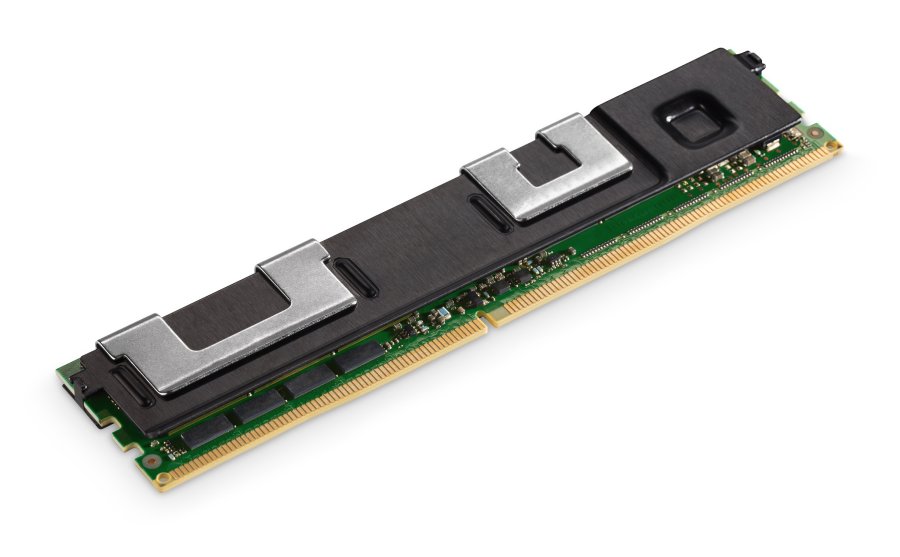

Intel this month launched Optane DIMM (DC Persistent Memory) with two main access modes:

- Memory mode, in which the Optane DIMM is paired with DRAM and the data held in the two is treated as volatile rather than persistent.

- App-direct mode, the fastest mode, in which the DIMM’s byte-addressable contents persist and the DIMM is accessed with load/store semantics.
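To illustrate what load/store access to a persistent region looks like from user space, here is a minimal sketch that memory-maps a file, stores bytes into the mapping and loads them back after remapping. It assumes an ordinary temporary file as a stand-in for a file on a DAX-mounted persistent-memory filesystem. The helper name `store_and_reload`, the file name and the 4096-byte region size are illustrative only, and production App Direct code would more likely use a C library such as PMDK.

```python
import mmap
import os
import tempfile


def store_and_reload(path, payload, size=4096):
    """Write payload through a memory mapping, then remap and read it back."""
    # Create a fixed-size backing file (stand-in for a file on a DAX mount).
    with open(path, "wb") as f:
        f.truncate(size)

    # Map the file and store bytes directly into the mapping.
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), size) as region:
            region[0:len(payload)] = payload
            region.flush()  # ask the OS to make the stores durable

    # Map it again, as a restarted process would, and load the bytes.
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), size) as region:
            return bytes(region[0:len(payload)])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        demo_path = os.path.join(tmp, "pmem-demo.bin")
        print(store_and_reload(demo_path, b"metadata-v1"))
```

The point of the sketch is that no read or write system call sits between the application and the data once the mapping exists; only the flush step asks for durability.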

Boyan Krosnov, StorPool Chief of Product, has analysed the Optane DC Persistent Memory and gen 2 Xeon SP announcement. He has sent Blocks & Files his thoughts about the Optane DIMM’s throughput, latency, CPU support and pricing, which we publish below.

Optane DIMM in Memory mode

The throughput and latency numbers actually make sense for large databases and VMs in Memory mode. The expectation is that the active set will be smaller than the DRAM capacity (typically 384GB per hypervisor, 12x 32GB DRAM DIMMs), which would allow 4x the memory size for VMs (typically 1.5TB per server, 12x 128GB Optane DIMMs).

Most VMs have a fairly small active set, so a 1:4 or 1:8 ratio between DRAM and Optane PM makes sense in practice.
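The capacity figures above can be checked with a few lines of arithmetic. This is a small sketch using only the DIMM counts and sizes quoted in the text; the function name `memory_mode_capacity` is made up for illustration.

```python
def memory_mode_capacity(dram_dimms, dram_gb, optane_dimms, optane_gb):
    """Return (DRAM GB, Optane GB, Optane-to-DRAM ratio) for one server."""
    dram_total = dram_dimms * dram_gb
    optane_total = optane_dimms * optane_gb
    return dram_total, optane_total, optane_total / dram_total


# Configuration quoted above: 12x 32GB DRAM and 12x 128GB Optane DIMMs.
dram, optane, ratio = memory_mode_capacity(12, 32, 12, 128)
print(f"DRAM {dram}GB, Optane {optane}GB, ratio 1:{ratio:g}")
# prints: DRAM 384GB, Optane 1536GB, ratio 1:4
```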

Performance of Optane PM is high enough that we are pretty confident it will work well in this mode.

App Direct mode

We are considering it for storing metadata and as a write-back cache in front of otherwise “slower” devices (SATA SSDs, QLC (4 bits/cell) NVMe SSDs). That is, write operations will go to Optane PM first and to the “slower” device later.
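The write-back idea can be sketched as a toy model. This is not StorPool's implementation: the class `WriteBackCache` and its methods are hypothetical, and plain Python dictionaries stand in for both the Optane PM tier and the slower SSD.

```python
class WriteBackCache:
    """Toy write-back cache: writes land in a fast tier and are
    copied to the slow device only when flush() is called."""

    def __init__(self, slow_device):
        self.fast = {}           # stand-in for Optane PM (dirty blocks)
        self.slow = slow_device  # stand-in for a SATA or QLC NVMe SSD

    def write(self, block, data):
        # The write is considered complete once it is in the fast tier.
        self.fast[block] = data

    def read(self, block):
        # Serve the newest copy: the fast tier first, then the slow device.
        if block in self.fast:
            return self.fast[block]
        return self.slow.get(block)

    def flush(self):
        # Destage dirty blocks to the slow device; return how many moved.
        moved = len(self.fast)
        self.slow.update(self.fast)
        self.fast.clear()
        return moved


if __name__ == "__main__":
    ssd = {}
    cache = WriteBackCache(ssd)
    cache.write(7, b"new data")
    print(ssd)            # still empty: the slow device has not been written
    print(cache.flush())  # number of blocks destaged
    print(ssd)            # block 7 is now on the slow device
```

Because the fast tier is persistent in the real design, acknowledging the write before it reaches the slower device does not put the data at risk on power loss; the dictionary here obviously does not model that property.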

For us, in this space, it competes with:

- NVDIMM-N devices (DRAM+Flash), which have the disadvantage of requiring a supercapacitor and being higher cost than DRAM

- Optane NVMe SSD devices like the P4801X, which have higher latency and lower throughput than Optane PM DIMMs.

Over time, as applications gain support for it, exposing Optane PM directly to applications in VMs might become popular. The KVM software stack is almost ready to do this in production. We don’t know what the situation is in VMware and Hyper-V.

Intel has done a fairly good job of preparing the Linux kernel for persistent memory over the last couple of years.

Compatibility

It is a pity that Intel decided that Xeon Bronze and Silver CPUs would not support Optane PM (except for the Xeon Silver 4215). We would have thought that Intel wanted wider adoption of the product and would make it widely compatible.

Pricing

By using this source and reverse-engineering Intel’s public proof point, we see that an Optane 128GB DIMM costs $577 (around $4.5/GB).
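The per-GB figure follows from simple division, sketched below. The $577 price is the one quoted above; the 12-DIMM total is derived here from the 12x 128GB configuration mentioned earlier and is not a figure from Intel or StorPool. The function name `price_per_gb` is illustrative.

```python
def price_per_gb(price_usd, capacity_gb):
    """Cost per gigabyte for a single DIMM."""
    return price_usd / capacity_gb


dimm_price = 577                      # quoted price of one 128GB Optane DIMM
per_gb = price_per_gb(dimm_price, 128)
server_cost = 12 * dimm_price         # derived: 12 DIMMs, 1.5TB per server
print(f"${per_gb:.2f}/GB, ${server_cost} for 12 DIMMs")
# prints: $4.51/GB, $6924 for 12 DIMMs
```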

A cost of $4.5-$5.5 per GB (for the 128GB DIMM) is higher than what we expected, so the business case for larger VMs is finely balanced.

At this time it is not clear if the business case will work, which means it is not clear if we’ll see wide adoption of Optane PM for memory with hypervisors.

Larger DIMMs come at a higher cost per GB. 256GB is on par with DRAM and 512GB is 2x more expensive than DRAM. This means that Intel has decided that these two SKUs, 256GB and 512GB, are meant only for niche use-cases that really require 3TB+ RAM per node at any cost, and not for the wider public and private cloud market.

Larger DIMMs also require more expensive -M and -L variants of the CPUs.

The end result of this price tiering is that 99 per cent of Optane PM deployed will be using the 128GB SKU (because it makes sense compared to DRAM).