AWS and Google Cloud virtual machine instances – and as of this month, Azure’s – have NVMe flash drive performance, but user be warned: drive contents are wiped when the VMs are killed.

NVMe-connected flash drives can be accessed substantially faster than SSDs with SAS or SATA interfaces.

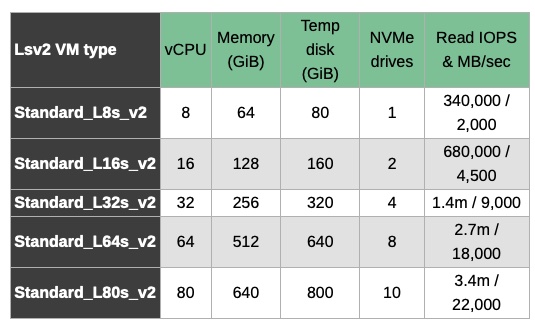

The Azure drives – which have been generally available since the beginning of February – are 1.92TB M.2 SSDs, locally attached to virtual machines, like direct-attached storage (DAS). There are five varieties of these Lsv2 VMs with 8 to 80 virtual CPUs.

The temp disk is a SCSI drive for OS paging/swap file use.

The stored data on the NVMe flash drives, laughably called disks in the Azure documentation, is wiped if you power down or de-allocate the VM, quite unlike what happens in an on-premises data centre. In other words Azure turns non-volatile storage into volatile storage – quite a trick.

That suggests you’ll need a high-availability/failover setup to prevent data loss and a strategy for dealing with your data when you turn these VMs off.

AWS and GCP

Seven AWS EC2 instance types support NVMe SSDs – C5d, I3, F1, M5d, p3dn.24xlarge, R5d, and z1d.

Their NVMe storage is ephemeral, like that of the Azure VMs. AWS said: “The data on an SSD instance volume persists only for the life of its associated instance.”

Google Cloud Platform also offers NVMe-accelerated local SSD storage, with 375GB capacity per drive and a maximum of eight drives per instance. The GCP documentation warns intrepid users: “The performance gains from local SSDs require certain trade-offs in availability, durability, and flexibility. Because of these trade-offs, local SSD storage is not automatically replicated and all data on the local SSD may be lost if the instance terminates for any reason.”

All three cloud providers treat the SSDs as scratch volumes, so if you want to keep the data on them once it has been loaded and processed, you have to copy it to persistent storage before the instance goes away.
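As a rough illustration of that preservation step, here is a minimal Python sketch that copies everything from a scratch directory to a durable one and verifies each copy by checksum. It is not taken from any provider's documentation: the function names `sha256_of` and `preserve_scratch` are hypothetical, and it assumes the durable location is simply another mounted filesystem (a persistent disk or network share, say) rather than an object store with its own API.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def preserve_scratch(scratch_dir: Path, durable_dir: Path) -> list[Path]:
    """Copy every file under scratch_dir to durable_dir, keeping the layout.

    Both directories are assumptions for illustration: scratch_dir stands in
    for a mount point on the ephemeral NVMe drive, durable_dir for storage
    that survives the VM being stopped or de-allocated.
    """
    copied = []
    for src in sorted(scratch_dir.rglob("*")):
        if not src.is_file():
            continue
        dst = durable_dir / src.relative_to(scratch_dir)
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copies contents plus timestamps/permissions
        # Re-read both files so a bad copy is caught before the source is wiped.
        if sha256_of(src) != sha256_of(dst):
            raise IOError(f"checksum mismatch for {src}")
        copied.append(dst)
    return copied
```

You would call something like `preserve_scratch(Path("/mnt/nvme0"), Path("/mnt/persistent/results"))` before shutting the VM down; both paths here are placeholders, not real defaults on any of the three clouds.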

This article was first published on The Register.