StorPool, a Bulgarian storage startup, has edged past the hyperconverged system record of 13.798 million IOPS, set by a 12-node Microsoft Storage Spaces Direct cluster accelerated with Optane DIMMs, by just 1,326 IOPS.

StorPool technology

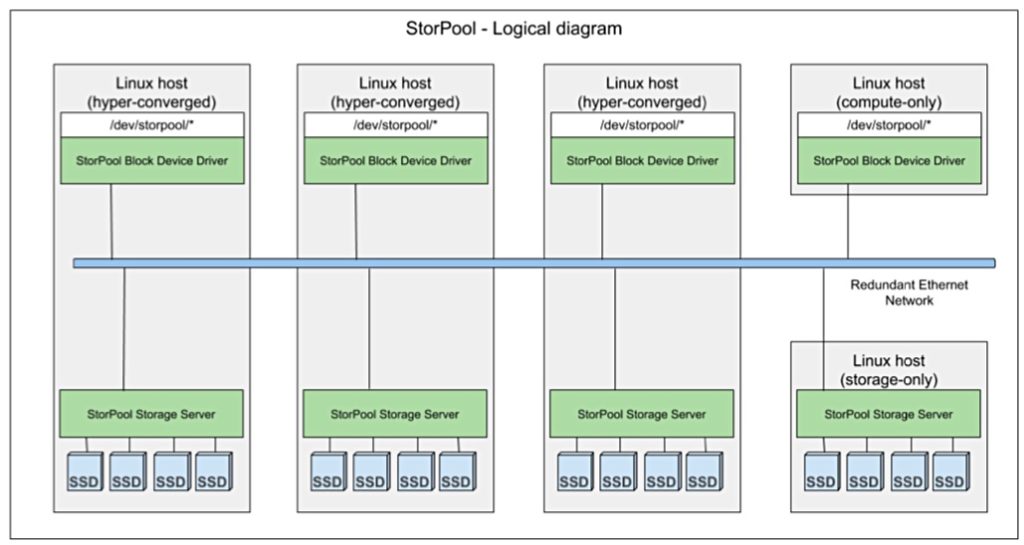

StorPool produces scale-out block storage software with a distributed shared-nothing architecture. It claims its shared external storage system is faster than using local, direct-attached SSDs. It has its own on-drive format, protocol, quorum and client software features – about which we know nothing.

StorPool is distributed storage software that is installed on each x86 server in a cluster and pools the servers' attached storage (hard disks or SSDs) into a single pool of shared block storage.

The software consists of two parts – a storage server and a storage client that are installed on each physical server (host, node). Each host can be a storage server, a storage client, or both. To storage clients, StorPool volumes appear as block devices under /dev/storpool/* and behave identically to the dedicated physical alternatives.

Data on volumes can be read and written by all clients simultaneously and consistency is guaranteed through a synchronous replication protocol. Volumes can be used by clients as they would use a local hard drive or disk array.

StorPool says its software combines the space, bandwidth and IOPS of all the cluster’s drives and leaves plenty of CPU and RAM for compute loads. This means virtual machines, applications, databases or any other compute load can run on the same server as StorPool in a hyper-converged system.

The StorPool software combines the performance and capacity of all drives attached to the servers into a single global namespace, with a claimed access latency of less than 100 µs.

The software is said to feature API access, end-to-end data integrity, self-healing capability, thin-provisioning, copy-on-write snapshots and clones, backup and disaster recovery.

Redundancy is provided by multiple copies (replicas) of the data written synchronously across the cluster. Users set the number of replication copies, with three copies recommended as standard and two copies for less critical data.
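As a rough illustration of what the replica count means for capacity, here is a minimal sketch. The 384 TB raw figure is a hypothetical example rather than a StorPool number, and the function ignores metadata and any other overhead, which is an assumption.

```python
def usable_capacity_tb(raw_tb: float, copies: int) -> float:
    """Approximate usable capacity when every block is stored `copies` times.

    Assumption: no metadata, spare-space or snapshot overhead is counted.
    """
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return raw_tb / copies


RAW_TB = 384  # hypothetical raw capacity of a cluster, in TB

three_copy = usable_capacity_tb(RAW_TB, 3)  # recommended standard
two_copy = usable_capacity_tb(RAW_TB, 2)    # less critical data

print(f"3 copies: {three_copy:.0f} TB usable")
print(f"2 copies: {two_copy:.0f} TB usable")
```

Dropping from three copies to two frees up half as much usable space again, at the cost of tolerating one fewer simultaneous failure.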

Microsoft’s super-speedy Storage Spaces Direct

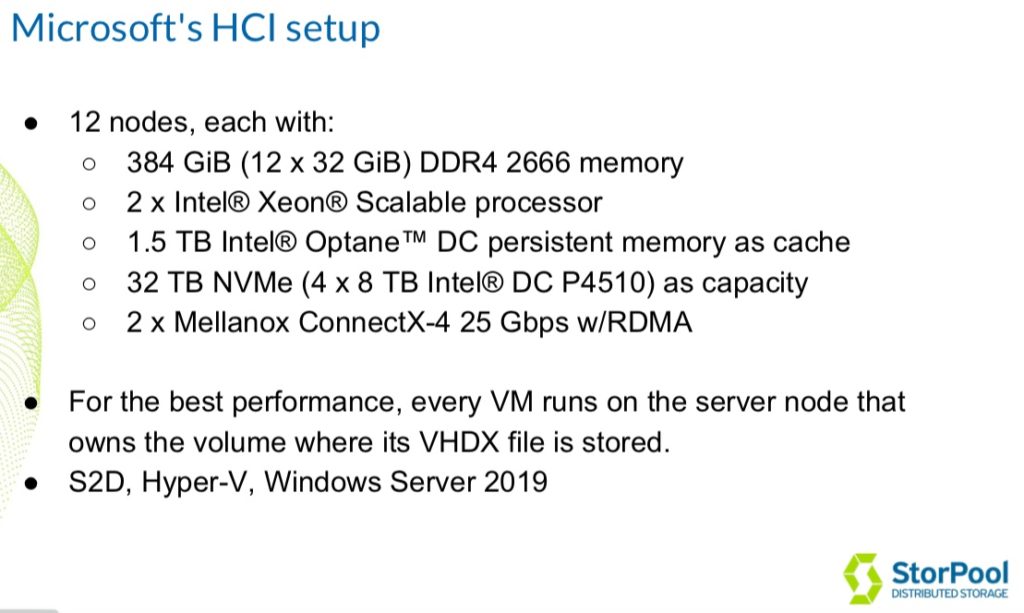

Microsoft set the HCI record in October 2018 using servers fitted with Optane DC Persistent Memory: Optane DIMMs built on 3D XPoint media. The host servers ran Hyper-V and Storage Spaces Direct (S2D) in Windows Server 2019.

A dozen clustered server nodes used 28-core Xeons, with 1.5TB of Optane DIMMs acting as a cache and 4 x 8TB Intel DC P4510 NVMe SSDs as the capacity store. They were hooked up to each other over dual 25Gbit/s Ethernet. The benchmark test used random 4 KB block-aligned IO.

We understand the CPUs were 28-core Xeon 8200s.

With 100 per cent reads, the cluster delivered 13,798,674 IOPS with a latency consistently less than 40 µs. Microsoft said: “This is an order of magnitude faster than what typical all-flash vendors proudly advertise today…this is more than double our previous industry-leading benchmark of 6.7M IOPS. What’s more, this time we needed just 12 server nodes, 25 per cent fewer than two years ago.”

With 90 per cent reads and 10 per cent writes, the cluster delivered 9,459,587 IOPS. With larger 2 MB block size and sequential IO, the cluster can read 535.86 GB/sec.

Okay, StorPool. What can your storage software do?

StorPool’s Hyper-V and Optane DIMM beater

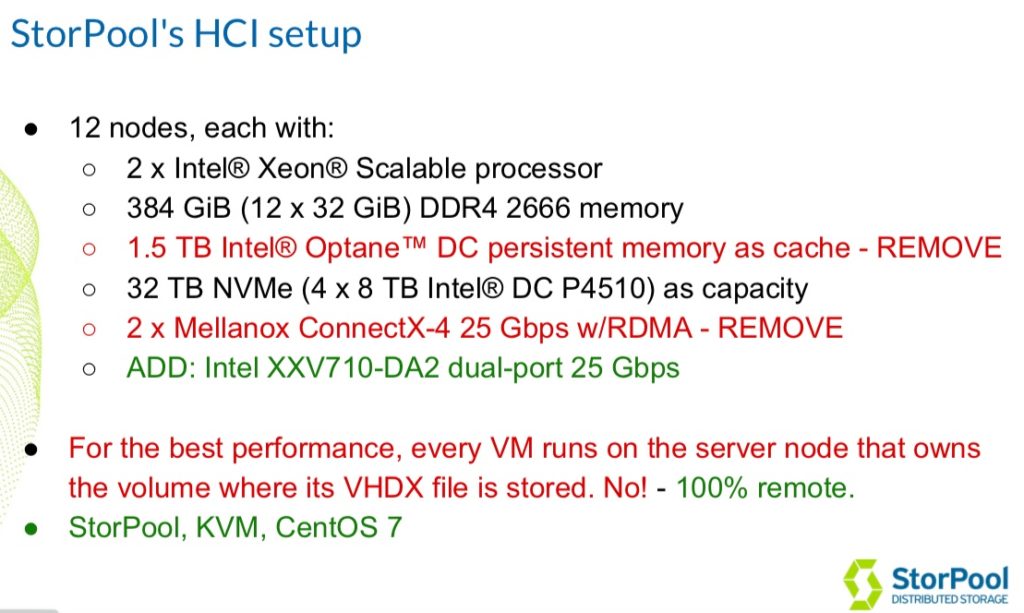

In essence, StorPool took the Microsoft config and swapped out components such as the Optane DIMM cache and the RDMA-capable Mellanox NICs.

The clustered servers accessed a virtual StorPool SAN running on CentOS 7 and the KVM hypervisor using Intel 25Gbit/s links.

In each of these 12 nodes, 4 cores were allocated to the StorPool storage server, 2 cores for the StorPool block service, and 16 cores for the load-generating virtual machines. That totals 24 cores, with actual usage at full load clocking in at about 14 cores.

This setup achieved:

- 13.8 million IOPS, 1.15 million per node, at 100 per cent random reads

- 5.5 million IOPS with a 70/30 random read/write workload and a 70 µs write latency

- 2.5 million IOPS with a 100 per cent random write workload

- 64.6 GB/sec sequential read bandwidth

- 20.8 GB/sec sequential write bandwidth
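The per-node figure in the first bullet, and the gap to Microsoft's 13,798,674 IOPS result, can be checked with simple arithmetic. The sketch below uses only numbers quoted in this article and treats StorPool's 13.8 million as exact, although it is probably a rounded headline figure.

```python
# Figures quoted in the article. StorPool's 13.8 million is assumed to be
# exact here, although it is likely a rounded headline number.
NODES = 12
STORPOOL_READ_IOPS = 13_800_000
S2D_READ_IOPS = 13_798_674

per_node_storpool = STORPOOL_READ_IOPS / NODES
per_node_s2d = S2D_READ_IOPS / NODES

# Absolute and relative gap between the two 100 per cent read results.
margin = STORPOOL_READ_IOPS - S2D_READ_IOPS
margin_pct = 100 * margin / S2D_READ_IOPS

print(f"StorPool per node: {per_node_storpool:,.0f} IOPS")
print(f"S2D per node:      {per_node_s2d:,.0f} IOPS")
print(f"Margin:            {margin:,} IOPS ({margin_pct:.4f} per cent)")
```

The gap works out to roughly a hundredth of one per cent, which is why the two results are best read as a tie.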

StorPool CEO Boyan Ivanov said: “Idle write latency is 70 µs. Idle read latency is 137 µs. Latency under the 13.8M IOPS random read was 404 µs, which is mostly queuing latency. … It was 404 µs under full load.”

Basically the StorPool design matched the Optane DIMM-accelerated S2D configuration on IOPS, producing 13.8 million vs Microsoft’s 13.798 million.

The results indicate S2D pumps out more GB/sec read bandwidth: 535.86 GB/sec vs StorPool’s 64.6 GB/sec. However, S2D reaches this number by reading from local cache rather than from the actual drives.

Ivanov said the two numbers are “not comparable because one is from local cache (cheating) and the other one (StorPool) is from real ‘pool’ drives over the network (not cheating). The StorPool number is 86 per cent of the theoretical maximum.” That maximum network bandwidth is 75 GB/sec.
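The 75 GB/sec ceiling and the 86 per cent figure can be reproduced from the link speeds. This is a sketch that assumes two 25Gbit/s links per node, as in the Microsoft setup StorPool started from, and ignores Ethernet and protocol overhead.

```python
NODES = 12
LINKS_PER_NODE = 2     # assumption: dual links per node, as in the S2D config
LINK_GBIT_S = 25       # per-link speed in Gbit/s
MEASURED_GB_S = 64.6   # StorPool sequential read bandwidth from the article

# Aggregate line rate across the cluster, converted from Gbit/s to GB/s.
max_gb_s = NODES * LINKS_PER_NODE * LINK_GBIT_S / 8

# Fraction of the raw network ceiling that the benchmark achieved.
efficiency = MEASURED_GB_S / max_gb_s

print(f"Theoretical maximum: {max_gb_s:.0f} GB/sec")
print(f"Achieved: {efficiency:.1%} of maximum")
```

Under those assumptions the measured bandwidth sits within about 14 per cent of the raw line rate, which suggests the network rather than the drives is the limiting factor.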

We can safely assume a StorPool system will cost less than the equivalent IOPS-level Storage Spaces Direct system as no Optane DIMMs are required.
