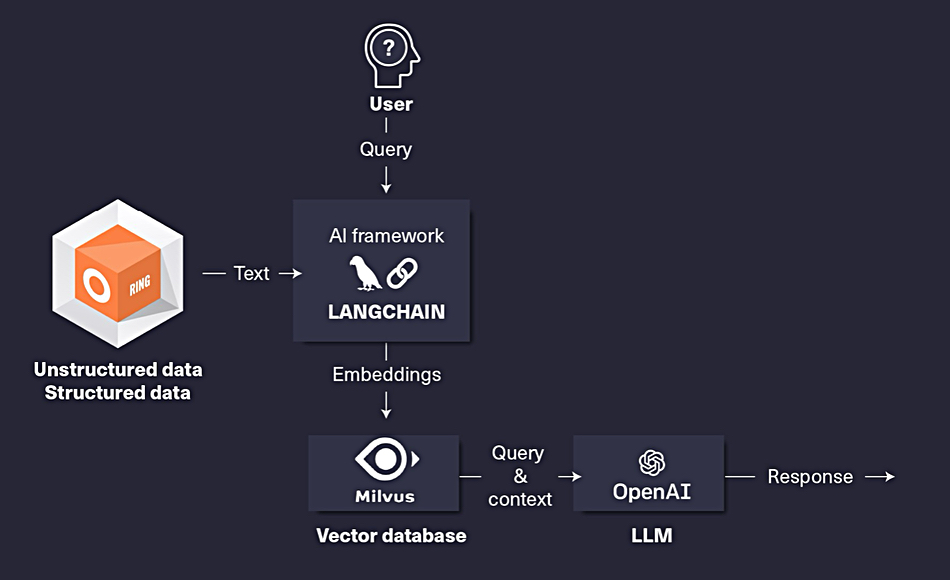

Analysis: Scality says its RING object storage can be combined with a vector database and the LangChain framework to fuel RAG workflows for AI models like GPT.

LangChain provides components for integrating external data, memory, and tools into AI workflows. The external data in this case is held in Scality’s RING object storage. A vector database stores the mathematically calculated vectors that represent the multiple aspects, aka dimensions, of tokenized chunks of unstructured data. A GenAI model such as GPT or Llama can only use a customer’s proprietary unstructured data when generating its responses if that data has been converted to vectors and made available to it.

Without such vectorization, RING-stored data is invisible to the model. The AI models are trained on static, generic data. RAG (retrieval-augmented generation) gives them access to a customer’s proprietary data, which can be static (old), current (real time), or both. This means the model can respond to requests with a better contextual understanding of the request’s background and data environment.

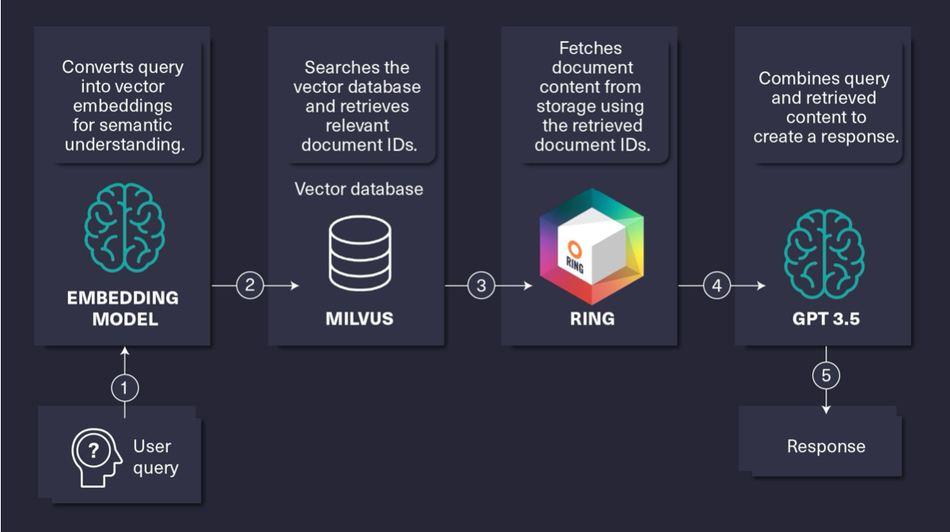

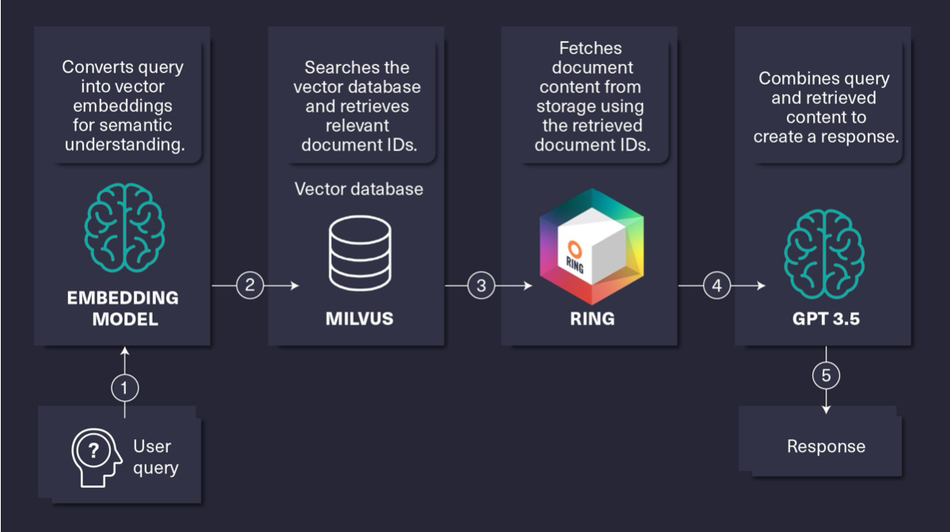

Scality can use LangChain tools to vectorize selected RING content, store it in a Milvus vector database, and make it available to AI models such as GPT-3.5, GPT-4, Llama, and others.
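To make that flow concrete, here is a minimal Python sketch of the three steps: vectorize, store, retrieve. It is not Scality’s code and makes no LangChain or Milvus calls. The names `ring_objects`, `toy_embed`, and `InMemoryVectorStore` are hypothetical stand-ins for objects read from RING, an embedding model, and the vector database respectively, and the word-count "embedding" matches shared words only, not meaning.

```python
import math
import re
from collections import Counter


def chunk(text, max_words=20):
    """Split text into pieces of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for the vector database."""

    def __init__(self):
        self.rows = []  # each row: (vector, chunk_text, source_key)

    def add(self, text, source):
        self.rows.append((toy_embed(text), text, source))

    def search(self, query, k=2):
        q = toy_embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return ranked[:k]


# Hypothetical object keys and contents, standing in for data held in object storage.
ring_objects = {
    "docs/backup-policy.txt": "Backups of the finance bucket run nightly and are retained for ninety days.",
    "docs/onboarding.txt": "New staff receive a laptop and badge on their first day in the office.",
}

# Steps 1 and 2: vectorize each chunk and store it alongside its source key.
store = InMemoryVectorStore()
for key, body in ring_objects.items():
    for piece in chunk(body):
        store.add(piece, key)

# Step 3: retrieve the closest chunk and hand it to the model as context.
question = "How long are finance backups retained?"
hits = store.search(question, k=1)
context = "\n".join(f"[{source}] {text}" for _, text, source in hits)
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
print(prompt)
```

In a real deployment the final `prompt` would be sent to the chosen model; the sketch stops at prompt assembly so that it runs without any external service.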

A Scality article explains how vector embeddings encode unstructured source data in a way that captures its meaning. An input request is itself vectorized, and the resulting set of vectors provides an abstraction of the request’s meaning. The large language model (LLM) or AI agent then searches for similar vectors in the Milvus database, which stores vectors generated from data in the Scality RING system.

Scality says: “This enables the system to retrieve content that matches the user query semantically.” An input query asking “How to manage type 2 diabetes?” could enable the model to detect and use documents on “insulin sensitivity” or “low-GI diets,” because, in semantic search terms, they are close to the set of vectors generated from the input request.
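A small Python sketch shows what "close" means here. It assumes cosine similarity as the closeness measure, which is a common choice but not one the Scality article is quoted as specifying, and the three-dimensional vectors are invented for illustration; real embedding models produce hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical hand-picked embeddings, not output from any real model.
documents = {
    "insulin sensitivity": [0.9, 0.8, 0.1],
    "low-GI diets": [0.8, 0.9, 0.2],
    "quarterly sales report": [0.1, 0.0, 0.9],
}

query_text = "How to manage type 2 diabetes?"
query_vector = [0.85, 0.85, 0.1]  # hypothetical embedding of query_text

# Rank document titles from most to least similar to the query vector.
ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(query_vector, documents[name]),
    reverse=True,
)
for name in ranked:
    print(f"{cosine_similarity(query_vector, documents[name]):.3f}  {name}")
```

The two health-related documents score close to 1.0 even though neither shares a word with the query, while the unrelated document scores far lower.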

The vector database provides search functions such as approximate nearest neighbor (ANN) search, which is based on indexing techniques. ANN looks for the approximate closest match to an input vector in very large search spaces, so large that an exhaustive check of every data point to find the absolute closest match would be impractical, taking far too long.
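The trade-off can be sketched in a few lines of Python. The example below uses random-hyperplane hashing, chosen here only because it is short; it is not a description of how Milvus works, which offers its own index types such as IVF- and HNSW-based ones. The class name `HyperplaneIndex` and all parameters are hypothetical.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def exact_nearest(query, vectors):
    """Exhaustive search: compares the query with every stored vector."""
    return min(range(len(vectors)), key=lambda i: squared_distance(query, vectors[i]))


class HyperplaneIndex:
    """Toy ANN index: vectors on the same side of every random hyperplane share a bucket."""

    def __init__(self, vectors, num_planes=4, seed=0):
        rng = random.Random(seed)
        dim = len(vectors[0])
        self.vectors = vectors
        self.planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_planes)]
        self.buckets = {}
        for i, v in enumerate(vectors):
            self.buckets.setdefault(self._key(v), []).append(i)

    def _key(self, v):
        # One boolean per hyperplane: which side of it the vector falls on.
        return tuple(dot(v, p) >= 0 for p in self.planes)

    def candidates(self, query):
        return self.buckets.get(self._key(query), [])

    def nearest(self, query):
        """Search only the query's bucket; may miss the true nearest vector."""
        cands = self.candidates(query)
        if not cands:
            return None
        return min(cands, key=lambda i: squared_distance(query, self.vectors[i]))


rng = random.Random(42)
vectors = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(1000)]
index = HyperplaneIndex(vectors)

query = list(vectors[123])  # a copy of a stored vector, so its bucket is never empty
approx = index.nearest(query)
exact = exact_nearest(query, vectors)
scanned = len(index.candidates(query))
print(f"exact={exact} approx={approx} scanned {scanned} of {len(vectors)} vectors")
```

Because the query here is a copy of a stored vector, both searches agree. For an arbitrary query, the bucket search can return a near match rather than the nearest one, which is the "approximate" in ANN; the payoff is that it scans only a fraction of the stored vectors.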

Scality prefers Milvus over other vector databases because of its query speed, scalability, direct LangChain integration, and precision across different types of datasets.

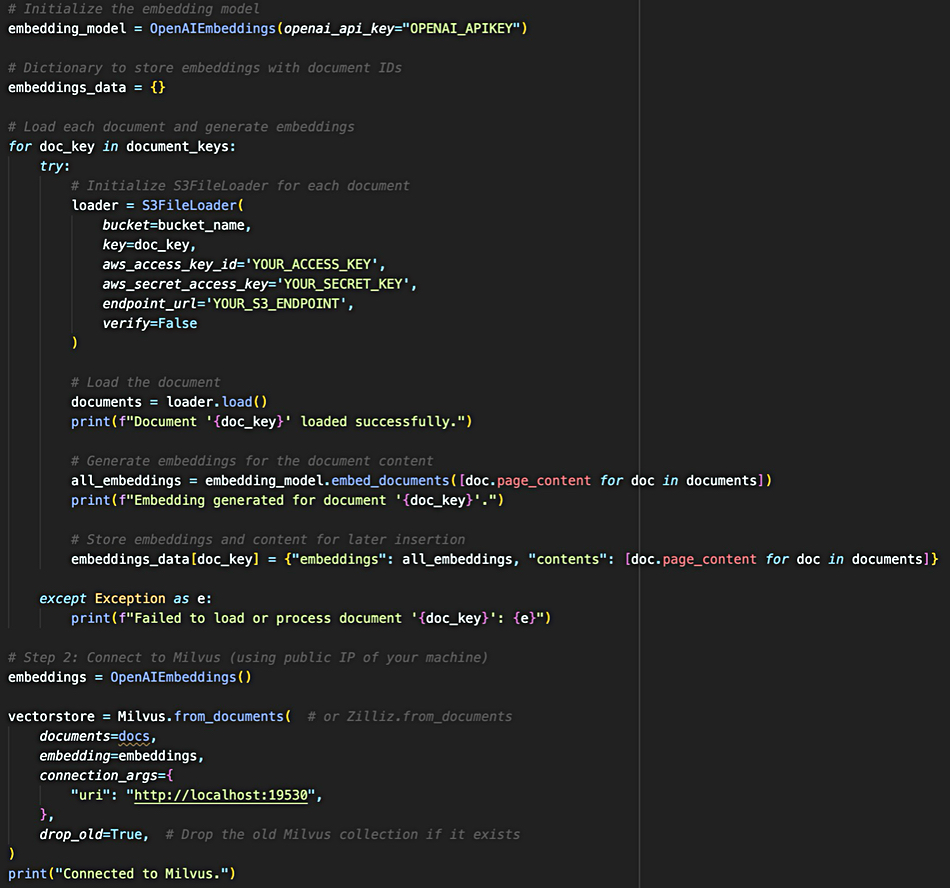

Scality has provided a code example of the RING-LangChain-Milvus-GPT-3.5 interaction.

AI inferencing and training focus increasingly on object storage in addition to, or instead of, file storage, and suppliers such as Cloudian, DDN, MinIO, and VAST Data are emphasizing their strengths as RAG source data stores and AI pipeline support functions. We might expect Scality to develop this further, with deeper and more extensive integration of the functional components.