Contextual AI is using WEKA’s Data Platform parallel filesystem software to speed AI training runs as it develops counter-hallucinatory retrieval-augmented generation (RAG) 2.0 software.

Contextual AI’s CEO and co-founder Douwe Kiela was part of the team that pioneered RAG at Facebook AI Research (FAIR) in 2020, by augmenting a language model with a retriever to access data from external sources (e.g. Wikipedia, Google, internal company documents).

A typical RAG system uses a frozen, off-the-shelf model for embeddings, a vector database for retrieval, and a black-box language model for generation, stitched together through prompting or an orchestration framework. Such systems can be unreliable, producing misleading, inaccurate and false responses (hallucinations).
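To show what "frozen" and "stitched together through prompting" mean in practice, here is a minimal sketch of that kind of pipeline. It is an illustration, not any vendor’s code: a bag-of-words counter stands in for the frozen embedding model, an in-memory list stands in for the vector database, and `embed`, `ToyVectorStore` and `build_prompt` are hypothetical names. The resulting prompt would be sent to a black-box generator that never feeds anything back to the retriever.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for a frozen, off-the-shelf embedding model:
# a bag-of-words term-count vector. Nothing here is ever trained.
def embed(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorStore:
    """In-memory stand-in for a vector database (hypothetical)."""

    def __init__(self, docs):
        self.docs = list(docs)
        self.vectors = [embed(d) for d in self.docs]  # embedded once, then frozen

    def search(self, query: str, k: int = 2):
        q = embed(query)
        ranked = sorted(range(len(self.docs)),
                        key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.docs[i] for i in ranked[:k]]

def build_prompt(question: str, passages) -> str:
    # The "stitching": retrieved text is pasted into a prompt for a black-box
    # generator. No training signal flows back to the retriever or embedder.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}\nAnswer:"

docs = [
    "RAG was introduced by researchers at Facebook AI Research in 2020.",
    "WEKA sells a parallel filesystem called the Data Platform.",
    "TriviaQA is a question answering dataset.",
]
store = ToyVectorStore(docs)
prompt = build_prompt("Who introduced RAG?", store.search("Who introduced RAG?", k=1))
print(prompt)
```

Because each piece is optimized separately (or not at all), a retrieval miss simply becomes a bad prompt, which is the failure mode Contextual says RAG 2.0 targets.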

Kiela and his team are developing RAG 2.0 to address what Contextual says are the inherent problems with the original RAG approach. RAG 2.0 optimizes the language model and the retriever end to end as a single system, we’re told. All components are pretrained, fine-tuned, and aligned using reinforcement learning from human feedback (RLHF) as one integrated system, with back-propagation through both the language model and the retriever to maximize performance.
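To make "back-propagating through the retriever" concrete, here is a minimal sketch of the marginal-likelihood objective from the original 2020 RAG work. It is not Contextual’s RAG 2.0 recipe, which is not spelled out here. Retriever scores become a softmax prior p(z|x) over passages, the generator supplies p(y|x,z), and the loss is −log Σ p(z|x)·p(y|x,z). The gradient with respect to each retriever score works out to prior minus posterior, so a passage that helps the generator gets its score pushed up. All numbers are hypothetical; in a real system the scores come from trainable query and document encoders, and gradients continue into them.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rag_loss(scores, gen_likelihoods):
    """Negative log marginal likelihood: -log sum_z p(z|x) * p(y|x,z)."""
    prior = softmax(scores)
    return -math.log(sum(p * g for p, g in zip(prior, gen_likelihoods)))

def rag_loss_grad(scores, gen_likelihoods):
    """Analytic gradient of rag_loss w.r.t. retriever scores:
    dL/ds_i = prior_i - posterior_i, where posterior_i = p(z_i | x, y)."""
    prior = softmax(scores)
    marginal = sum(p * g for p, g in zip(prior, gen_likelihoods))
    posterior = [p * g / marginal for p, g in zip(prior, gen_likelihoods)]
    return [p - q for p, q in zip(prior, posterior)]

# Hypothetical numbers: the retriever slightly prefers passage 0,
# but the generator finds the answer far more likely given passage 1.
scores = [1.0, 0.5, 0.0]
gen_likelihoods = [0.05, 0.60, 0.01]

grad = rag_loss_grad(scores, gen_likelihoods)

# Forward-difference check that the analytic gradient is correct.
eps = 1e-6
base = rag_loss(scores, gen_likelihoods)
numeric = []
for i in range(len(scores)):
    bumped = list(scores)
    bumped[i] += eps
    numeric.append((rag_loss(bumped, gen_likelihoods) - base) / eps)

# One gradient-descent step on the retriever scores.
lr = 1.0
new_scores = [s - lr * g for s, g in zip(scores, grad)]
print(grad)
print(new_scores)
```

After one step, passage 1 overtakes passage 0 in the retriever’s ranking. In a frozen pipeline that update can never happen, because the retriever never sees the generator’s loss.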

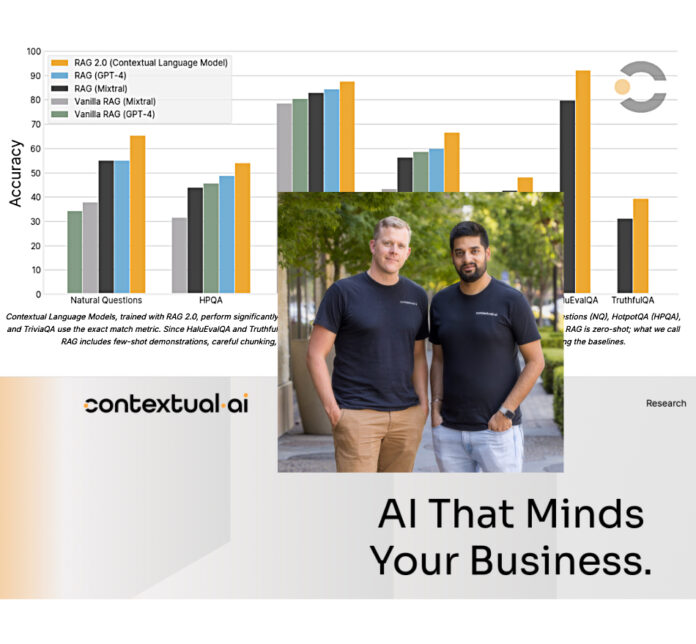

Contextual claims: “Using RAG 2.0, we’ve created our first set of Contextual Language Models (CLMs), which achieve state-of-the-art performance on a wide variety of industry benchmarks. CLMs outperform strong RAG baselines based on GPT-4 and the best open-source models by a large margin, according to our research and our customers.”

Contextual tested its CLMs against frozen RAG systems along several axes:

- Open-domain question answering: Uses the canonical Natural Questions (NQ) and TriviaQA datasets to test each model’s ability to retrieve relevant knowledge correctly and generate an accurate answer. Models are also evaluated on the HotpotQA (HPQA) dataset in the single-step retrieval setting. All three datasets are scored with the exact match (EM) metric.

- Faithfulness: HaluEvalQA and TruthfulQA measure each model’s ability to stay grounded in the retrieved evidence and avoid hallucinations.

- Freshness: Measures each RAG system’s ability to generalize to changing world knowledge, using a web search index, by reporting accuracy on the recent FreshQA benchmark.
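Exact match is stricter than it sounds: a prediction scores 1 only if it equals a gold answer after light normalization, and 0 otherwise. The sketch below uses the SQuAD-style normalization (lowercase, strip punctuation, drop "a/an/the", collapse whitespace) that is commonly applied to NQ and TriviaQA. Whether Contextual’s evaluation uses exactly this normalization is an assumption, and the example predictions are made up.

```python
import re
import string

PUNCT = set(string.punctuation)

def normalize_answer(text: str) -> str:
    """SQuAD-style normalization: lowercase, strip punctuation,
    drop English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def exact_match(prediction: str, gold_answers) -> int:
    """1 if the normalized prediction equals any normalized gold answer, else 0."""
    pred = normalize_answer(prediction)
    return int(any(pred == normalize_answer(g) for g in gold_answers))

def em_score(predictions, golds) -> float:
    """Mean exact match over a dataset, as a percentage."""
    hits = sum(exact_match(p, g) for p, g in zip(predictions, golds))
    return 100.0 * hits / len(predictions)

# Hypothetical predictions and gold answer lists.
preds = ["The Eiffel Tower.", "1889", "Paris, France"]
golds = [["Eiffel Tower"], ["1889"], ["Paris"]]
print(em_score(preds, golds))
```

"Paris, France" gets no credit against the gold answer "Paris", which is why EM rewards models that answer tersely as well as correctly.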

Each of these axes is important for building production-grade RAG systems, Contextual says, and it claims CLMs significantly outperform a variety of strong frozen RAG systems built using GPT-4 or state-of-the-art open source models such as Mixtral.

Contextual builds and runs its large language model (LLM) on Google Cloud, using a training environment of A3 VMs with NVIDIA H100 Tensor Core GPUs. It originally used Google’s Filestore, but this was neither fast enough nor able to scale as far as required, the company said.

Its Python-based AI training used a large number of tiny files, which were extremely slow to load from Google Filestore. Long model checkpointing times were also a problem: training would stop for up to 5 minutes while each checkpoint was written. Contextual needed a file store that could move data from storage to GPU compute faster, with quicker metadata handling, training-run checkpointing, and data preprocessing.
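Why a 5-minute checkpoint stall matters: if checkpoints are written synchronously, every GPU idles for the whole write. The sketch below computes the share of wall-clock time lost. The 5-minute stall comes from the text; the 60-minute checkpoint interval and the GPU count are hypothetical, and the 1.25-minute case simply applies the 4x checkpoint-time reduction reported for WEKA. The function names are illustrative, not from any library.

```python
def checkpoint_overhead(train_minutes: float, ckpt_minutes: float) -> float:
    """Fraction of wall-clock time the GPUs sit idle when training
    blocks for ckpt_minutes after every train_minutes of compute."""
    return ckpt_minutes / (train_minutes + ckpt_minutes)

def idle_gpu_hours(num_gpus: int, wall_hours: float,
                   train_minutes: float, ckpt_minutes: float) -> float:
    """GPU-hours spent waiting on checkpoint writes over a run of wall_hours."""
    return num_gpus * wall_hours * checkpoint_overhead(train_minutes, ckpt_minutes)

# Hypothetical schedule: a blocking checkpoint after every 60 minutes of training.
slow = checkpoint_overhead(60, 5.0)   # 5-minute stall, as described above
fast = checkpoint_overhead(60, 1.25)  # the same stall cut by 4x
print(f"{slow:.1%} vs {fast:.1%}")
```

Under these assumptions roughly 7.7 percent of paid GPU time goes to waiting on storage, falling to about 2 percent with the faster checkpoints. More frequent checkpoints, or larger ones, make the gap wider.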

With its consultancy partner Accenture, Contextual evaluated alternative filesystems from DDN (Lustre), IBM (Storage Scale) and WEKA (Matrix, now the Data Platform), as well as local SSDs on the GPU servers, comparing them in a proof-of-concept environment on Google Cloud.

We’re told WEKA outperformed Google Filestore with 212 percent higher aggregate read bandwidth, 497 percent higher aggregate write bandwidth, 212 percent higher aggregate read IOPS, 282 percent higher aggregate write IOPS and 70 percent lower average latency, and cut model checkpoint times by a factor of four. None of the other contenders came close to this.
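Percentage claims like these are easy to misread, so here is a small sketch converting them into multiples of the Filestore baseline. It reads "X percent higher" literally as new = old × (1 + X/100); if the figures were meant as ratios (212 percent of Filestore, i.e. 2.12x), the multipliers would each be one lower. Which reading was intended is an assumption. The function names are illustrative.

```python
def pct_higher_to_ratio(pct: float) -> float:
    """'X percent higher' read literally: new = old * (1 + X / 100)."""
    return 1 + pct / 100

def pct_lower_to_ratio(pct: float) -> float:
    """'X percent lower': new = old * (1 - X / 100)."""
    return 1 - pct / 100

# Figures as reported for WEKA versus Google Filestore.
claims = {
    "read bandwidth": pct_higher_to_ratio(212),
    "write bandwidth": pct_higher_to_ratio(497),
    "read IOPS": pct_higher_to_ratio(212),
    "write IOPS": pct_higher_to_ratio(282),
    "average latency": pct_lower_to_ratio(70),
}
for metric, ratio in claims.items():
    print(f"{metric}: {ratio:.2f}x Filestore")
```

On the literal reading, write bandwidth is close to 6x Filestore and average latency is 0.3x. The 4x checkpoint figure is already a multiple: a 5-minute checkpoint would drop to about 1.25 minutes.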

Contextual uses WEKA to manage its 100 TB of AI training data sets. The WEKA software runs on a 10-node cluster of GCE C2-std-16 VMs, providing a high-performance data layer built on the NVMe devices attached to each VM, with a total of 50 TB of flash capacity. The single WEKA namespace extends to an additional 50 TB of Google Cloud Storage object storage, providing a data lake to retain training data sets and the final production models.

Contextual says its overall cloud storage costs have dropped by 38 percent per TB and that its developers are more productive. The company, founded in 2023, raised $80 million in a Series A round earlier this month.

There’s more detail about RAG 2.0 and CLMs in a Contextual AI blog post, which we’ve drawn on for this article.