An outfit called Astronomer has landed $213 million in funding to continue developing its data operations engineering software, which helps users get cleaner data assembled and organized faster for analytic runs. It has also bought data lineage company Datakin.

The software is based on Apache Airflow – code originally developed by Airbnb to automate what it called its data engineering pipelines. These fed data gathered from the operation of its global host accommodation booking business to analytics routines, so Airbnb could track its business performance. The data came from various sources in various formats and needed collecting, filtering, assembling, cleaning, and organizing into sets that could be sent for analysis according to schedules. In effect, this is an Extract, Transform and Load (ETL) process with multiple data pipelines which needed creating, developing, maintaining and scheduling.
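
The collect, filter, clean, and organize steps described above can be sketched as a tiny ETL run. This is only an illustrative sketch, not Airbnb's or Airflow's code: the sample data, field names, and the in-memory `warehouse` list are all hypothetical stand-ins.

```python
import csv
import io
import json

# Hypothetical raw inputs in two formats; the field names are illustrative only.
CSV_BOOKINGS = "booking_id,nights,price\n1,2,100.0\n2,,80.0\n3,1,55.5\n"
JSON_BOOKINGS = '[{"booking_id": 4, "nights": 3, "price": 120.0}]'


def extract():
    """Collect rows from both sources as plain dictionaries."""
    rows = list(csv.DictReader(io.StringIO(CSV_BOOKINGS)))
    rows.extend(json.loads(JSON_BOOKINGS))
    return rows


def transform(rows):
    """Drop incomplete rows, normalize the types, and sort by booking id."""
    cleaned = []
    for row in rows:
        if row["nights"] in ("", None):
            continue  # filter out rows with a missing value
        cleaned.append({
            "booking_id": int(row["booking_id"]),
            "nights": int(row["nights"]),
            "price": float(row["price"]),
        })
    return sorted(cleaned, key=lambda r: r["booking_id"])


def load(rows, warehouse):
    """Append cleaned rows to an in-memory stand-in for a warehouse table."""
    warehouse.extend(rows)
    return len(warehouse)


warehouse = []
loaded = load(transform(extract()), warehouse)
print(f"loaded {loaded} rows")
```

In a real deployment each of these functions would be a separately scheduled pipeline step rather than one script.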

Astronomer CEO Joe Otto blogged: “Astronomer delivers a modern data orchestration platform, powered by Apache Airflow, that empowers data teams to build, run, and observe data pipelines. … Airflow’s comprehensive orchestration capabilities and flexible, Python-based pipelines-as-code model [have] rapidly made it the most popular open-source orchestrator available.”

Airbnb used Airflow for:

- Data warehousing – cleansing, organizing, quality checking, and publishing data into its data warehouse;

- Growth analytics – computing metrics around guest and host engagement and growth accounting;

- Email targeting – applying rules to target users via email campaigns;

- Sessionization – computing clickstream and time spent datasets;

- Search – computing search ranking-related metrics;

- Data infrastructure maintenance – folder cleanup, applying data retention policies, etc.

Analytics needs are evolving all the time, and many companies have multiple systems that need co-ordinating and integrating.

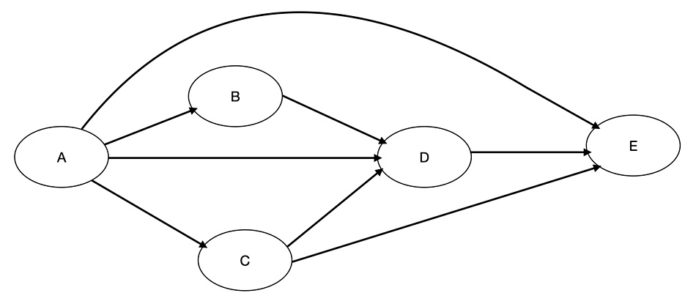

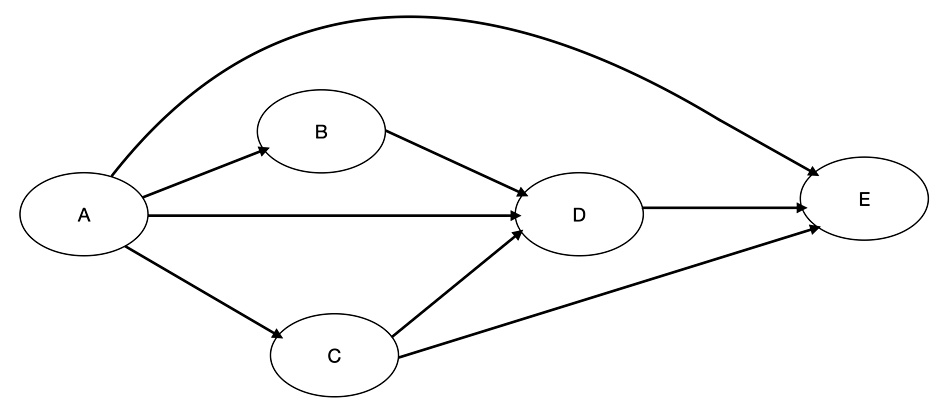

Getting raw data from its storage on disk or flash drives requires custom ETL procedures, aka data pipelines, which are crafted by data engineers using directed acyclic graphs, or DAGs, to visualize what’s going on. These link procedures or processing steps in a sequence, with interdependencies between the steps.

The diagram shows an example DAG, with B or C procedures dependent on the type of data in A and the desired end-point analytic run. If the D step is left out of the sequence then the overall procedure will fail. DAGs enable pipelines to be planned and instantiated. Airbnb created Airflow, using Python (procedure-as-code), to automate its own data pipeline engineering, and donated it to the Apache Software Foundation.
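
To make the DAG idea concrete, here is a minimal sketch that uses Python's standard-library `graphlib` rather than Airflow itself. The step names A to D follow the example above; the comments on what each step does are assumptions, and for simplicity the sketch runs both the B and C branches instead of choosing one.

```python
from graphlib import TopologicalSorter

# Each key is a step; its value is the set of steps it depends on.
# What each step does is assumed for illustration only.
PIPELINE = {
    "A": set(),       # e.g. pull the raw data
    "B": {"A"},       # one processing branch
    "C": {"A"},       # an alternative processing branch
    "D": {"B", "C"},  # final step that feeds the analytic run
}


def run_order(dag):
    """Return one valid execution order for the steps.

    Raises graphlib.CycleError if the dependencies contain a cycle,
    which is why the graph has to be acyclic.
    """
    return list(TopologicalSorter(dag).static_order())


order = run_order(PIPELINE)
print(order)
```

A scheduler such as Airflow does essentially this ordering job, then adds scheduling, retries, and monitoring on top.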

There are more than eight million Airflow downloads a month and hundreds of thousands of data engineering teams across a whole swath of businesses use it – such as Credit Suisse, Condé Nast, Electronic Arts, and Rappi. It’s almost the industry-standard way of doing DataOps.

Astronomer effectively took over Airflow’s development and maintains it as an open source product while also shipping its own Astro product – a data engineering orchestration platform that enables data engineers, data scientists, and data analysts to build, run, and observe pipelines-as-code.

There is a need to discover, observe and verify the lineage – the sequence – of the data involved, and the Datakin acquisition gives Astronomer an end-to-end method to achieve this, using lineage metadata. Datakin’s website promises: “With lineage, organizations can observe and contextualize disparate pipelines, stitching everything together into a single navigable map.”
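
As a rough illustration of what lineage metadata makes possible, the sketch below traces every upstream dataset that a given dataset was derived from. It does not use Datakin's or Astronomer's actual API: the record layout, job names, and dataset names are all hypothetical.

```python
# Hypothetical lineage records: each job lists the datasets it reads and writes.
LINEAGE = [
    {"job": "ingest_clicks", "inputs": ["raw.clicks"],
     "outputs": ["staging.clicks"]},
    {"job": "sessionize", "inputs": ["staging.clicks"],
     "outputs": ["analytics.sessions"]},
    {"job": "engagement", "inputs": ["analytics.sessions", "staging.users"],
     "outputs": ["analytics.engagement"]},
]


def upstream(dataset, records):
    """Return the set of all datasets that `dataset` was derived from."""
    # Map each output dataset to the inputs of the job that produced it.
    produced_from = {}
    for rec in records:
        for out in rec["outputs"]:
            produced_from.setdefault(out, set()).update(rec["inputs"])

    # Walk the map backwards from the requested dataset.
    seen = set()
    stack = [dataset]
    while stack:
        current = stack.pop()
        for parent in produced_from.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


print(sorted(upstream("analytics.engagement", LINEAGE)))
```

Stitching records from separate pipelines into one map like this is what lets a team see which raw sources sit behind a given report.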

Astronomer has now gained $283 million in total funding. The latest round was led by Insight Partners, with participation from Meritech Capital, Salesforce Ventures, J.P. Morgan, K5 Global, Sutter Hill Ventures, Venrock and Sierra Ventures.