Intel has announced two more IPUs and a SmartNIC at an Architecture Day event, as it disses the DPU idea and doubles down on the IPU term.

These Intel IPUs (Infrastructure Processing Units) handle IT infrastructure-related processing for cloud service providers. The tenant CPUs, by contrast, run client applications and directly generate revenue. Intel's first publicly revealed IPU, code-named Big Springs Canyon, is FPGA-based and features a Xeon-D CPU and Ethernet support.

Patricia Kummrow, a VP in Intel's Network and Edge Group and GM of its Ethernet Products Group, has blogged about the three new products, writing: "CPU cycles spent on … infrastructure overhead do not generate revenue for the Cloud Service Providers (CSP)." Intel's argument is that offloading infrastructure overhead from the (predominantly x86) tenant CPUs to specialised IPUs gets that work done more efficiently, and that the revenue from the extra client work the freed-up tenant CPUs can then do exceeds the cost of the IPUs.

IPU canyoneering

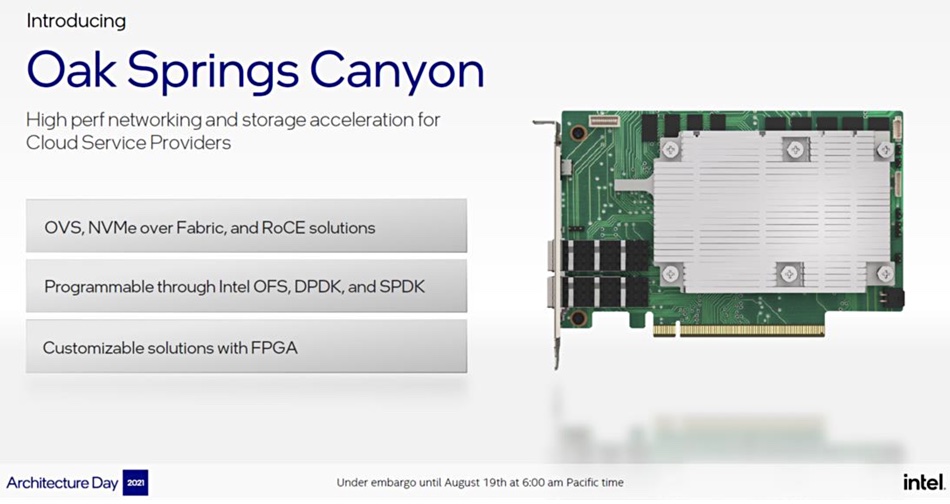

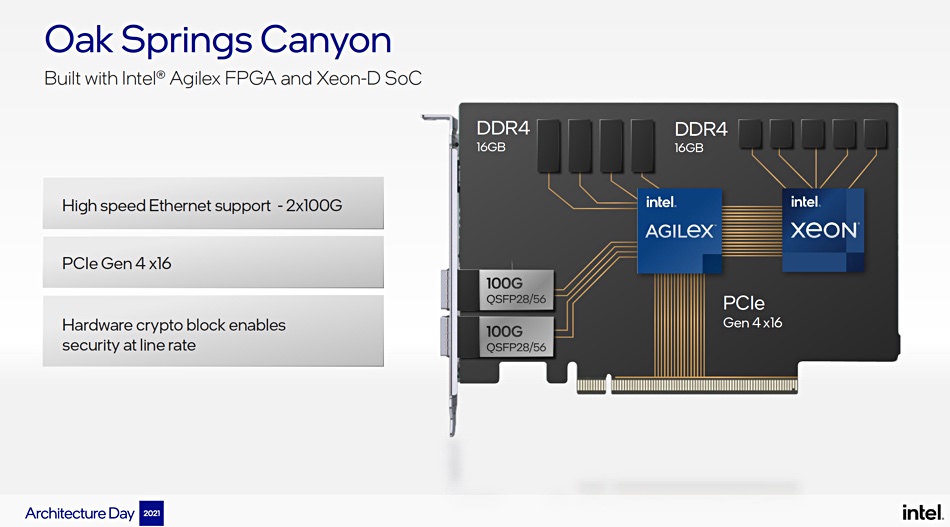

The Big Springs Canyon successor is called Oak Springs Canyon. It is based on Intel's Agilex FPGA and a Xeon-D SoC, and Intel claims Agilex is the best FPGA available in terms of performance, power consumption, and workload efficiency. Oak Springs Canyon supports PCIe Gen-4 and 2 x 100Gbit Ethernet networking, and developers can use Intel's Open FPGA Stack hardware and software to build applications for it.

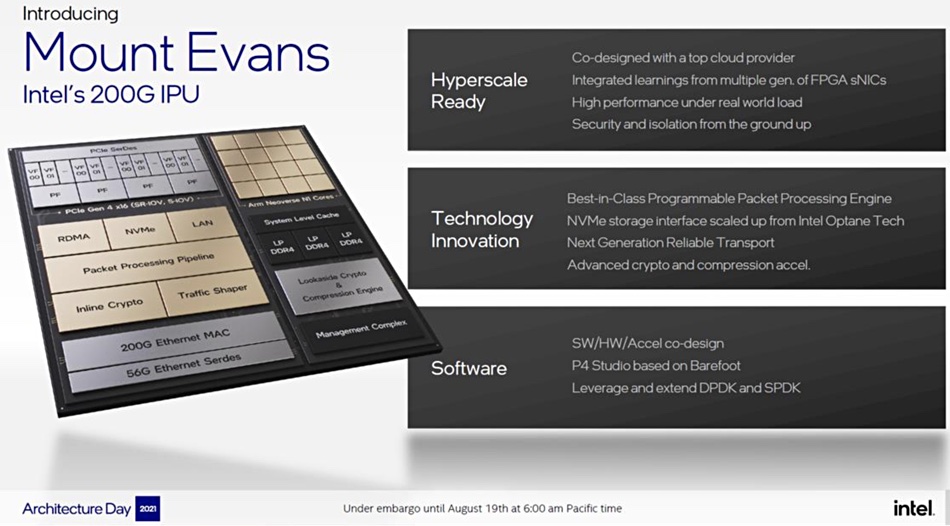

Intel's second new IPU is called Mount Evans and uses an ASIC instead of an FPGA. In general, FPGAs are multi-purpose, programmable and flexible, whereas ASICs are fixed-function silicon designed for one specific application. An ASIC should therefore be faster than an FPGA running the same application.

The Mount Evans ASIC is designed for packet processing, which is also a function of the Fungible and Pensando DPU chips. Intel claims Mount Evans, co-designed with a CSP, is "a best-in-class packet-processing engine" but doesn't say what that class is. Mount Evans has up to 16 Arm Neoverse N1 cores and can support up to four host Xeons with 200Gbit/sec full duplex. Intel says it supports vSwitch offload, firewalls, and virtual routing, and has headroom for future applications.

Intel says it “emulates NVMe devices at very high IOPS rates by leveraging and extending the Intel Optane NVMe controller”. That sounds, well, impressive, but it’s hard to know what it means in practice. A slide note says it is scaled up from the Optane implementation — which suggests it goes faster.

Both Oak Springs Canyon and Mount Evans run the same Intel IPU operating system.

SmartNIC

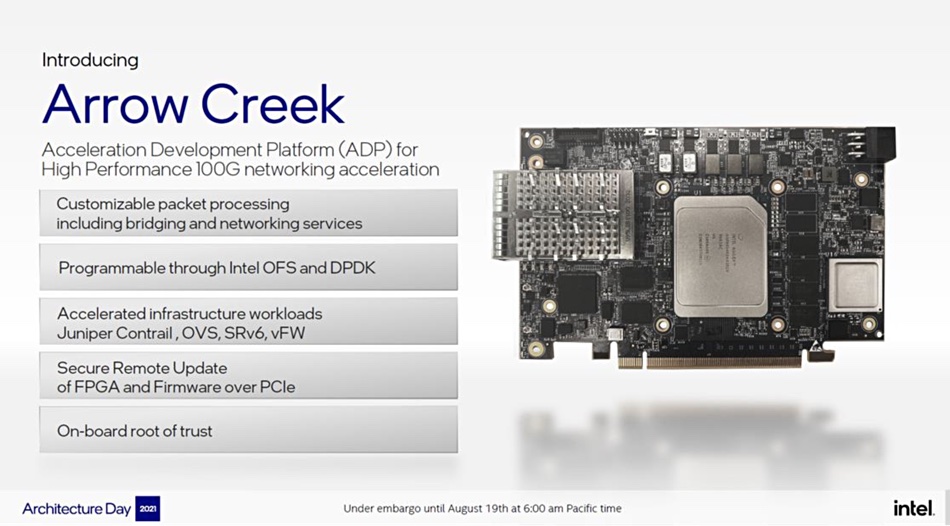

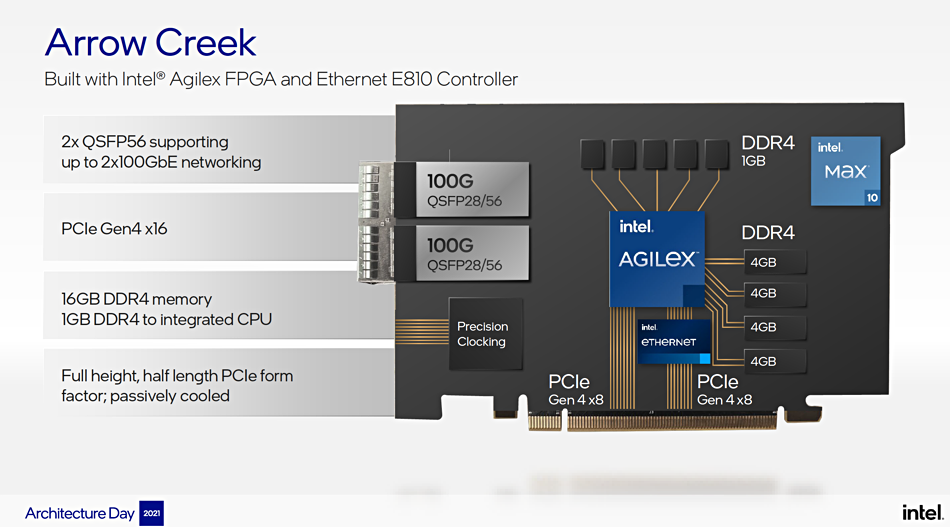

The N6000, code-named Arrow Creek, is called an Acceleration Development Platform. It is also based on the Agilex FPGA, plus Intel's E810 Ethernet controller, and builds on Intel's FPGA Programmable Acceleration Card N3000 (Intel FPGA PAC N3000), which is deployed in Communications Service Providers' (CoSPs) data centres.

The N6000, which supports PCIe Gen-4, is designed to help telco providers accelerate Juniper Contrail, OVS, SRv6 and similar workloads. It and the IPUs work with, naturally, Xeon CPUs.

Comment

Intel has not supplied any performance numbers enabling comparisons to be made between its three IPUs, nor between them and DPUs from Fungible and Pensando. N6000 performance numbers are not publicly available either.

Intel says the DPU term is nonsensical because a Xeon CPU processes data too, so "data processing unit" does not distinguish the offload chip from the host. In its view, it's far better to call these accelerators IPUs because that names what they accelerate: infrastructure-oriented processing.