Big beast Intel has come crashing out of the semiconductor jungle into the Data Processing Unit gang’s watering hole, saying it’s the biggest DPU shipper of all and that it got there first anyway.

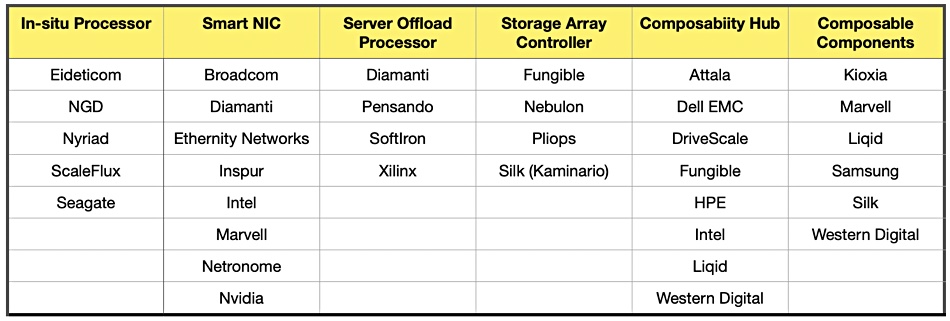

Data Processing Units (DPUs) are programmable processors dedicated to running data centre infrastructure operations such as security, storage and networking. They offload that work from host servers so those servers can run more applications. DPUs are built from specialised CPU chips, FPGAs and/or ASICs by suppliers such as Fungible, Nvidia, Pensando and Nebulon. It’s a fast-developing field, and device types include in-situ processors, SmartNICs, server offload cards, storage processors, composability hubs and components.

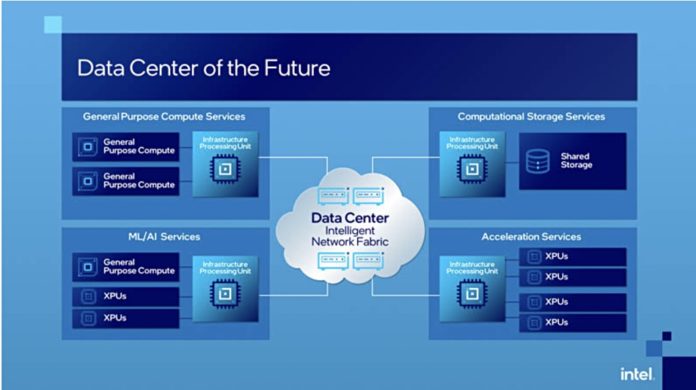

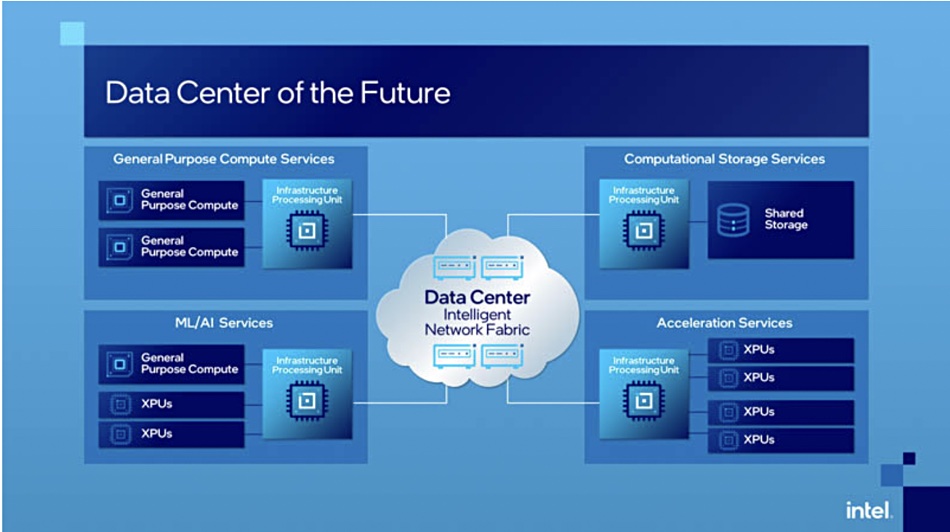

Navin Shenoy, Intel EVP and GM of its Data Platforms Group, said at the Six Five Summit that Intel has already developed and sold what it calls Infrastructure Processing Units (IPUs), which is what everyone else calls DPUs. Intel designed them to help hyperscale customers reduce server CPU overhead and free up cycles so applications run faster.

Guido Appenzeller, Data Platforms Group CTO at Intel, said in a statement: “The IPU is a new category of technologies and is one of the strategic pillars of our cloud strategy. It expands upon our SmartNIC capabilities and is designed to address the complexity and inefficiencies in the modern data centre.”

An IPU, he said, enables customers to balance processing and storage, and Intel’s system has dedicated functionality to accelerate applications built on a microservice-based architecture. This matters because, according to Intel, inter-microservice communication can consume between 22 and 80 per cent of a host server CPU’s cycles.
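To put Intel’s range in perspective, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 64-core host server (our assumption, not an Intel figure) and simply multiplies the core count by the low and high overhead fractions Intel quoted. It says nothing about how much of that overhead an IPU would actually reclaim.

```python
# Back-of-the-envelope illustration of Intel's quoted 22-80 per cent range of
# host CPU cycles spent on inter-microservice communication.
# ASSUMPTION: the 64-core server size is hypothetical, not an Intel figure.


def cores_consumed(total_cores: int, overhead_fraction: float) -> float:
    """Return how many cores' worth of cycles a given overhead fraction uses."""
    if not 0.0 <= overhead_fraction <= 1.0:
        raise ValueError("overhead_fraction must be between 0 and 1")
    return total_cores * overhead_fraction


TOTAL_CORES = 64  # hypothetical server size

low = cores_consumed(TOTAL_CORES, 0.22)   # Intel's low-end figure
high = cores_consumed(TOTAL_CORES, 0.80)  # Intel's high-end figure
print(f"{low:.1f} to {high:.1f} of {TOTAL_CORES} cores")
```

On that assumed server, the quoted range works out to roughly 14 to 51 cores’ worth of cycles.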

Intel’s IPU can:

- Accelerate infrastructure functions, including storage virtualisation, network virtualisation and security with dedicated protocol accelerators;

- Free up CPU cores by shifting storage and network virtualisation functions that were previously done in software on the CPU to the IPU;

- Improve data centre utilisation by allowing for flexible workload placement (run code on the IPU);

- Enable cloud service providers to customise infrastructure function deployments at the speed of software (composability).

Patty Kummrow, Intel’s VP in the Data Platforms Group and GM of the Ethernet Products Group, offered this thought: “As a result of Intel’s collaboration with a majority of hyperscalers, Intel is already the volume leader in the IPU market with our Xeon-D, FPGA and Ethernet components. The first of Intel’s FPGA-based IPU platforms are deployed at multiple cloud service providers and our first ASIC IPU is under test.”

The Xeon-D is a system-on-chip (SoC) microserver Xeon CPU, not a full-scale server Xeon CPU. Fungible and Pensando have developed their own custom DPU processor designs instead of relying on FPGAs or ASICs.

There are no standard DPU benchmarks, so comparing performance across suppliers will be difficult.

Intel says it will produce additional FPGA-based IPU platforms and dedicated ASICs in the future. They will be accompanied by a software foundation to help customers develop cloud orchestration software.