Analysis: Dell has said it will add a parallel file system capability to its scale-out clustered PowerScale storage arrays. How might this be done?

At its recent Dell Technologies World 2024 event in Las Vegas, Dell’s Varun Chhabra, SVP for ISG Marketing, told his audience: “We’re excited to announce Project Lightning which will deliver a parallel file system for unstructured data in PowerScale. Project Lightning will bring extreme performance and unparalleled efficiency with near line rate efficiency – 97 percent network utilisation and the ability to saturate 1,000s of data hungry GPUs.”

Update: VAST Data nconnect multi-path note added. 28 May 2024.

PowerScale hardware, a cluster of up to 256 nodes, is operated by the OneFS file system software, which does not provide parallel access. When files are read or written, the activity is carried out on the nodes on which the files are stored. With a parallel file system, large files or sets of small files are striped across the nodes, with each node answering a read or write request in parallel with the others to accelerate the IO.
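To make the distinction concrete, here is a toy Python model contrasting a request served through one node with the same request striped across nodes. The 4-node cluster, the node names, the 1 MiB stripe unit and the round-robin placement are illustrative assumptions, not OneFS or PowerScale internals.

```python
"""Toy model contrasting one-node access with striped access.

Illustrative assumptions only (not OneFS or PowerScale internals):
a hypothetical 4-node cluster, a 1 MiB stripe unit and round-robin placement.
"""

STRIPE_UNIT = 1 << 20                          # 1 MiB, hypothetical stripe size
NODES = ["node0", "node1", "node2", "node3"]   # hypothetical node names


def nodes_single_server(offset: int, length: int, home: str = "node0") -> list[str]:
    """Non-parallel access: the whole request is served through one node.

    `offset` is unused; it is kept so both functions share a signature.
    """
    return [home] if length > 0 else []


def nodes_striped(offset: int, length: int) -> list[str]:
    """Striped access: the node holding each stripe unit the range touches."""
    if length <= 0:
        return []
    first = offset // STRIPE_UNIT
    last = (offset + length - 1) // STRIPE_UNIT
    return [NODES[i % len(NODES)] for i in range(first, last + 1)]


if __name__ == "__main__":
    size = 8 * STRIPE_UNIT
    print(nodes_single_server(0, size))  # ['node0']
    print(nodes_striped(0, size))        # node0..node3, twice over
```

With striping, an 8 MiB read becomes eight stripe-unit requests spread over all four nodes, so each node serves a quarter of the data and the transfers can proceed concurrently.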

Dell has several options for adding parallel file system functionality to PowerScale, none of which are easy.

- Retrofit a parallel filesystem capability to OneFS,

- Write a parallel filesystem from the beginning,

- Use an open-source parallel filesystem such as Lustre, BeeGFS or DAOS,

- Buy a parallel filesystem product or supplier,

- Resell or OEM another supplier’s filesystem product.

We have talked confidentially to industry sources and experts about this to learn about Dell’s options. Its first decision will be whether to add parallel file system capability to OneFS, and retain compatibility with its installed base of thousands of OneFS PowerScale/Isilon systems, or to start from scratch with a separate parallel file system-based OS. The latter would be a cleaner way to do it but has significant problems associated with it.

If it resells an existing supplier’s parallel filesystem, striking a deal with, say, VDURA (formerly Panasas) for PanFS or IBM for Storage Scale, then its installed base faces a migration issue and Dell risks ceding account control to the parallel filesystem supplier. OneFS customers would have to obtain new PowerScale boxes and transfer files to them, giving themselves a new silo to manage and operate. This is perhaps a little messy.

If Dell buys a parallel file system product or supplier, it would have the same problems, in addition to spending a lot of money.

Were Dell to use open-source parallel filesystem software, it wouldn’t have to spend that cash, but it would have to set up an internal support and development shop. It would also cede account control to its customers, who could walk away from Dell to another supplier of that software or do it themselves. And it would be adding another silo to their data centers and losing compatibility with OneFS.

Account control and a clean software environment would be advantages for Dell writing its own parallel filesystem software. But this would be an enormous and lengthy undertaking. One source suggested it would take up to 5 years to produce a solid, reliable and high-performing system.

Even with its own parallel file system software, Dell would still be adding another silo to its customers’ storage environments and would lose OneFS compatibility, giving its customer base migration and management problems and adding the risk that customers would not want to adopt a brand-new and unproven piece of software.

All these points bring us to option number one in our list, adding a parallel filesystem capability to OneFS.

Coders could set about gutting the heart of OneFS’ file organization and access software and replacing it, or somehow layer a parallel access capability onto the existing code. The former choice would be like a brain transplant on a human, taking so much effort that writing a clean operating system from scratch would be easier.

Fortunately, OneFS supports NFS v4.1 and its session trunking feature, with that support added in October 2021. OneFS v9.3 and later also support NFS v4.2.
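Session trunking lets a single NFS v4.1 session use several network connections at once. The toy Python model below only shows the idea of spreading one session’s requests over multiple connections. The class name, the example addresses and the round-robin policy are hypothetical; a real client binds connections to a session through the protocol itself (for example with the BIND_CONN_TO_SESSION operation) and chooses connections by its own rules.

```python
"""Toy model of NFS v4.1 session trunking: one session, several connections.

Illustrative only: the class, addresses and round-robin policy are hypothetical.
No network traffic is generated; we just record which connection carries
each request.
"""
from itertools import cycle


class TrunkedSession:
    """One NFS session whose requests are spread over several connections."""

    def __init__(self, session_id: str, connections: list[str]) -> None:
        if not connections:
            raise ValueError("a session needs at least one connection")
        self.session_id = session_id
        self.connections = list(connections)
        self.sent = {c: 0 for c in self.connections}  # requests per connection
        self._rr = cycle(self.connections)            # round-robin iterator

    def send(self, request: str) -> str:
        """Pick the next connection for `request` and return its address."""
        conn = next(self._rr)
        self.sent[conn] += 1
        return conn


if __name__ == "__main__":
    # Hypothetical server interfaces; 2049 is the standard NFS port.
    s = TrunkedSession("sess-1", ["10.0.0.1:2049", "10.0.0.2:2049"])
    for i in range(6):
        s.send(f"READ #{i}")
    print(s.sent)  # {'10.0.0.1:2049': 3, '10.0.0.2:2049': 3}
```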

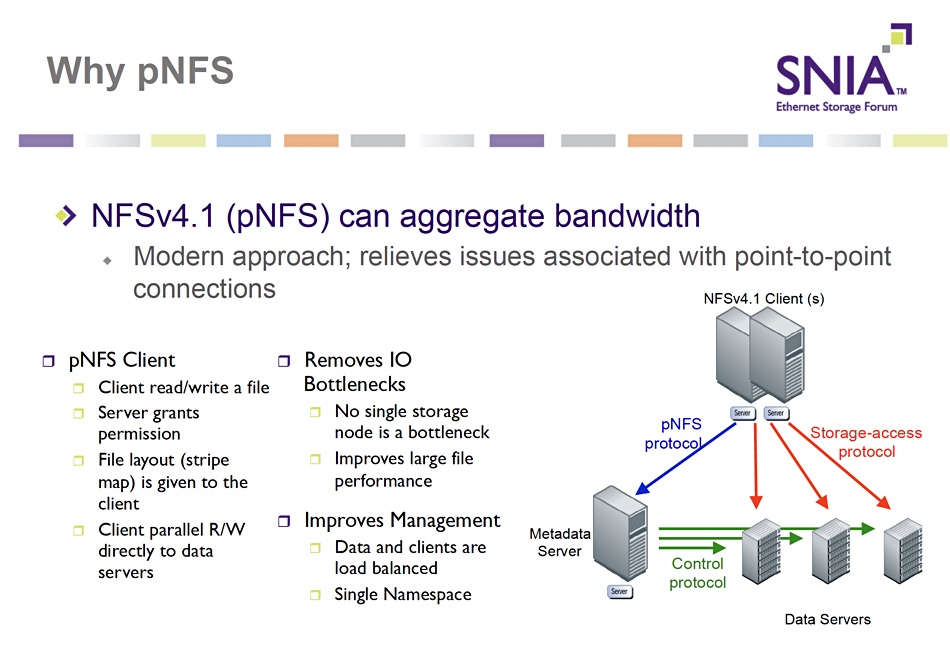

The NFS v4.2 client supports parallel NFS (pNFS) through the Flexible File (Flex Files) layout. The pNFS functionality depends on session support, which became available in NFS 4.1. According to an SNIA slide deck, the Flex Files pNFS layout provides “flexible, per-file striping patterns and simple device information suitable for aggregating standalone NFS servers into a centrally managed pNFS cluster.”
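To illustrate what “per-file striping patterns” means, here is a small Python data structure loosely modeled on the Flexible File layout defined in RFC 8435, where a layout carries a stripe unit and one or more mirrors, each listing the data servers the file is striped across. The class and field names are simplified and hypothetical, and the real layout carries much more (file handles, credentials, flags and so on).

```python
"""A per-file layout loosely modeled on the pNFS Flexible File layout (RFC 8435).

Names are simplified and hypothetical; this is a sketch, not the real XDR types.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Mirror:
    data_servers: tuple[str, ...]   # servers this copy of the file is striped across


@dataclass(frozen=True)
class FlexLayout:
    stripe_unit: int                # bytes per stripe unit
    mirrors: tuple[Mirror, ...]     # each mirror holds a full copy of the file

    def servers_for(self, offset: int) -> list[str]:
        """Data server holding `offset` in each mirror (a read may use any one)."""
        unit = offset // self.stripe_unit
        return [m.data_servers[unit % len(m.data_servers)] for m in self.mirrors]


# Two files on the same cluster with different patterns (all names hypothetical).
big_file = FlexLayout(
    stripe_unit=1 << 20,                                 # 1 MiB stripes
    mirrors=(Mirror(("ds1", "ds2", "ds3", "ds4")),),     # striped over four servers
)
small_file = FlexLayout(
    stripe_unit=64 << 10,                                # 64 KiB stripes
    mirrors=(Mirror(("ds2",)), Mirror(("ds3",))),        # unstriped, two-way mirrored
)

if __name__ == "__main__":
    print(big_file.servers_for(5 << 20))    # ['ds2']
    print(small_file.servers_for(0))        # ['ds2', 'ds3']
```

Because the layout is handed out per file, a large training file and a small config file on the same cluster can use entirely different striping and mirroring choices.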

Effectively, existing OneFS cluster nodes read and write NFS files as standalone servers. In the pNFS world the cluster nodes gain an added metadata server. Clients talk to this server using the pNFS protocol. The metadata server talks to the cluster nodes, now acting as data servers, using a control protocol, while the data servers and the clients send and receive data using a storage access protocol as before.

The metadata server gives the client a file layout, or stripe map, which lets the client read from and write to the data servers (cluster nodes) in parallel. The next slide in the SNIA deck makes a key point about the pNFS metadata server:

It need not be a separate physical or virtual server and can in fact run as separate code in a data server.

This means that if OneFS were given a pNFS makeover, one of the existing cluster nodes could run the metadata server function, giving us a road to an in-place upgrade of an existing OneFS cluster.
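The metadata-server arrangement described above can be sketched as a small in-memory Python model: a metadata service hands the client a stripe map, then the client reads the stripes from the data servers concurrently and reassembles them. Nothing here speaks the real NFS or pNFS protocols. The class names, the tiny 4-byte stripe unit, round-robin placement and having the metadata service perform the writes are all simplifying assumptions. In line with the SNIA point, the metadata service is just extra code that could run on an existing node rather than a separate box.

```python
"""Toy pNFS-style read: a metadata service hands out a stripe map, then the
client reads stripes from the data servers in parallel.

Hypothetical and in-memory only: no NFS protocol is spoken.
"""
from concurrent.futures import ThreadPoolExecutor

STRIPE = 4  # bytes per stripe unit, tiny so the example is easy to follow


class DataServer:
    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: dict[tuple[str, int], bytes] = {}  # (path, stripe no.) -> data

    def read(self, path: str, stripe_no: int) -> bytes:
        return self.blocks[(path, stripe_no)]


class MetadataService:
    """Conceptually runs as extra code on an existing node (say node0);
    nothing below depends on where it runs."""

    def __init__(self, servers: list[DataServer]) -> None:
        self.servers = servers
        self.sizes: dict[str, int] = {}

    def write(self, path: str, data: bytes) -> None:
        # Simplification: place stripe n on server n mod N (round-robin).
        for i in range(0, len(data), STRIPE):
            n = i // STRIPE
            self.servers[n % len(self.servers)].blocks[(path, n)] = data[i:i + STRIPE]
        self.sizes[path] = len(data)

    def layout(self, path: str) -> list[tuple[int, DataServer]]:
        """Return the stripe map: which server holds each stripe of `path`."""
        count = -(-self.sizes[path] // STRIPE)  # ceiling division
        return [(n, self.servers[n % len(self.servers)]) for n in range(count)]


def parallel_read(mds: MetadataService, path: str) -> bytes:
    stripe_map = mds.layout(path)                        # one metadata round trip
    with ThreadPoolExecutor(max_workers=len(mds.servers)) as pool:
        # Each stripe is fetched directly from the data server holding it.
        parts = pool.map(lambda entry: entry[1].read(path, entry[0]), stripe_map)
        return b"".join(parts)                           # map() preserves order


if __name__ == "__main__":
    nodes = [DataServer(f"node{i}") for i in range(3)]
    mds = MetadataService(nodes)
    mds.write("/data/train.bin", b"abcdefghijklmnopqrstuvwxyz")  # hypothetical path
    print(parallel_read(mds, "/data/train.bin"))  # b'abcdefghijklmnopqrstuvwxyz'
```

Because `ThreadPoolExecutor.map` yields results in input order, the stripes are rejoined correctly even if the data servers answer out of order.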

It is interesting that we can see a parallel [sorry] to what Hammerspace is doing with its data orchestration software. This is based on pNFS and uses Anvil metadata service nodes.

Also, as we wrote in November 2023: “The Hammerspace parallel file system client is an NFS4.2 client built into Linux, using Hammerspace’s FlexFiles (Flexible File Layout) software in the Linux distribution.”

This approach of activating the nascent pNFS capability already supported in principle by OneFS would enable Dell to carry its OneFS franchise forward into the high-performance parallel access world needed for AI training. It wouldn’t be a true parallel file system from the ground up but it would enable a profound increase in OneFS file transfer bandwidth. Existing installed PowerScale clusters would become parallel access clusters.

Such a performance increase would immediately differentiate PowerScale from competitors Pure Storage (FlashBlade) and Qumulo, match NetApp and give Dell anti-Hammerspace ammunition. It would also provide a counter to VAST Data’s overtures to Dell’s customers and hold the fort against encroachment by VAST Data, as OEM’d by HPE, and by IBM’s Storage Scale. The advantages of this approach just keep mounting up.

Note that any supplier supporting the NFS standards could do the same, meaning in particular NetApp, which already has, and Qumulo. The latter’s CTO, Kiran Bhageshpur, said: “Qumulo is focused exclusively on a standards based system, end-to-end. Commodity platforms; on-premises, at the edge and in public clouds; no special hardware, standards based interconnectivity (Ethernet and TCP/IP) and standards based access protocols (NFS, SMB, FTP, S3, etc.)”

“We know, we at Qumulo can outperform any real-world workload in a standards based environment without requiring specialized hardware, specialized interconnectivity (Infiniband) or specialized clients (“parallel file systems”) and at a much better total cost of ownership for our customers.”

Qumulo’s Core software added NFS 4.1 support at the end of 2021 but has not yet added 4.2 support. NetApp’s ONTAP supports both NFS 4.1 and 4.2. Qumulo could add parallel access by adding NFS 4.2 support and using pNFS. NetApp has already done this.

NetApp principal engineer Srikanth Kaligotla blogged about pNFS and AI/ML workloads: “In NetApp ONTAP, technologies such as scale-out NAS, FlexGroup, NFS over RDMA, pNFS, and session trunking – among several others – can become the backbone in supporting AI/ML workloads.”

ONTAP users can enable pNFS operations now. We could say NetApp is showing a path into parallelism for OneFS.

All in all, our bet is that Dell is adding a pNFS capability to OneFS, so that it becomes, as we might say, “pOneFS”.

Bootnote

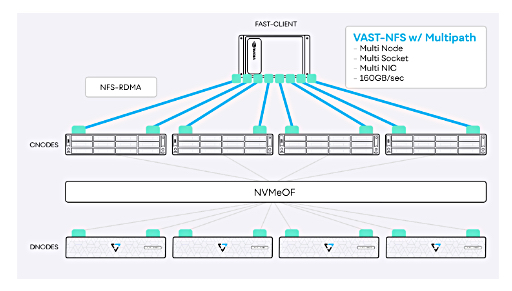

An October 2021 blog by VAST Data’s Subramanian Kartik about VAST Data’s NFS enhancements describes nconnect multi-path, which allows multiple connections between the NFS client and the storage. It says: “The performance we are able to achieve for a single mount far exceeds any other approach. We have seen up to 162 GiB/s (174 GB/s) on systems with 8×200 Gb NICs with GPU Direct Storage, with a single client DGX-A100 System. Additionally, as all the C-nodes participate to deliver IOPS, an entry level 4 C-node system has been shown to deliver 240K 4K IOPS to a single client/single mount point/single client 100 Gb NIC system. We are designed to scale this performance linearly as more C-nodes participate.”
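The figures in that quote can be sanity-checked with a little arithmetic. The Python sketch below converts 162 GiB/s to decimal GB/s, compares it with the raw line rate of eight 200 Gb NICs, and estimates the bandwidth behind 240K IOPS, assuming “4K” means 4 KiB (4,096-byte) IOs. Raw line rate ignores protocol overhead, so the percentage is only a rough utilization figure.

```python
"""Back-of-envelope check of the VAST Data figures quoted above."""

GIB = 2**30   # bytes in a gibibyte
GB = 10**9    # bytes in a (decimal) gigabyte

nic_count, nic_gbps = 8, 200                     # 8 x 200 Gb NICs, per the quote
line_rate_GBps = nic_count * nic_gbps / 8        # 1,600 Gb/s = 200 GB/s raw
measured_GBps = 162 * GIB / GB                   # 162 GiB/s in decimal GB/s
utilization = measured_GBps / line_rate_GBps     # share of raw line rate

# Assumption: "4K" IOPS means 4,096-byte IOs.
iops_Gbps = 240_000 * 4096 * 8 / 1e9             # bits per second, in Gb/s

print(f"{measured_GBps:.0f} GB/s")               # 174 GB/s
print(f"{utilization:.0%} of raw line rate")     # 87% of raw line rate
print(f"{iops_Gbps:.2f} Gb/s for 240K 4 KiB IOPS")  # 7.86 Gb/s for 240K 4 KiB IOPS
```

So the quoted 174 GB/s corresponds to roughly 87 percent of the raw NIC line rate, while the 240K IOPS result is a small-IO figure that uses under a tenth of a single 100 Gb NIC.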

It is a highly informative blog about adding parallelism to a VAST Data NFS system and well worth a read.