Enterprise data services supplier CTERA envisions a future where office workers create their own virtual employee assistants – domain-specific AI agents that work with curated private datasets using the Model Context Protocol (MCP).

CTERA emerged from the enterprise file sync and share era and has evolved into an intelligent data services platform. The company offers a software-defined, multi-protocol system with a globally addressable namespace spanning both file and object storage. Data remains accessible in real-time from any location, over any protocol, while being stored economically in object storage – whether Amazon S3, Azure Blob Storage, or on-premises S3-compatible targets. The platform extends access through intelligent caching, ensuring frequently accessed data stays local.

After introducing its data intelligence platform a year ago, CTERA is now revealing more details about its AI-focused vision.

Outlining the fundamental issue plaguing enterprise AI initiatives, CTO Aron Brand told an IT Press Tour audience: “We’ve seen that there’s a naive approach right now when companies or organizations try to train AI on their private data, and they’re saying … we’ll just point our AI tools at all our data. We’ll vectorize everything. We’ll add a RAG layer, and we’ll plug in GPT 5, the smartest model that we can find, and then we sit back and watch the magic happen.”

The reality is far less magical. “Gen AI is very good at producing very confident mistakes when you provide low quality data,” Brand warned. Poor data quality doesn’t improve through AI processing – it just produces more convincing errors.

VP for alliances Saimon Michelson set the scene by saying three steps are needed to deal with data growth, security and AI.

- Location intelligence – understand what data data exists across the enterprise through a globally-addressable namespace.

- Metadata intelligence – Index it and create and organize metadata to create a secure data lake

- Enterprise intelligence – analyze and process data using AI and other tools.

He likens this to three waves: “Wave number one is we’ve created a library. A library of all the content that we have everywhere. Wave number two is, we’ve sorted this library, based on the indexes, and we can find things. Wave number three is actually opening the books and seeing what is written in that book, what is reliable and how it can better help our business.”

“That’s really how we envision this kind of road that inevitably, every organization is going to take.”

CTERA recognizes data lives everywhere: distributed on-prem data centers, edge sites, and the public cloud regions, and with remote office workers. “The only model we can think of is hybrid,” Michelson said. “That’s why we’re seeing hybrid becoming kind of a bigger share in the in the market. We’re not here saying that cloud is decreasing or that on-premise is decreasing. I think the whole pie is becoming bigger and bigger.”

It is inevitable, according to CTERA, that enterprises and organizations will need a unified data fabric, with a natural language interface. Michelson said: “There’s all these large language models that can help me.

“It’s natural language. We can talk to it like we talked to another human. That’s the Holy Grail.”

Brand says the enterprise intelligence area is a new focus for CTERA: “something that we believe will become an essential part of any storage solution in the next few years. This is what we call enterprise intelligence, or turning your data into an asset by actually peering into the content.”

He identified the root causes of AI project failures: bad data trapped in separated silos with poor access controls and inadequate security. The solution requires two elements. First, AI-assisted tools for data classification and metadata enrichment to impose order on messy data. Second, an unstructured data lake that consolidates information from disparate locations into a unified format, converting PDFs, Word files, and other documents into something Gen AI can understand and store in an indexed data lake.

“[We’re] providing new tools that allow you to classify your data, to enrich the metadata, and to split your data into meaningful data sets that are high quality in order to feed your AI Agent,” Branded revealed. “You need the tools to help you to curate [your data].”

Traditional approaches copy enterprise data into Gen AI tools – a security nightmare. CTERA takes a different approach.

“The key element here, I believe, is not avoiding the copying of the data from enterprise sensitive systems into the Gen AI tools, but having the Gen AI tools have the ability to connect to the source data, without a copy, while at the same time enforcing the permissions on the source file system. So if you have files and documents that have permissions or ACLs, as long as the users that are querying this AI system, the system is fully aware of their credentials and their group membership and it’s enforcing the same permissions, then we’re relatively safe.”

“I think this is currently the holy grail of the industry; having an intelligent data fabric that consolidates all the data from different parts of the organization, from different data repositories of different data formats, bringing this all into a data fabric that allows Gen AI to have access to high quality, curated and safe.”

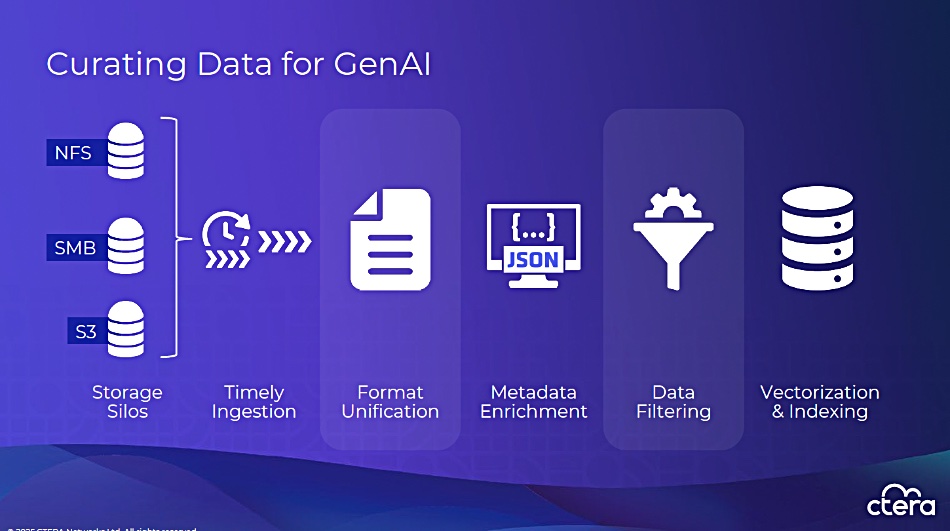

CTERA is providing a pipeline that transforms messy, uncurated information into something that is Gen AI-ready.

The system ingests documents from storage silos (NFS exports, SMB shares, S3 buckets, OneDrive, SharePoint) and extracts textual information into a unified markdown format. This includes transcribing videos and audio, converting images to text, and performing OCR.

AI analyzes content to create a semantic layer. For contracts, this might extract signatories, dates, amounts, and other conceptual information, enabling more accurate searches and analytics queries, such as how many contracts exceed $1 million? The system scans for sensitive data – personal information, health records, confidential material – and removes risky content. After curation, data is vectorized and inserted into searchable indexes (vector or full-text). Users can retrieve data relevant to their specific inquiries and insights.

The end game is what CTERA refers to as “virutal employees” – AI agents that serve as subject matter experts on specific business aspects.

“I don’t want this necessarily to work in a chat interface. Maybe if the users are in Microsoft Teams. I want this virtual employee to join our meetings. I want this virtual employee to be in my chapter,” Brand said. “I want them to be in my corporate data systems, everywhere that I am. I want these virtual employees to be to be there and help me do my work more efficiently, and allow me as an employee to focus on things that are more interesting and more creative.”

Some years ago CTERA added a notification service to its global file system: “which is really the foundation for this third wave. It’s a publish, subscribe system that allows various consumers to register to data that is in the global file system and to understand when a file is created, when a file is deleted, renamed, and to be able to respond to this.”

“And now as part of our CTERA data intelligence offering, which is an optional add on to our platform, we’re providing another client for the notification service, which is creating a permission-aware repository of curated enterprise knowledge,

“You can think of it as a system that includes all the steps that I mentioned before, the data curation, the consolidation of the data, to unify formatting of the data, the guardrails for security and the ability to deploy agents or virtual employees. All are provided within the system.”

The intent is to provide trustworthy answers to any question an employee might have on their area of the business, with the ability for it to be highly secure. Brand claims many systems provide RAG and provide search capabilities, but few do so in a way that is suitable for the most sensitive enterprise, or military or federal or government companies. Underpinning this system is MCP, or model context protocol.

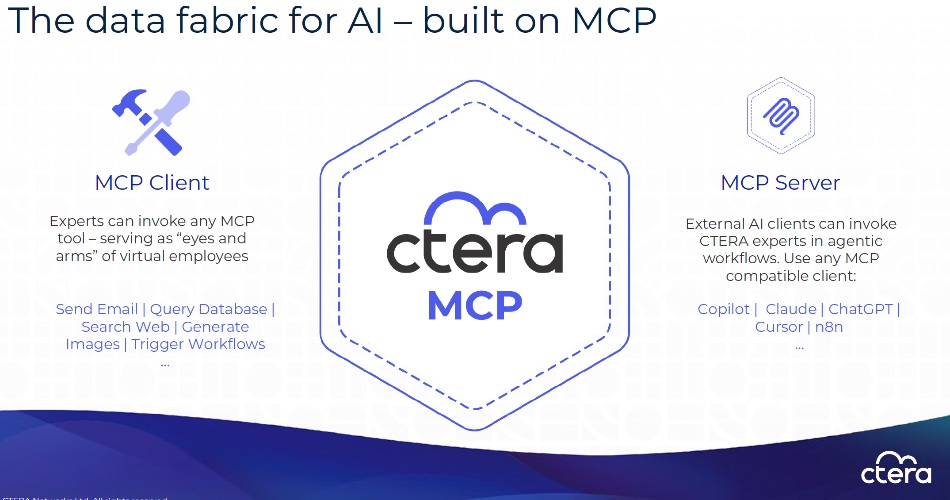

Brand said: “We have decided to go all in on MCP… We think that this is more than a trend – this is a fundamental shift. We believe that any enterprise storage solution that won’t have MCP in the next year will essentially make your data, your AI blind to your data.

CTERA uses both MCP client and server components. “The MCP client allows our system to invoke essentially any external tool. There are hundreds or 1,000s of MCP tools available today, starting for anything from sending emails, querying databases, searching the web, generating images, triggering workflows so that our system can connect to any enterprise data source or output to any enterprise output destination,” he told the IT Press Tour audience. “We have an MCP server in various levels in our product.

The MCP server is built into the global file system at the metadata layer, enabling AI tools (OpenAI, Anthropic, Copilot) to interact with the global file system. The data intelligence component works at the content level on curated datasets, allowing clients like Copilot, Claude, ChatGPT, and Cursor to create insights from the semantic layer.

The data intelligence component is separate from CTERA’s basic global file system and can be deployed independently. “We made efforts to make sure we’re not in the data path,” Brand said. “When data intelligence reads data, it can go directly to the object storage, and that’s why it doesn’t pose as much load on our system. We bypass it, basically CTERA Direct; you could think of it as RDMA for object storage.”

The system isn’t limited to CTERA data. It’s built as a multi-source platform capable of reading from Confluence, wikis, websites, SharePoint, and other NAS systems.

He provided a customer example: “One project we’re working on with a medical firm. Today, they use doctors in order to do medical analysis of insurance claims, and so essentially, they have hundreds of documents, and it could cost 1,000s of dollars per case to analyze all these documents, and to produce a report about the case.

“We’re working with them to dramatically cut the effort that they’re spending on that and streamline this preparation by using the metadata extraction … from each of these documents. They automatically get the relevant and important fields and things and a medical summary of what happened in each of these cases. They’re able to produce this report, and they could save perhaps 80 percent of the effort.”

Comment

CTERA is demonstrating domain-specific AI agents (virtual employees) interacting with cleaned data in an entirely private platform, and reckons it is delivering reliable outcomes faster than human employees. The company is implementing an AI data pipeline on top of its storage infrastructure while keeping it architecturally separate.

As basic hybrid data storage becomes commoditized – a reality VAST Data recognized previously – the value shifts to the data access and AI-aware pipeline stack built on top. Pure Storage is pursuing a similar strategy.

The question for enterprises is no longer whether to adopt AI, but how to do so securely with high-quality, curated data. CTERA’s answer: empower employees to build their own virtual assistants using trusted, permission-aware enterprise data.

Contact CTERA here to find out more about its data intelligence offering.