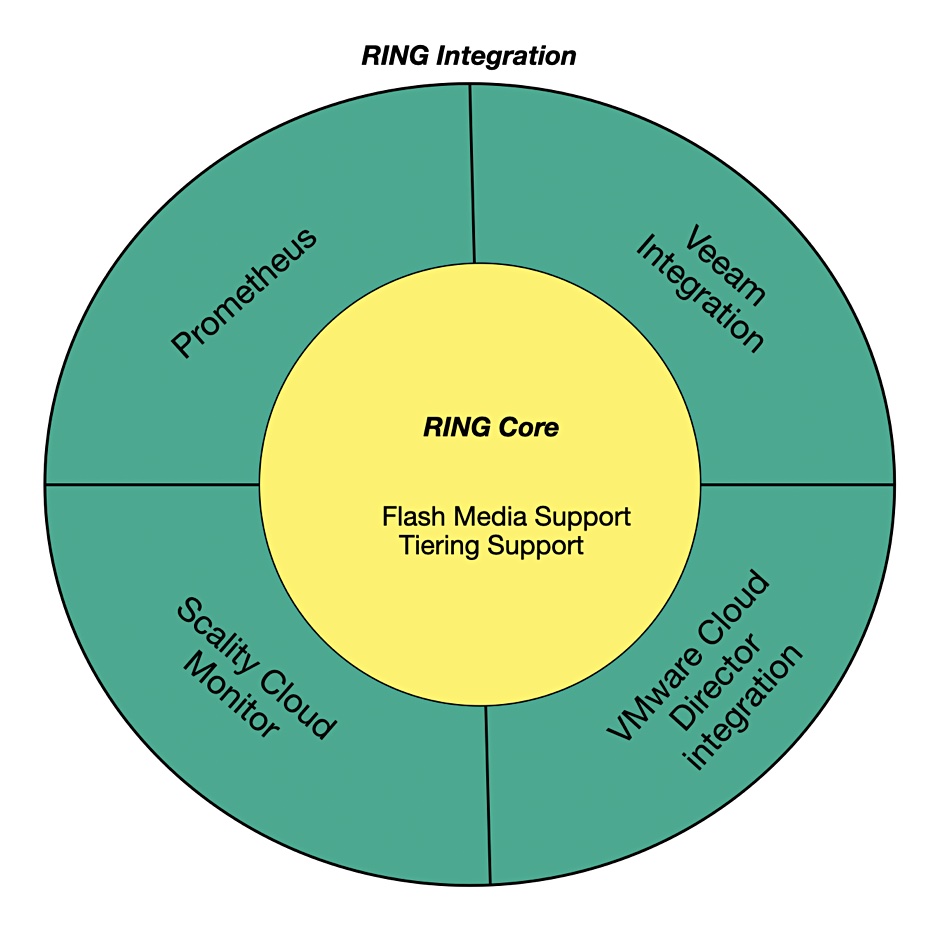

Scality has added NVMe flash and tiering support to its RING object storage product and extended its integrations at API level with Veeam backup, VMware Cloud Director, and Prometheus management systems.

RING object storage, which also supports file access, gained SSD media support in 2020. Now it can support multiple storage media tiers through a Storage Accelerator feature, which dynamically moves data between NVMe flash, slower QLC (4 bits/cell) flash, and disk drives to balance data access speed against cost.

Randy Kerns, senior strategist and analyst at Evaluator Group, commented: “This new feature enables high performance with low-latency flash and increases storage efficiency with tiering as data ages. This will improve usages such as backups and medical imaging, as well as new latency-sensitive workloads in media by automatically optimizing data placement.”

Scality says this expands its addressable markets and use cases to media streaming and big data analytics as well as medical imaging. All three, it says, previously required a separate storage resource.

It has also added API-level integrations so that RING fits more smoothly into enterprise environments. There is a new management and monitoring facility, plus a dashboard, built on Prometheus and Alertmanager. A Scality Cloud Monitor enhances remote monitoring and observability.

Prometheus is Apache-licensed software for event monitoring and alerting that records real-time metrics in a time-series database. It is a popular open-source monitoring stack with built-in graphing features.

RING can now integrate with the Veeam backup product at an API level. The VMware Cloud Director integration supports production use in service provider VMware deployments. The product also has more comprehensive AWS Identity and Access Management (IAM) bucket policies to tighten security and access control.

Scality CMO Paul Speciale said: “Users gain improvements in storage efficiency through internal flash-to-disk tiering and dynamic data protection policies. For modern cloud-based data centres, RING9 fits naturally into the monitoring and observability ecosystem with support for Prometheus and Elastic Cloud.” We asked him some questions about the new release.

Scality Q&A

Blocks & Files: How many tiers can RING9 support? The release identifies three: fast NVMe flash, QLC flash, and disk.

Paul Speciale: Storage Accelerator (the new multi-tiering feature) can support any number of tiers. Since RING now supports many different storage media types, cloud storage, and even tape for end-to-end data lifecycle management, we see practical uses for two, three or more tiers such as:

- fast flash (NVMe)

- high-density flash (such as QLC)

- HDD

- cold storage (tape or cloud archives)

Users might consider other tiers (for example, one of our customers has two tape tiers: one with a single tape copy and one with replicated tapes).

Blocks & Files: What criteria are used to move objects between tiers? Are these criteria settable via policies?

Paul Speciale: Current policies are age (time) based, and the age threshold is configurable via policies. This will be broadened in the future to include policies based on data type, size, etc.
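To make the idea of an age-based policy concrete, here is a minimal Python sketch of how a tiering engine might pick a target tier from an object's age. The tier names and day thresholds are hypothetical illustrations, not Scality defaults or Storage Accelerator configuration syntax.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AgeRule:
    """Hypothetical rule: objects at least `min_age` old belong on `tier`."""
    tier: str
    min_age: timedelta


# Hypothetical policy, sorted by ascending min_age (hottest tier first).
POLICY = [
    AgeRule("nvme-flash", timedelta(days=0)),
    AgeRule("qlc-flash", timedelta(days=7)),
    AgeRule("hdd", timedelta(days=30)),
    AgeRule("tape", timedelta(days=365)),
]


def target_tier(last_modified: datetime, now: datetime, policy=POLICY) -> str:
    """Return the coldest tier whose age threshold the object has reached.

    Assumes `policy` is sorted by ascending min_age, so the last matching
    rule is the coldest eligible tier. Falls back to the first tier.
    """
    age = now - last_modified
    chosen = policy[0].tier
    for rule in policy:
        if age >= rule.min_age:
            chosen = rule.tier
    return chosen


if __name__ == "__main__":
    now = datetime(2024, 1, 31, tzinfo=timezone.utc)
    for days in (1, 10, 90, 400):
        print(days, "days old ->", target_tier(now - timedelta(days=days), now))
```

With these illustrative thresholds, a 10-day-old object lands on `qlc-flash` and a 400-day-old object on `tape`. A real engine would also have to schedule and throttle the data movement itself, which this sketch leaves out.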

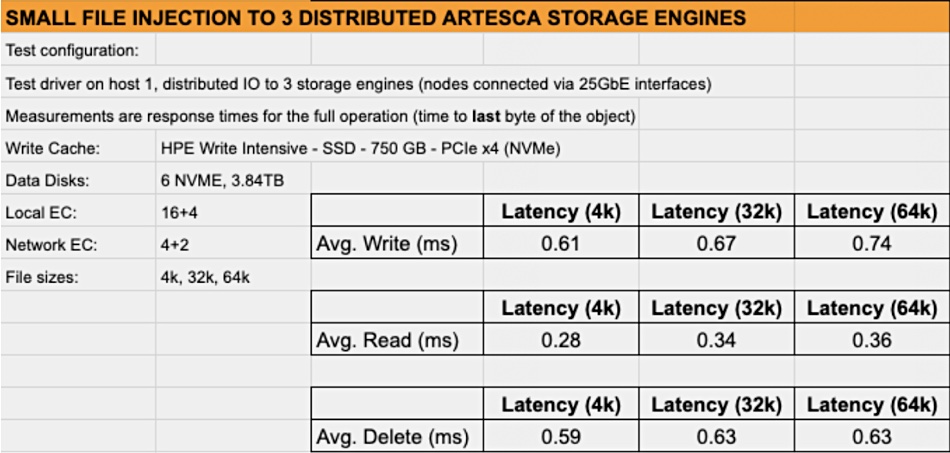

Blocks & Files: Can Scality provide performance numbers for accessing an object on NVMe flash, QLC flash and disk? How does Scality RING9 performance compare to that of MinIO?

Paul Speciale: In the next few months we will publish the latest RING9 performance for key customer applications in big data analytics and for backup/restore workloads. On small object workloads, note that our products have already demonstrated sub-millisecond latencies for 4KB, 32KB and 64KB workloads as documented here:

Blocks & Files: The release says RING9’s Storage Accelerator (SA) enables dynamic tiering and data protection policies. What data protection policies are these?

Paul Speciale: Each tier in Storage Accelerator is defined as a combination of:

(a) A storage media type (as explained above: fast flash, high-density flash, HDD, etc.)

(b) Any RING replication or erasure-coding policy (all tiers use the usual reliable RING protection methods)

Since replication is faster for writes than erasure coding, it will be common to have a replication policy on the first tier, then to stage data off to more cost-effective tiers with more storage-efficient EC policies. This alone can increase storage efficiency, cutting overhead from 200 percent (three copies) to 33 percent (using an erasure coding policy with nine data and three parity stripes).
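The overhead figures quoted above follow from simple arithmetic. With n-way replication every byte is stored n times, so the overhead is (n - 1) x 100 percent; with erasure coding using k data and m parity fragments, the overhead is m/k x 100 percent. The Python sketch below checks both numbers. Its `Tier` structure mirrors the media-plus-protection pairing Speciale describes, but the class and field names are hypothetical, not RING configuration keys.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Replication:
    copies: int  # total number of full copies stored

    def overhead(self) -> float:
        # Extra raw capacity as a fraction of the user data size.
        return float(self.copies - 1)


@dataclass(frozen=True)
class ErasureCoding:
    data: int    # k data fragments
    parity: int  # m parity fragments

    def overhead(self) -> float:
        # Parity capacity as a fraction of the user data size.
        return self.parity / self.data


@dataclass(frozen=True)
class Tier:
    """Hypothetical tier: a media type paired with a protection policy."""
    media: str
    protection: Union[Replication, ErasureCoding]


def raw_capacity_needed(user_tb: float, tier: Tier) -> float:
    """Raw capacity (TB) consumed to store `user_tb` of user data on `tier`."""
    return user_tb * (1 + tier.protection.overhead())


hot = Tier("nvme-flash", Replication(copies=3))
warm = Tier("hdd", ErasureCoding(data=9, parity=3))

if __name__ == "__main__":
    for t in (hot, warm):
        print(f"{t.media}: {t.protection.overhead():.0%} overhead, "
              f"{raw_capacity_needed(100, t):.1f} TB raw per 100 TB stored")
```

Running it reports 200% overhead (300.0 TB raw per 100 TB stored) for three-way replication and 33% overhead (133.3 TB raw) for the 9+3 erasure coding scheme, which is where the capacity saving from tiering to EC comes from.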

Blocks & Files: What partners does Scality have for the APIs for enhanced monitoring, reporting, and data placement apart from Veeam? What functionality do these API integrations enable?

Paul Speciale: Just to be clear, the statement “Streamline integration with API extensions to ecosystem partners such as Veeam and VMware Cloud Director (VCD)” refers to APIs published by Veeam and VMware. We have integrated with their APIs rather than publishing new APIs of our own.

Blocks & Files: You say RING9 fits naturally into the monitoring and observability ecosystem with support for Prometheus and Elastic Cloud. What does support for Elastic Cloud enable?

Paul Speciale: Cloud Monitor on Elastic Cloud provides us with access to Elastic tools for ML-driven AIOps/observability features. Note that the integration of Prometheus for monitoring provides a popular and open API for admins to develop their own tools against.
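Speciale's point that Prometheus gives admins an open API to build tools against can be illustrated with Prometheus's standard HTTP instant-query endpoint, `/api/v1/query`. The Python sketch below, using only the standard library, builds a query URL and lists scrape targets whose built-in `up` metric is 0 (scrape failed). The server address and the `job="ring"` label are hypothetical; the article does not say which metrics RING9 actually exports.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_query_url(base_url: str, promql: str) -> str:
    """Build a Prometheus instant-query URL (GET /api/v1/query)."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def down_targets(response_body: str) -> list:
    """Return label sets of series whose value is 0 in an instant-vector result."""
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    result = payload["data"]["result"]
    # Each sample's "value" is [unix_timestamp, "value-as-string"].
    return [s["metric"] for s in result if float(s["value"][1]) == 0.0]


def fetch(url: str, timeout: float = 5.0) -> str:
    """Perform the HTTP GET and return the response body as text."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    # Hypothetical Prometheus server and job label.
    url = build_query_url("http://prometheus.example:9090", 'up{job="ring"}')
    print(down_targets(fetch(url)))
```

Keeping the URL construction and response parsing separate from the network call makes the parsing easy to test offline and to reuse with other PromQL expressions.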