Jupiter, Europe’s first exascale supercomputer, looks like it could be using NVMe SSDs, disk, IBM’s Spectrum Scale parallel file system, TSM backup, and LTO tape for its storage infrastructure.

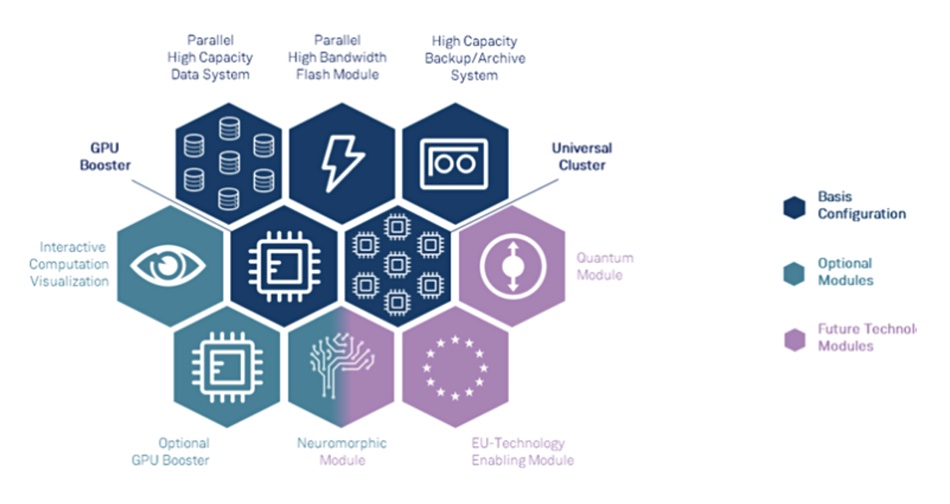

The Jupiter system will have a modular design with three storage components: a parallel file system, a parallel, high-bandwidth flash module, and a backup/archive system. The system will be housed at Germany’s Forschungszentrum Jülich (FZJ) Supercomputing Centre in North Rhine-Westphalia.

Jupiter stands for “Joint Undertaking Pioneer for Innovative and Transformative Exascale Research”.

Dr Thomas Eickermann, head of the communications system division at the Jülich Supercomputing Centre (JSC), told B&F: “The details of the storage configuration are not yet fixed and will be determined during the procurement of the exascale system.

“The parallel high bandwidth flash module will be optimized for performance and will therefore be tightly integrated with the compute modules via their high-speed interconnect. For the high capacity parallel file system, capacity and robustness will be further important selection criteria. This file system will most likely mainly be based on (spinning) hard disks. For the high capacity backup/archive system, we target a substantial extension of JSC’s existing backup/archive that currently offers 300PB of tape capacity.”

JSC already operates the JUWELS and JURECA supercomputers. JUWELS is a multi-petaflop modular supercomputer and currently consists of two modules. The first, deployed in 2018, is a Cluster module: a BullSequana X1000 system with Intel Xeon Skylake-SP processors and Mellanox EDR InfiniBand. The second, deployed in 2020, is a Booster module: a BullSequana XH2000 system with second-generation AMD EPYC processors, Nvidia Ampere GPUs, and Mellanox HDR InfiniBand.

JURECA (pronounced “Eureka” and short for Juelich Research on Exascale Cluster Architectures) started in 2015. It uses Intel’s 12-core Xeon E5-2680 Haswell CPUs, deployed in a total of 1,900 dual-CPU nodes. The system includes Nvidia K80 GPU accelerators, and its nodes are connected by Mellanox 100Gbit/s InfiniBand interconnects across 34 water-cooled cabinets. The nodes run a CentOS Linux distribution.

The Next Platform thinks “Atos will almost certainly be the prime contractor on the exascale machine going into FZJ. And if that is the case, Jupiter will probably be a variant of the BullSequana XH3000 that was previewed back in February.” XH3000 systems will support HDR 200Gbit/s and NDR 400Gbit/s InfiniBand from Nvidia and also Atos’s BXI v2 interconnect. Jupiter’s flash modules will have to hook up to the XH3000’s compute nodes using either InfiniBand or the BXI interconnect.

According to a cached Google search result, JSC uses several GPFS (Spectrum Scale) scale-out, parallel file systems and disk drive media for some user data. The data is backed up to tape using TSM-HSM (Tivoli Storage Manager – Hierarchical Storage Manager).

In March 2018, JSC tweeted about its 17-frame IBM TS4500 tape library with 20 LTO-8 drives installed and more than 20,000 LTO-7 M8 tape cartridges inside. This adds 180PB of capacity to its two Oracle SL8500 tape libraries for backing up and archiving HPC user data.
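The 180PB figure lines up with the cartridge count if each LTO-7 M8 cartridge is taken at 9TB native (uncompressed) capacity. That per-cartridge number is our assumption rather than something stated in the tweet, and the function name below is purely illustrative. A quick sanity check might look like this:

```python
# Rough capacity check for the TS4500 library described above.
# Assumption (not from the tweet): an LTO-7 cartridge formatted as
# Type M (M8) in an LTO-8 drive holds 9 TB native, uncompressed.
TB_PER_LTO7_M8 = 9
TB_PER_PB = 1000  # decimal units, as tape vendors quote them


def tape_capacity_pb(cartridges: int, tb_per_cartridge: float) -> float:
    """Return the native capacity in PB for a number of cartridges."""
    return cartridges * tb_per_cartridge / TB_PER_PB


if __name__ == "__main__":
    # "More than 20,000" cartridges, so this is a lower bound.
    print(tape_capacity_pb(20_000, TB_PER_LTO7_M8))  # 180.0
```

Since the library holds more than 20,000 cartridges, 180PB reads as a rounded lower bound on native capacity.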

It looks as if the easy button for JSC’s parallel file system is Spectrum Scale, and the one for the backup/archive is Spectrum Protect and an IBM tape library, using modern LTO-9 tapes and drives. There doesn’t appear to be a pre-existing flash module though. We suspect boxes of NVMe SSDs will be used.