Dell says it is working with industry standards groups and partners to evolve computational storage technologies and deliver “integrated solutions for customers.”

Gaurav Chawla of Dell’s Infrastructure Solutions Group (ISG) has penned a blog in which he identifies computational storage as drives with a programmed CPU within the drive enclosure. He singles out Computational Storage Drives (CSD), Computational Storage Processors (CSP), and Data Processing Units (DPU) as the “underlying technology enablers.”

A Computational Storage Array (CSA) would include “one or more CSDs, CSPs and the software to discover, manage and utilize the underlying CSDs / CSPs.”

The standards groups include NVM Express and the SNIA, which are working on an architectural model and block storage command set. Chawla anticipates file and object storage command sets being developed as well.

He places computational storage as a development in data processing acceleration which started with hardware such as GPUs. These could process certain kinds of instructions, such as complex image or video rendering, much faster than commodity x86 CPUs. Specific AI/ML processors, such as Google’s Tensor Processing Unit (TPU), are continuing this trend.

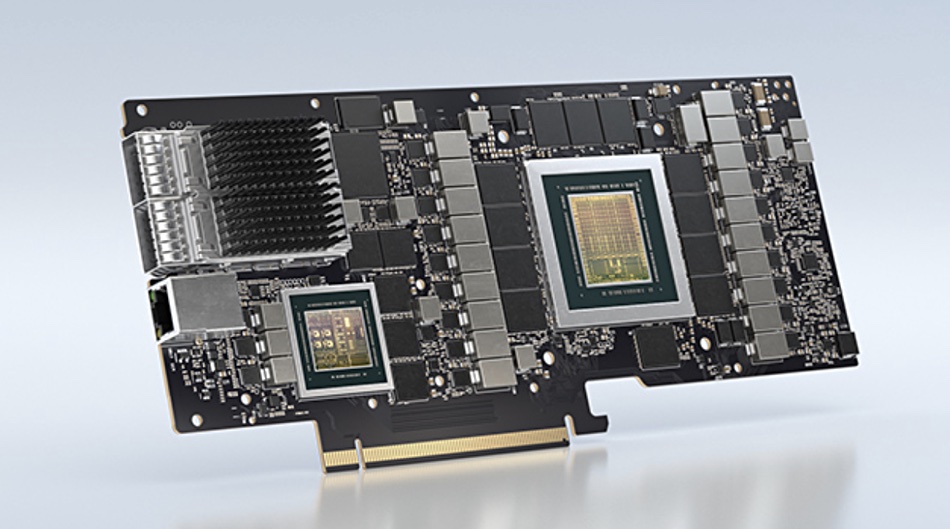

The second acceleration development Chawla identifies is network and storage acceleration. He says it started with FPGAs and SmartNICs, and evolved over the last two years with a broader industry focus on DPUs (Data Processing Units) and IPUs (Infrastructure Processing Units). Nvidia makes the BlueField SmartNIC, Fungible is shipping DPUs, and Intel has its IPUs.

Data acceleration will help bring data-aware processing into IT, he says. This means processing that takes place because of a data event, such as an incoming data stream or because data of a certain type is recognized, like an image of a particular object.

Why do compute in storage?

There are three main reasons for doing computational storage. The first is to offload a server CPU so that it spends more time processing applications and less time on repetitive low-level storage operations such as compression, video file transcoding, and object metadata tagging.

Secondly, having such computation done at the drive level obviates the need to move the data into a host server’s DRAM, supposedly saving electricity. But the data has to move from the drive’s media into the CSP’s DRAM and we are not yet aware of detailed investigations into the relative energy costs of computational storage versus doing the same calculations in a host server’s CPU+DRAM complex.

Thirdly, computational storage work can be done before its results are needed, in parallel with host server application processing and also in parallel across storage drives. The results can then be ready as soon as an application needs them, rather than the application doing the processing itself, which would take longer.
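The parallelism point can be illustrated with a minimal Python sketch. It is only a simulation under stated assumptions: `SimulatedDrive`, `count_matches`, and `offload_count` are hypothetical names, and threads stand in for the processors inside real drives. The same task is started on every drive, the host carries on with its own work, and only the small per-drive results are collected at the end.

```python
from concurrent.futures import ThreadPoolExecutor


class SimulatedDrive:
    """Hypothetical stand-in for a computational storage drive."""

    def __init__(self, name, blocks):
        self.name = name
        self.blocks = blocks  # data that stays on this drive

    def count_matches(self, needle):
        # Runs "on the drive": only the count leaves, not the data.
        return sum(block.count(needle) for block in self.blocks)


def offload_count(drives, needle):
    """Start the same task on every drive and return the pending results."""
    pool = ThreadPoolExecutor(max_workers=len(drives))
    pending = {d.name: pool.submit(d.count_matches, needle) for d in drives}
    pool.shutdown(wait=False)  # already-submitted tasks still run
    return pending


drives = [
    SimulatedDrive("csd0", ["error ok error", "ok ok"]),
    SimulatedDrive("csd1", ["ok", "error"]),
]

pending = offload_count(drives, "error")

# The host keeps doing application work while the drives compute.
host_work = sum(range(1000))

# Results are collected only when the application needs them.
results = {name: future.result() for name, future in pending.items()}
total = sum(results.values())
```

In a real system the per-drive work would run on the drive's own processor rather than in a host thread, so this shows only the shape of the interaction, not its performance.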

CSD suppliers

CSDs are being developed by NGD with its Newport drive, ScaleFlux with a CSD 2000 product, Eideticom, and Samsung.

In general, CSDs use Arm CPUs, often included in an FPGA setup. RISC-V processors may also be used.

IBM added compute-in-storage to its third-generation Flash Core Modules (FCM) in February. The FCM has an onboard RISC-V processor and in-line compression hardware to handle background storage tasks.

Analyst Thomas Coughlin says parts of storage applications can be offloaded from the CPU to the FCM. Such processing can be distributed among many FCMs to perform replication, snapshots, data reduction, encryption/decryption, and monitoring. He told newsletter subscribers that IBM sees the FCMs as compute elements for Spectrum Virtualize and that they can perform filtering, searching, and scanning to assist in analytics and machine learning, for example in malware detection. The search function can scan log files for various strings.

IBM said that work is being done in standards bodies to define the APIs needed for host systems or databases to determine which parts of volumes need scanning in the FCM.

Dell and computational storage

Dell’s mid-range PowerStore unified block and file arrays have an AppsON feature enabling them to run applications as virtual machines inside the array, using its dual-controller CPUs and DRAM. At the PowerStore launch in May 2020, Dell said AppsON was a good fit for data-intensive workloads with analytics run locally on the array from streamed-in data sources such as Splunk, Flink, and Spark.

We can consider such a system as a variant of a hyperconverged infrastructure appliance (HCI), with compute embedded in the storage rather than storage converged with the compute. Of course, the amount of compute resource is limited with PowerStore compared to a proper HCI system such as VxRail, and the storage is a physical array (controlled by array software running in a VM in the controllers) rather than a virtual SAN operating across a cluster of HCI server nodes.

There is no standard way for a host server system to know if computational storage tasks have been executed, either at drive or array level. We can envisage a SNIA scheme for a server or a data IO event to start a computational storage session and for such a session to tell the server software that it has run and completed. This would spare each customer from developing customized software to do the same thing, and so from reinventing the wheel.

Such storage computation start and end messages could also be applied to a server host and CSD situation.
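As a rough illustration of what such start and end messages might look like, here is a minimal Python sketch. Everything in it is an assumption made for illustration: `SessionMessage`, `SessionState`, and `ComputationalStorageTarget` are hypothetical names and do not come from SNIA, NVM Express, or Dell. The target, which could be a drive or an array, notifies the host when a session starts and again when it completes.

```python
import itertools
import zlib
from dataclasses import dataclass
from enum import Enum


class SessionState(Enum):
    STARTED = "started"
    COMPLETED = "completed"


@dataclass
class SessionMessage:
    """Hypothetical notification sent from the storage target to the host."""
    session_id: int
    task: str
    state: SessionState


class ComputationalStorageTarget:
    """Hypothetical drive or array that runs a task and reports its progress."""

    def __init__(self, name):
        self.name = name
        self._ids = itertools.count(1)  # session ids start at 1

    def run(self, task, func, data, notify):
        session_id = next(self._ids)
        notify(SessionMessage(session_id, task, SessionState.STARTED))
        result = func(data)  # the computation happens next to the data
        notify(SessionMessage(session_id, task, SessionState.COMPLETED))
        return result


# Host side: collect the messages so software knows the task has run.
log = []
target = ComputationalStorageTarget("array0")
compressed_size = target.run(
    "compress",
    lambda d: len(zlib.compress(d)),
    b"a" * 1000,
    log.append,
)
```

The host learns from the second message that the session has finished, without needing any vendor-specific polling code. A real scheme would also have to cover errors, timeouts, and sessions triggered by data IO events, none of which is modelled here.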

If such a model existed, Dell could sell integrated computational storage systems to customers knowing that there was a standard framework for their use, with readily available system software support. Chawla writes: “We will see future databases and data lake architectures take advantage of computational storage concepts for more efficient data processing, data discovery and classification.”