Fungible has announced GPU-Connect (FGC), which uses its DPU to dynamically compose GPU resources across an Ethernet network, enabling cloud and managed service providers to offer GPUs-as-a-service.

The startup has designed its data processing unit (DPU) chip hardware specifically to handle east-west traffic across a datacenter network. The DPU takes on low-level all-flash storage and network processing, offloading host server CPUs so they can run more application workloads. Fungible DPU cards fit into servers and storage arrays – and now a GPU enclosure – and are connected across a TruFabric networking link, with Composer software dynamically composing server, storage, networking and GPU resources for workloads. The aim is to avoid stranded resource capacity in fixed physical servers, storage and GPU systems, and to increase component utilization.
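To make the stranded-capacity point concrete, here is a minimal Python sketch of a shared GPU pool. The `GpuPool` class and its `compose` and `release` methods are hypothetical illustrations, not Fungible's Composer interface, and the sketch assumes GPUs are interchangeable while ignoring the PCIe and network plumbing that the DPU actually handles.

```python
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Hypothetical toy model of a composable GPU pool (not Fungible's Composer API)."""

    free: set[str]  # GPUs not currently attached to any host
    attached: dict[str, set[str]] = field(default_factory=dict)  # host -> its GPUs

    def compose(self, host: str, count: int) -> set[str]:
        """Attach `count` free GPUs to `host`; raise if the pool cannot satisfy it."""
        if count > len(self.free):
            raise RuntimeError(f"{count} GPUs requested, only {len(self.free)} free")
        gpus = {self.free.pop() for _ in range(count)}
        self.attached.setdefault(host, set()).update(gpus)
        return gpus

    def release(self, host: str) -> None:
        """Detach all of `host`'s GPUs and return them to the shared pool."""
        self.free |= self.attached.pop(host, set())


if __name__ == "__main__":
    # One 8-GPU pool shared by two hosts instead of GPUs fixed inside each server.
    pool = GpuPool(free={f"gpu{i}" for i in range(8)})
    pool.compose("host-a", 6)  # host-a's job needs six GPUs
    pool.release("host-a")     # job done: the GPUs go back to the pool
    pool.compose("host-b", 8)  # host-b can now take all eight
    print(len(pool.attached["host-b"]), len(pool.free))  # prints: 8 0
```

The design point the sketch highlights is that GPUs belong to the pool rather than to a particular server, so capacity left idle by one host is not stranded there. In FGC, per Fungible, the attach step is a hardware-managed virtual PCIe connection over Ethernet rather than a dictionary update.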

Toby Owen, Fungible’s VP of product, is quoted in Fungible’s announcement: “Fungible GPU-Connect empowers service providers to combine all their GPUs into one central resource pool serving all their clients. Service providers can now onboard new data-analysis intensive clients quickly without adding expensive servers and GPUs. By leveraging FGC, datacenters can benefit from the collective computing power of all their GPUs and substantially lower their TCO with the reduction of GPU resources, cooling and physical footprint needed.”

The Fungible DPU creates a secure, virtual PCIe connection between the GPU and the server, managed by hardware, that is transparent to the server and to applications. No special software or drivers are needed and Fungible GPU-Connect (FGC) can be retrofitted into existing environments. Fungible research indicates that GPUs typically sit idle while accessing hosts digest their results – with average GPU utilization per user around 15 per cent.

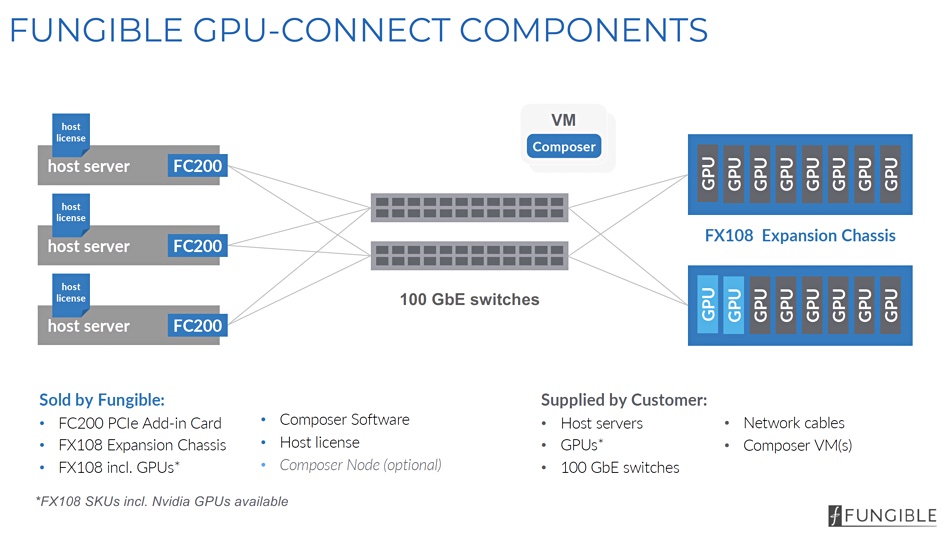

FGC includes an FX-108 GPU card chassis, an FC200 accelerator card for the host server, and Fungible’s Composer software.

The 4U chassis can house up to eight single- or double-width Nvidia GPU cards – A2, A10, A16, A30, A40, A100-40G and A100-80G – connected over 16-lane PCIe 3 or PCIe 4, plus an optional NVLink Bridge. It has 100GbitE connectivity and supports Top-of-Rack-only and spine-leaf network topologies. There can be up to four F200 cards.

The Composer software runs on physical or virtual x86 host servers.

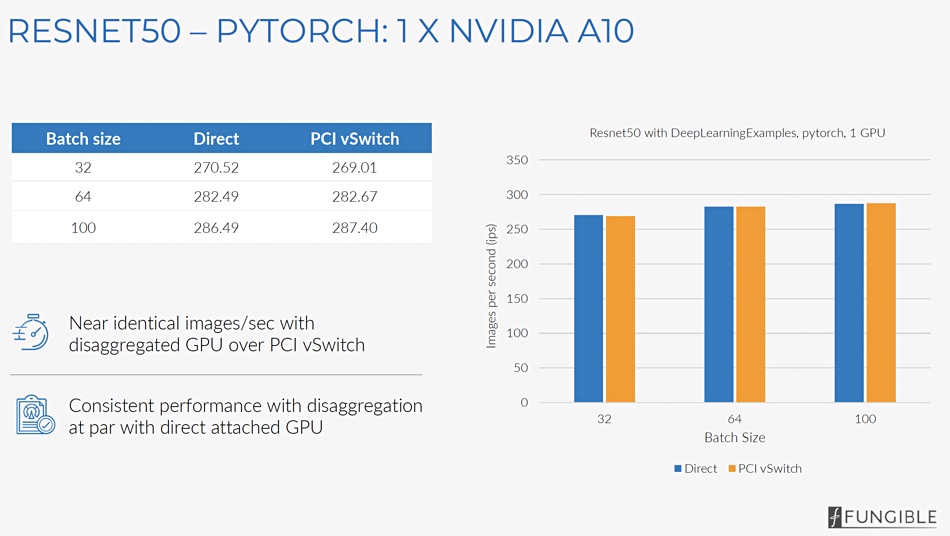

The system’s performance is near-identical to that of directly connected GPUs, and identical within the margin of error in some cases. Here is a ResNet-50 example:

Fungible is the sole DPU supplier combining its accelerator chips and cards with composability across its own network. Intel’s IPU is not a composability offering, and neither are Nvidia’s BlueField product line or AMD’s Pensando chips.

A person close to Fungible said, regarding Pensando: “The main difference [is] that we have built products based on our DPUs instead of just trying to shove them into servers. Two different approaches. We are more focused on the composable infrastructure and the datacenter as a whole.”

The two main composable system startups, Liqid and GigaIO, both use physical PCIe as their connectivity medium. Fungible provides a virtual PCIe facility across Ethernet.

The intent is that tier-2 MSPs and CSPs will use FGC as a base for their own GPUaaS offerings – a hitherto untapped market. Some large or specialized enterprises may use FGC as well. We have been told that Fungible is getting a lot more traction now with partners and customers and the FGC platform is exciting a lot of interest. Let’s see if Fungible has composed the right product set for the market.