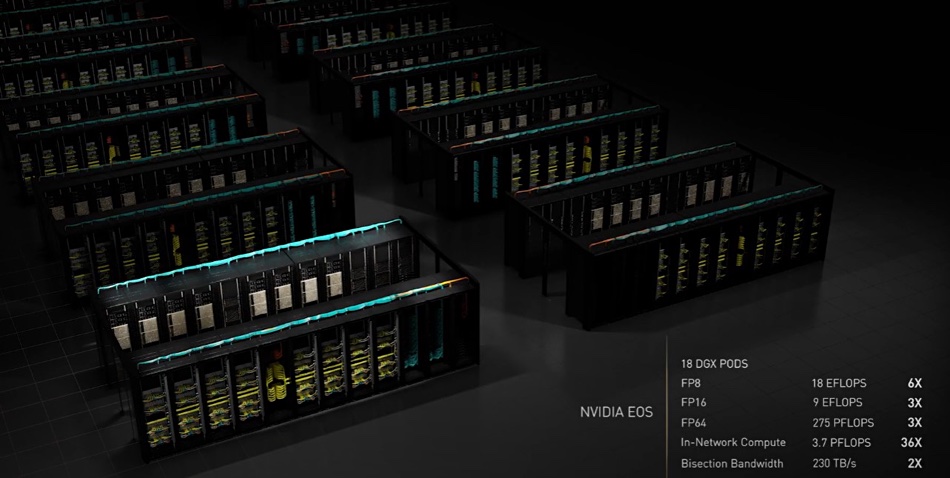

Nvidia is building an AI supercomputer called Eos for its own use in climate science, digital biology, and AI workloads. Eos should provide 18.4 exaFLOPS of AI performance and will be built using Nvidia’s SuperPOD technology. How will its data be stored?

Eos will have 576 x DGX systems utilizing 4,608 Hopper H100 GPUs. There are 8 x H100 GPUs per DGX and 32 x DGX systems per Nvidia SuperPOD, hence 18 SuperPODs in Eos. Each DGX provides 32 petaFLOPS of AI performance. Eos should be four times faster than Fujitsu’s Fugaku supercomputer in terms of AI performance and is expected to provide 275 petaFLOPS of performance for traditional scientific computing.
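The system counts and the headline AI performance figure follow from one another, and a few lines of Python make the arithmetic explicit. This is only a sanity check on the numbers quoted above; the variable names are ours, not Nvidia's.

```python
# Figures quoted in the article (variable names are illustrative).
GPUS_TOTAL = 4608        # Hopper H100 GPUs in Eos
GPUS_PER_DGX = 8         # H100 GPUs per DGX system
DGX_PER_SUPERPOD = 32    # DGX systems per SuperPOD
PFLOPS_PER_DGX = 32      # AI performance per DGX, in petaFLOPS

dgx_systems = GPUS_TOTAL // GPUS_PER_DGX            # 576 DGX systems
superpods = dgx_systems // DGX_PER_SUPERPOD         # 18 SuperPODs
ai_exaflops = dgx_systems * PFLOPS_PER_DGX / 1000   # petaFLOPS -> exaFLOPS

print(f"{dgx_systems} DGX systems, {superpods} SuperPODs, "
      f"{ai_exaflops:.1f} exaFLOPS of AI performance")
```

The result rounds to the 18.4 exaFLOPS that Nvidia quotes for Eos.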

Eos will need a vast amount of data storage, and a very wide and swift data pipeline, to feed its GPUs fast enough to keep them busy.

Wells Fargo analyst Aaron Rakers writes: “Each of the DGX compute nodes will incorporate two of NVIDIA’s BlueField-3 DPUs for workload offloading, acceleration, and isolation (i.e. advanced networking, storage, and security services).”

We can expect that the storage system will also use BlueField-3 DPUs as front-end NICs and storage processors.

We envisage that Eos will have an all-flash datastore providing direct data feeds to the Hopper processors’ memory. With promised BlueField-3 support, VAST Data’s scale-out Ceres enclosures with NVMe drives and its Universal Storage software would seem a likely candidate for this role.

Nvidia states: “The DGX H100 nodes and H100 GPUs in a DGX SuperPOD are connected by an NVLink Switch System and NVIDIA Quantum-2 InfiniBand providing a total of 70 TB/sec of bandwidth – 11x higher than the previous generation.”

Rakers writes that data will flow to and from Eos across 360 NVLink switches, providing 900GB/sec of bandwidth between the GPUs within each DGX H100 system. The systems will be connected through ConnectX-7 Quantum-2 InfiniBand networking adapters running at 400Gb/sec.
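Note that the two figures use different units: NVLink bandwidth is quoted in gigabytes per second and the InfiniBand adapters in gigabits per second. A rough conversion, sketched below, puts them on the same scale. It assumes 8 bits per byte and ignores encoding and protocol overhead, so treat the output as a ballpark comparison rather than a measured throughput.

```python
# Rough unit conversion; ignores encoding and protocol overhead (assumption).
BITS_PER_BYTE = 8

infiniband_gbit_per_sec = 400   # per ConnectX-7 adapter, as quoted (Gb/sec)
nvlink_gbyte_per_sec = 900      # GPU-to-GPU NVLink, as quoted (GB/sec)

# Convert the InfiniBand line rate to gigabytes per second.
infiniband_gbyte_per_sec = infiniband_gbit_per_sec / BITS_PER_BYTE

# How many times wider the NVLink figure is than one InfiniBand adapter.
ratio = nvlink_gbyte_per_sec / infiniband_gbyte_per_sec

print(f"InfiniBand adapter: {infiniband_gbyte_per_sec:.0f} GB/sec")
print(f"NVLink is about {ratio:.0f}x one adapter's line rate")
```

On these numbers a single 400Gb/sec adapter carries about 50GB/sec, so an external storage system sits behind a much narrower pipe per link than the GPUs enjoy among themselves.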

This is an extraordinarily high level of bandwidth and the storage system will need to have the capacity and speed to support it. Nvidia says: “Storage from Nvidia partners will be tested and certified to meet the demands of DGX SuperPOD AI computing.”

Nvidia storage partners in its GPUDirect host server CPU-bypass scheme include Dell EMC, DDN, HPE, IBM (Spectrum Scale), NetApp, Pavilion Data, and VAST Data. Weka is another supplier but only provides file storage software, not hardware.

Providing storage to Nvidia for its in-house Eos system is going to be a hotly contested sale – think of the customer reference possibilities – and will surely involve hundreds of petabytes of all-flash capacity with, we think, a second-tier capacity store behind it. Candidate storage vendors will pour account-handling and engineering resource into this like there is no tomorrow.