Fungible has increased its NVMe/TCP storage-to-server performance from 6.55 million to 10 million IOPS by replacing Mellanox NICs with its own Storage Initiator cards, and has claimed a world record.

Update: Fungible versus Pavilion Data comparison section added 17 Nov 2021.

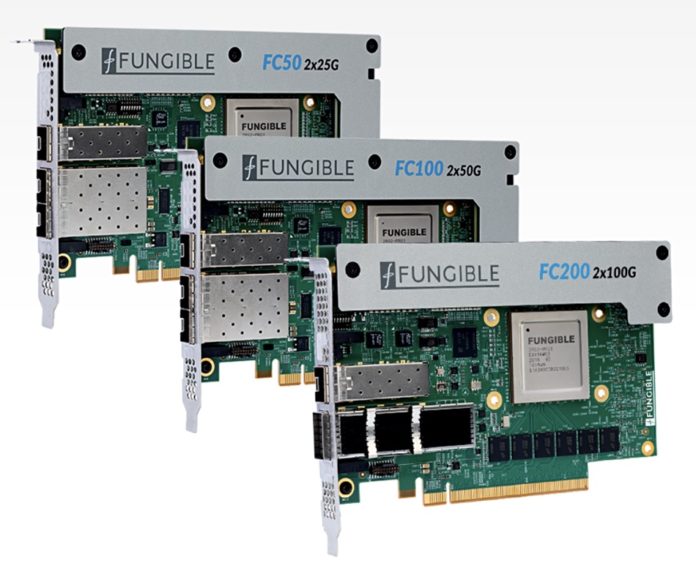

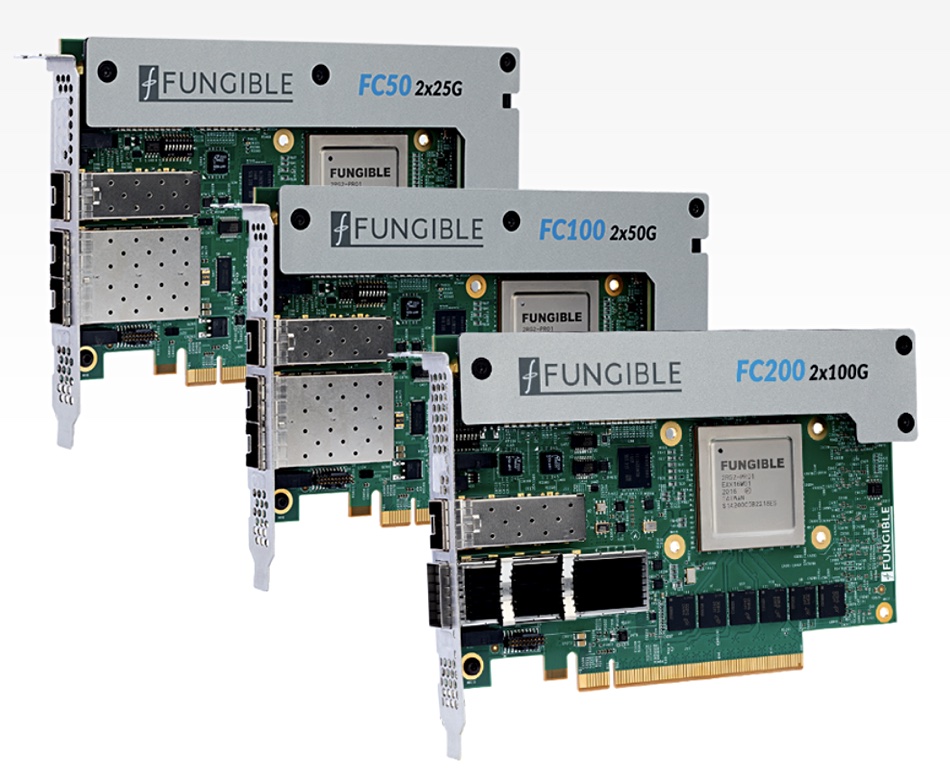

Fungible has developed its own Data Processing Unit (DPU) chip, saying it handles so-called east-west communications in a datacentre better than x86-based servers and standard Ethernet NICs and switches, Fibre Channel switches, etc. The FS1600 is a clusterable 2U, 24-slot NVMe SSD array controlled by Fungible’s DPU chip and software. It produced 6.55 million IOPS when linked to a server using Mellanox ConnectX-5 NICs.

Eric Hayes, CEO of Fungible, said: “The Fungible Storage Initiator cards developed on our … Fungible DPU free up tremendous amounts of server CPU resources to run application code, and the application now has faster access to data than it ever had before.”

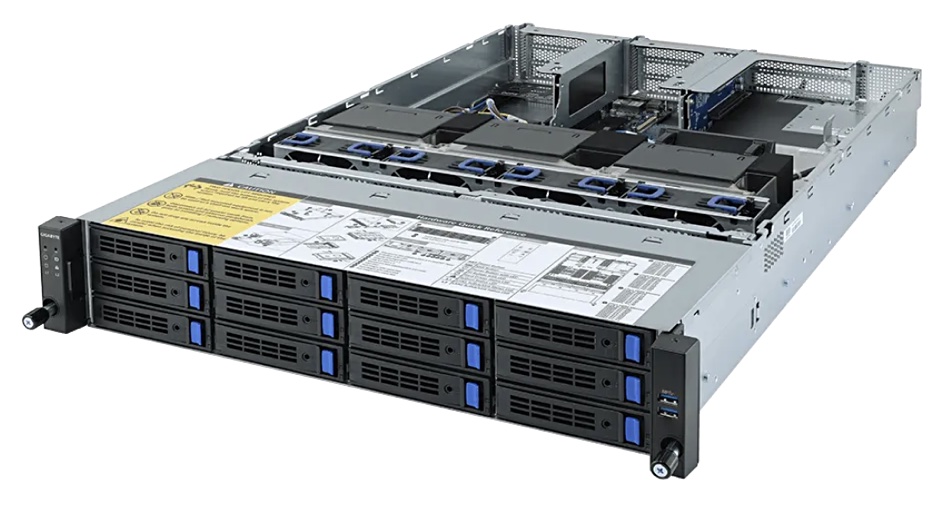

The 10 million IOPS run took place at the San Diego Supercomputer Center (SDSC) and used a Gigabyte R282-Z93 server with two 64-core AMD EPYC 7763 processors, 2TB of memory, and five PCIe 4 expansion slots.

The slots were filled with five Fungible S1 cards, which linked across an Ethernet LAN of undisclosed bandwidth to an undisclosed number of FS1600 Fungible storage target arrays holding an undisclosed amount of capacity. Unlike the system using Mellanox NICs, which almost saturated the host server’s CPUs, this one, running Fungible’s NVMe/TCP Storage Initiator (SI) software, took up only 63 per cent of the Gigabyte server’s cores, meaning about 47 of its 128 cores could run application code.
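The free-core figure follows from the server specification quoted above. A minimal sketch of the arithmetic, assuming the 63 per cent utilisation applies evenly across both processors (the variable names are ours, for illustration only):

```python
import math

# Figures quoted in the article
TOTAL_CORES = 2 * 64      # two 64-core AMD EPYC 7763 processors
SI_UTILISATION = 0.63     # share of cores taken up by the Storage Initiator software

# Assumption: utilisation is spread evenly, so it can be treated as whole cores
busy_cores = TOTAL_CORES * SI_UTILISATION
free_cores = math.floor(TOTAL_CORES - busy_cores)

print(f"Cores busy with storage I/O: {busy_cores:.1f}")
print(f"Cores left for application code: {free_cores}")
```

Rounding down gives the 47 cores cited; the unrounded figure is a little over 47.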

John Graham, a principal engineer with appointments at SDSC and the Qualcomm Institute at UC San Diego, said: “The Fungible solution has set a new bar for storage performance in our environment. The results are potentially transformational for large-scale scientific cyber-infrastructure such as the Pacific Research Platform (PRP) and its follow-on, the National Research Platform (NRP).”

Fungible provided, he said: “A high-performance storage solution that achieves our planned density and cost requirements.”

Comment

If we say each Fungible S1 card delivered 2 million IOPS from an FS1600 then 10 of them could provide 20 million IOPS and thus equal the Pavilion HyperParallel Flash Array’s 20 million IOPS system performance, in theory. That could be distributed across ten servers in a datacentre. If Fungible has an equally compelling price/performance message to back up its performance message then we have a new entrant in the enterprise storage array market as well as in the supercomputing and high-performance computing market.
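That back-of-the-envelope scaling can be written out as follows. This is a sketch only: it assumes IOPS scale linearly with the number of S1 cards, which the benchmark does not demonstrate, and the helper name `cards_needed` is hypothetical.

```python
# Figures quoted in the article
MEASURED_IOPS = 10_000_000    # Fungible's 10 million IOPS run
CARDS_IN_RUN = 5              # five S1 cards in the Gigabyte server
PAVILION_IOPS = 20_000_000    # Pavilion HyperParallel Flash Array figure

# Assumption: each card contributes an equal share of the measured result
iops_per_card = MEASURED_IOPS // CARDS_IN_RUN


def cards_needed(target_iops: int, per_card: int = iops_per_card) -> int:
    """Return the number of cards needed to reach target_iops (ceiling division)."""
    return -(-target_iops // per_card)


print(f"IOPS per S1 card: {iops_per_card:,}")
print(f"Cards to match Pavilion: {cards_needed(PAVILION_IOPS)}")
```

With five cards per server, as in the SDSC run, ten cards would need at least two such servers; spreading them across ten servers means one card each.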

One aspect of its set-up, though, is that its storage initiator cards are proprietary. They are effectively not fungible, as other Ethernet NICs cannot sustain the 2 million IOPS performance number. Of course, once Fungible’s composability software is fully available, the cards will be fully fungible within its composable datacentre infrastructure scheme.

Fungible vs Pavilion

We are told by a source familiar with the situation that it takes Pavilion 20 x86 processors and 40 x 100Gbit Ethernet ports in its standard enclosure to get its 20 million IOPS number. Fungible’s 10 million IOPS came from a single FS1600 node with 2 x DPU chips, drawing 750W. That’s a considerable difference in power draw right there.

We understand that there’s no data resilience in the single-server, single-node Fungible 10 million IOPS setup; it was built purely for raw performance. If we considered a theoretical 20-DPU system, with each DPU running at the roughly 20 million IOPS it was designed for, the theoretical limit would be 400 million IOPS. With Fungible’s current design there are only 6 x 100Gbit ports to the DPU, which means you top out at around 6.5 million IOPS per DPU.

We hear that Fungible’s DPU was designed to support around 20 million IOPS per processor, but it’s not economically prudent to include the amount of networking per box to support that performance profile.
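To put those per-DPU numbers side by side, here is a rough sketch using only the figures quoted above. It assumes IOPS scale linearly with DPU count, and the 20-DPU system is the theoretical one discussed here, not a shipping product.

```python
# Figures quoted in the article
DESIGN_IOPS_PER_DPU = 20_000_000        # what the DPU was reportedly designed for
PORT_LIMITED_IOPS_PER_DPU = 6_500_000   # ceiling with 6 x 100Gbit ports per DPU
MEASURED_IOPS = 10_000_000              # single FS1600 node in the record run
DPUS_PER_FS1600 = 2
THEORETICAL_DPUS = 20                   # hypothetical system matching Pavilion's processor count

# Assumption: linear scaling with the number of DPUs
design_limit = THEORETICAL_DPUS * DESIGN_IOPS_PER_DPU
port_limit = THEORETICAL_DPUS * PORT_LIMITED_IOPS_PER_DPU
measured_per_dpu = MEASURED_IOPS // DPUS_PER_FS1600

print(f"Design limit, 20 DPUs:       {design_limit:,} IOPS")
print(f"Port-limited, 20 DPUs:       {port_limit:,} IOPS")
print(f"Measured per DPU in the run: {measured_per_dpu:,} IOPS")
print(f"Port limit as share of design: {PORT_LIMITED_IOPS_PER_DPU / DESIGN_IOPS_PER_DPU:.1%}")
```

On these numbers the networking, not the DPU, sets the ceiling: the port-limited figure is about a third of the design figure, and the record run sits below the port limit.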