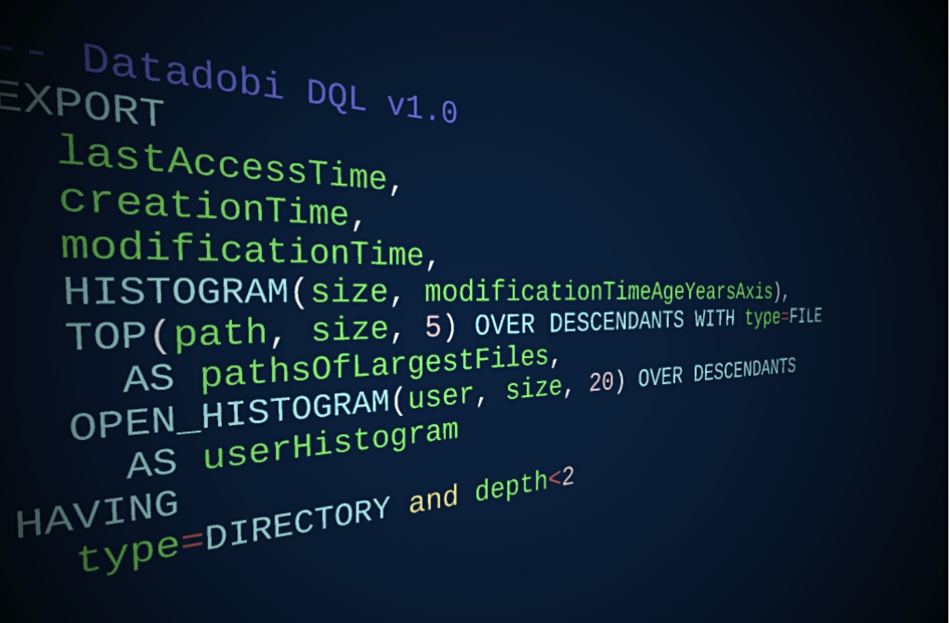

Datadobi has opened up its petabyte-scale file mapping technology to customers through its Dobi Query Language.

Datadobi is a data migration technology supplier, with DobiMigrate moving NAS files and objects, and DobiProtect protecting and recovering them. Both products rely on Datadobi’s so-called Data Mobility Engine and are designed to scan large file systems (data lakes) containing billions of files. Each scan produces a catalogue containing huge lists of file paths and their metadata in a proprietary format.

Historically, Datadobi used these scan files only for data migration or protection, but now customers can query them directly using Dobi Query Language (DQL) as part of a file assessment service.

Carl D’Halluin, Datadobi’s CTO, writes in a blog post: “The volume of data is only expected to grow over the next few years. IT administrators need a data management solution that can transform data into digestible material in order to allow curated decisions on storage options for migration and protection to be made.”

Step forward DQL. It is a query framework that can look inside data lakes to:

- Identify cold data sets — data that is infrequently accessed;

- Identify old data sets — data that was created or modified some time ago;

- Identify data sets owned by a specific user or group, e.g. by users who no longer work at the company;

- Identify shares, exports or directory trees that are homogeneous (cold, old, owner, file types) and can be handled as one data set, e.g. upon which to take specific lifecycle actions.
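Datadobi has not published DQL's syntax in the material covered here, so the following is not DQL. It is a minimal Python sketch of the kinds of questions listed above, run against a tiny in-memory stand-in for a scan catalogue. The `FileRecord` fields, the function names and the sample paths are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
import posixpath


@dataclass
class FileRecord:
    """Hypothetical catalogue entry: a path plus a little metadata."""
    path: str
    owner: str
    accessed: datetime
    modified: datetime


def cold(records, now, days):
    """Files not accessed within the last `days` days."""
    cutoff = now - timedelta(days=days)
    return [r for r in records if r.accessed < cutoff]


def old(records, now, days):
    """Files not modified within the last `days` days."""
    cutoff = now - timedelta(days=days)
    return [r for r in records if r.modified < cutoff]


def owned_by(records, owners):
    """Files belonging to any of the given owners."""
    return [r for r in records if r.owner in owners]


def uniformly_cold_dirs(records, now, days):
    """Directories in which every file is cold, so the directory
    could be handled as a single data set."""
    cutoff = now - timedelta(days=days)
    status = {}
    for r in records:
        d = posixpath.dirname(r.path)
        status[d] = status.get(d, True) and r.accessed < cutoff
    return sorted(d for d, all_cold in status.items() if all_cold)


# Made-up sample data standing in for a scan catalogue.
now = datetime(2021, 6, 1)
catalogue = [
    FileRecord("/proj/a/report.doc", "alice", datetime(2021, 5, 20), datetime(2019, 1, 10)),
    FileRecord("/proj/a/raw.dat", "bob", datetime(2020, 1, 5), datetime(2018, 3, 2)),
    FileRecord("/proj/b/notes.txt", "carol", datetime(2021, 5, 30), datetime(2021, 5, 30)),
    FileRecord("/proj/c/old1.bin", "bob", datetime(2019, 7, 1), datetime(2019, 6, 1)),
    FileRecord("/proj/c/old2.bin", "bob", datetime(2019, 8, 1), datetime(2019, 6, 2)),
]

cold_paths = [r.path for r in cold(catalogue, now, 90)]
old_paths = [r.path for r in old(catalogue, now, 365)]
bob_paths = [r.path for r in owned_by(catalogue, {"bob"})]
cold_dirs = uniformly_cold_dirs(catalogue, now, 90)
```

In this sample only `/proj/c` comes back as uniformly cold, because `/proj/a` mixes a recently accessed file with a stale one. A real catalogue of billions of entries would need an indexed or streaming representation rather than Python lists.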

Datadobi created the file system assessment offering last year as a customer service to help plan a data migration or reorganisation. DQL makes these assessments customisable.

DQL is a form of file system analytics. It opens the door to future possibilities such as an alerting software layer that runs DQL queries to check the status of a file system or a subset of it, and then suggests actions, such as moving a file or object subset to faster or slower, cheaper storage based on its activity level. A step beyond that would be to set up policies and then have an additional layer of software take automatic actions when threshold values are reached.

For example, a policy could move all files or objects of a particular type to archival or cold storage once an access criterion is met (say, the data has not been accessed for 60 days). Datadobi has interesting possibilities here.
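To make the 60-day example concrete, here is a minimal Python sketch of such a policy check. It is purely hypothetical: Datadobi has announced no such feature, and the `plan_moves` function, its parameters and the sample paths are invented for illustration. It only selects candidates and moves nothing.

```python
from datetime import datetime, timedelta


def plan_moves(files, now, file_type, max_idle_days=60):
    """Return paths of the given type whose last access is more than
    `max_idle_days` days before `now`.

    `files` is an iterable of (path, last_access) pairs.
    """
    cutoff = now - timedelta(days=max_idle_days)
    return [
        path
        for path, last_access in files
        if path.endswith(file_type) and last_access < cutoff
    ]


# Made-up sample data.
now = datetime(2021, 6, 1)
files = [
    ("/logs/app.log", datetime(2021, 1, 15)),   # right type, idle: candidate
    ("/logs/new.log", datetime(2021, 5, 25)),   # right type, recently used
    ("/docs/plan.doc", datetime(2020, 12, 1)),  # idle, but wrong type
]

to_archive = plan_moves(files, now, ".log")
```

Keeping the selection step separate from the move itself means the same check could drive an alert that merely suggests the move, or an automated layer that carries it out.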