The network fabric a composable systems supplier chooses determines how finely it can compose. Go the PCIe route and you can compose elements inside a server as well as outside. Go the Ethernet route and you’re stuck outside.

This is by design. PCIe is designed for use inside servers as well as outside, so it can connect to components both within and without. Ethernet and InfiniBand aren’t designed to work that way, so they don’t. You can only compose what you can connect to.

The composability concept is that an individual server, with defined CPU, network ports, memory and local storage, may not be perfectly sized in capacity and performance terms for the application(s) it runs. So applications can run slowly or server capabilities — DRAM, CPU cores, storage capacity, etc. — can be wasted, stranded and idle.

If only excess capacity from one server could be made available to another server, then the resources would not be wasted and stranded but become productive. A composable system dynamically sets up — or composes — a bare metal server from resource pools so that it is better sized for an application, and then decomposes this server when the application run is over. The individual resources are returned to the pool, for re-use by other dynamically-organised servers later.
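The compose and decompose cycle described above can be sketched in a few lines of Python. This is a toy model only, not any supplier's API: the `ResourcePool` and `ComposedServer` classes, the resource names and the capacities are all hypothetical, chosen purely to illustrate resources leaving a shared pool and returning to it.

```python
from dataclasses import dataclass, field


@dataclass
class ComposedServer:
    """A bare metal server assembled from pooled resources (hypothetical model)."""
    name: str
    resources: dict = field(default_factory=dict)


@dataclass
class ResourcePool:
    """Free capacity per resource type, e.g. {"cpu_cores": 64} (hypothetical model)."""
    free: dict

    def compose(self, name, request):
        # Check the whole request first, so a failed compose
        # never leaves the pool partly drained.
        for kind, amount in request.items():
            if self.free.get(kind, 0) < amount:
                raise ValueError(f"not enough {kind} in pool")
        for kind, amount in request.items():
            self.free[kind] -= amount
        return ComposedServer(name, dict(request))

    def decompose(self, server):
        # Return every resource to the pool for re-use by later servers.
        for kind, amount in server.resources.items():
            self.free[kind] = self.free.get(kind, 0) + amount
        server.resources.clear()


# Illustrative capacities only.
pool = ResourcePool({"cpu_cores": 64, "gpus": 8, "nvme_tb": 100})
server = pool.compose("analytics-run", {"cpu_cores": 16, "gpus": 4})
# ... the application runs on the composed server ...
pool.decompose(server)
```

Checking the full request before taking anything from the pool is a design choice in this sketch: it keeps a failed compose from stranding resources, which is the very problem composability sets out to solve.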

HPE more or less pioneered this with its Synergy composable systems, organised around racks of its blade servers and storage. A set of startups followed in its footsteps, such as DriveScale (bought by Twitter), GigaIO and Liqid. Western Digital joined the fray with its OpenFlex scheme, and then Fungible entered the scene with specifically designed Data Processing Unit (DPU) chip-level hardware. Nvidia is also making composability waves with its BlueField-2 DPU, or SmartNIC, and its partnership with VMware.

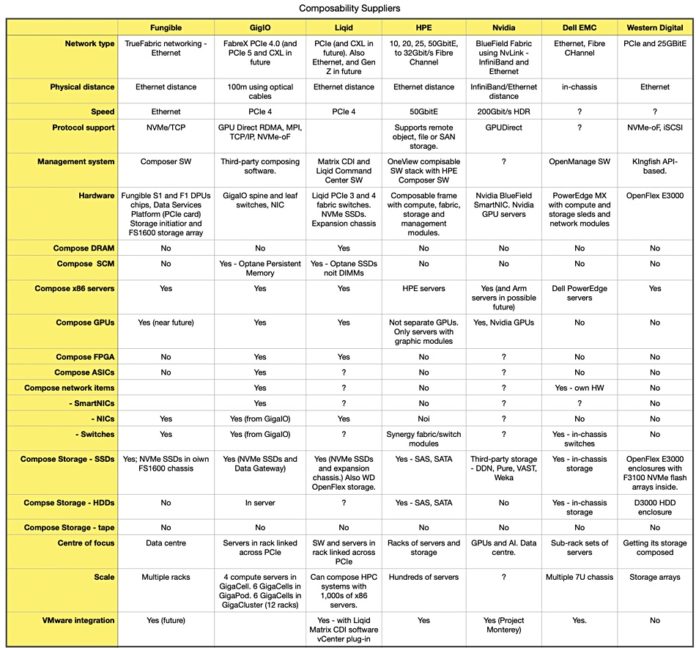

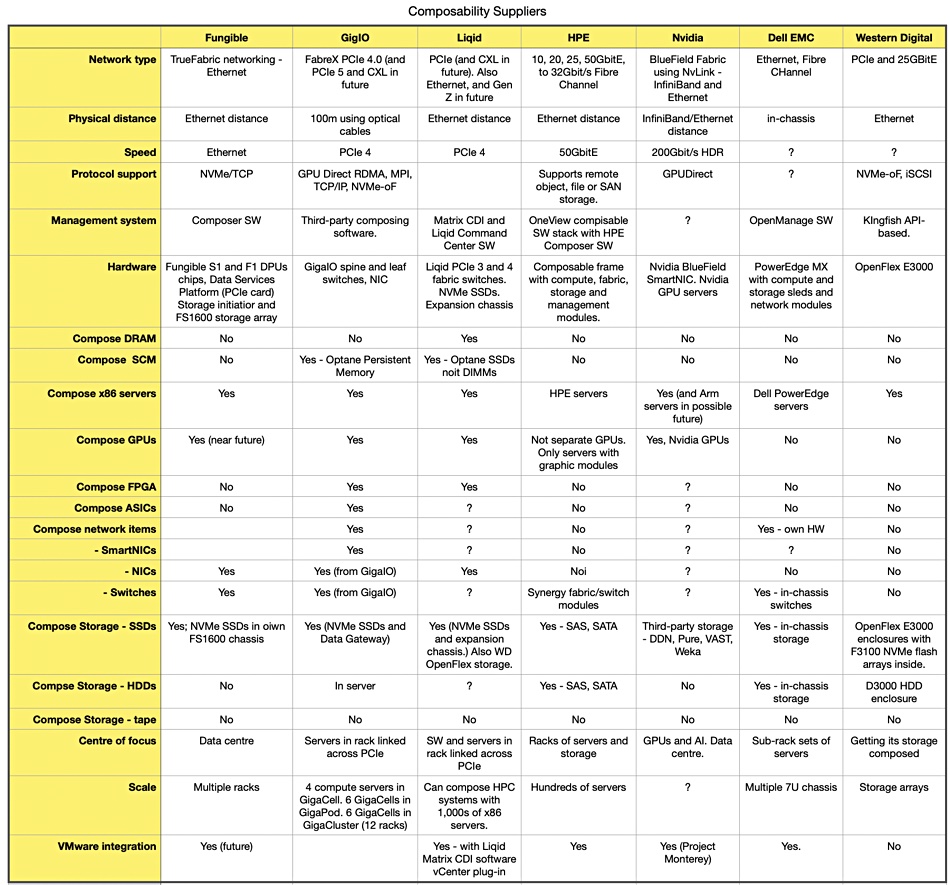

These suppliers can be divided into pro-Ethernet and pro-PCIe camps. A table shows this and summarises their different characteristics:

It notes that PCIe is evolving towards the CXL interconnect which can link pools of DRAM to servers.

Composing and invisibility

In order to bring an element into a composed system you have to tell it and the other elements that they are now all inside a single composed entity. They then have to work together as they would if they were part of a fixed physical piece of hardware.

If the hardware elements are linked across Ethernet, then some server components can’t be seen by the composing software because there is no direct Ethernet connection to them. The obvious items here are DRAM and Optane Persistent Memory (DIMMs), but FPGAs, ASICs and sundry hardware accelerators are also invisible over Ethernet.

Fungible, HPE and Nvidia are in the Ethernet camp. GigaIO and Liqid are in the PCIe camp. The Fungible crew firmly believe Ethernet will win out, but the founders do come from a datacentre networking background at Juniper.

Nvidia has a strong focus on composing systems for the benefit of its GPUs and is still exploring the scope of its composability, with VMware’s ESXi hypervisor running on its SmartNICs and managing the host x86 server into which they are plugged. Liqid and GigaIO believe in composability being supplied by suppliers who are not server or GPU suppliers, as does Fungible.

Liqid has made great progress in the HPC/supercomputer market, while the rumour is that Fungible is doing well in the large enterprise/hyperscaler space. GigaIO says it owns the IP to make native PCIe into a routable network, enabling DMA from server to server throughout the rack. It connects servers over PCIe so that large numbers and multiple types of accelerators can be composed to multiple nodes, getting around BIOS limitations and taking compute traffic off the congested Ethernet storage network.

Dell EMC has its MX7000 chassis with servers composed inside it, but this is at odds with Dell supporting Nvidia BlueField-2 SmartNICs in its storage arrays, HCI systems and servers. We think that there is a chance the MX7000 could get BlueField SmartNIC support at some point.

The composability product space is evolving fast and customers have to make fairly large bets if they decide to buy systems. It seems to us here at Blocks & Files that the PCIe or Ethernet decision is going to become more and more important, and we can’t see a single winner emerging.