Composability supplier GigaIO has updated its FabreX software to group its rackscale pooled accelerator resource units into pods of six cells and clusters of six pods, making up a 12-rack monster composable resource for high-end HPC and AI workloads.

GigaIO extends a PCIe Gen 4 bus out from servers, and groups accelerators (FPGAs, ASICs, GPUs and DPUs) and Optane and other NVMe SSD storage into resource pools, called GigaCells, which are usable by any or all of the servers hooked up to the PCIe 4 link.

Alan Benjamin, GigaIO’s CEO, said in a statement: “With our revolutionary technology, a true rack-scale system can be created with only PCIe as the network. The implication for HPC and AI workloads, which consume large amounts of accelerators and high-speed storage like Intel Optane SSDs to minimise time to results, is much faster computation, and the ability to run workloads which simply would not have been possible in the past.”

Components such as Intel Optane SSDs continue to communicate over native PCIe (and CXL in the future), as they would if they were still plugged into the server motherboard, for the lowest possible latency and highest performance.

Instead of running several networks and having a so-called sea of NICs, HPC and AI data centre managers use the single PCIe Gen 4 fabric, composed of switches and PCIe NICs from GigaIO, to interconnect servers and the pooled resources.

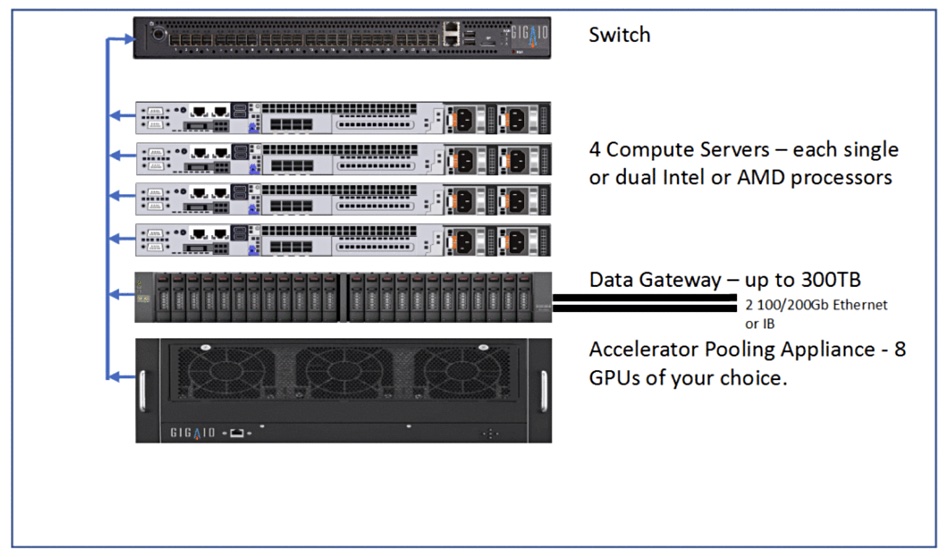

The basic element in GigaIO’s scheme is a GigaCell, which consists of third-party compute servers, a top-of-rack GigaIO switch, a storage unit (called a Data Gateway in the diagram below), and an Accelerator Appliance containing GPUs, FPGAs, ASICs or DPUs, or even a GPU server such as Nvidia’s HGX A100.

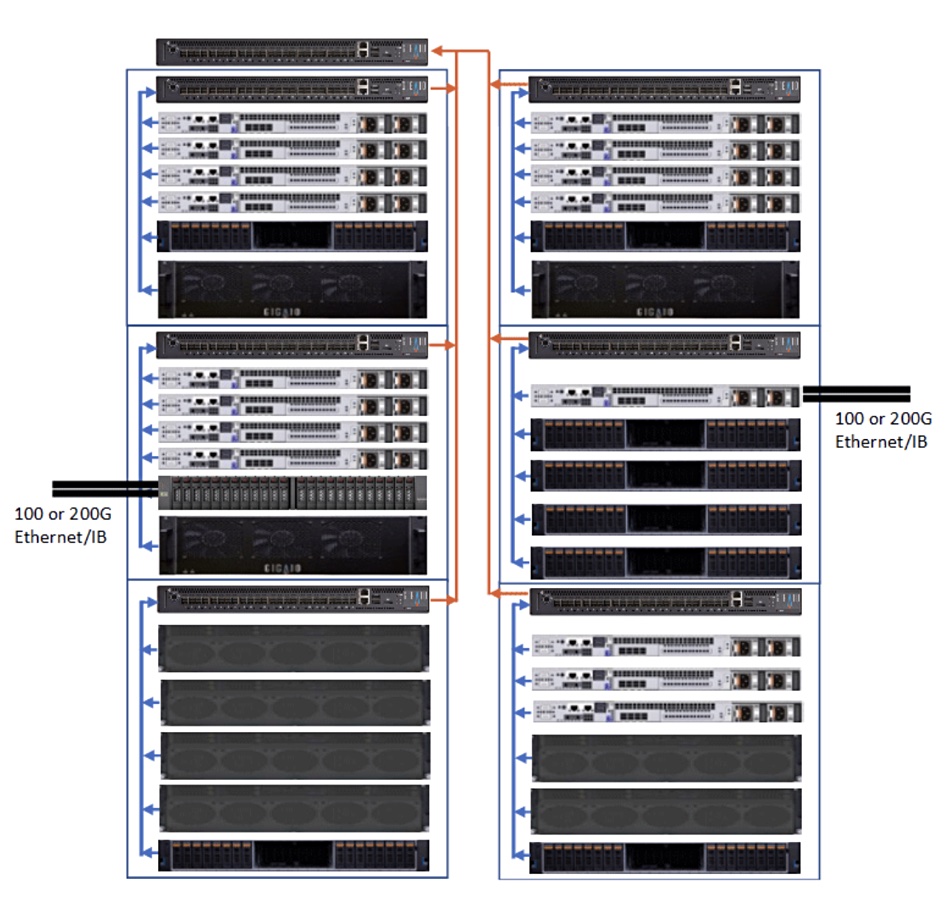

FabreX v2.2 enables up to six GigaCells to be grouped into a GigaPod. GigaIO said all the resources inside the GigaPod are connected by the FabreX universal fabric, transforming the entire GigaPod into what GigaIO calls one composable unit of compute.

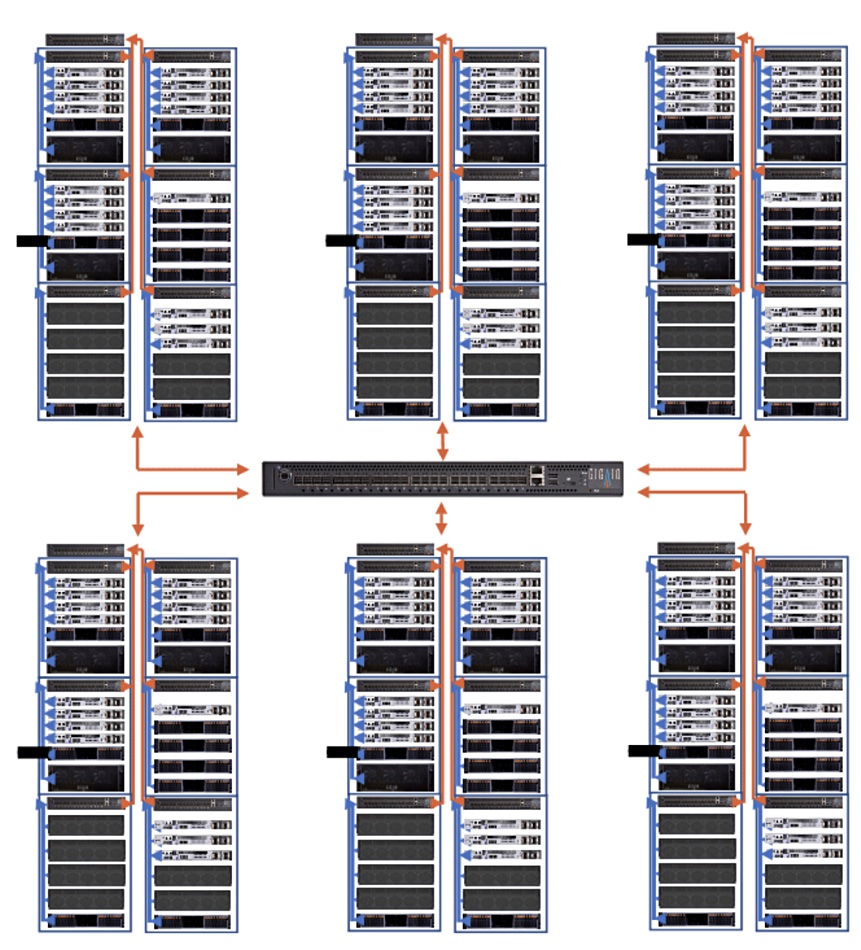

The system can be enlarged for even bigger workloads: up to six GigaPods can be aggregated into a GigaCluster, with cascaded and interleaved switches. Such a cluster can run to 12 x 42-inch racks within a 100m distance using optical cables.
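The grouping limits can be sketched in a few lines of Python. This is purely an illustration of the six-cells-per-pod and six-pods-per-cluster ceilings described above; the class and function names are hypothetical and are not part of FabreX or any GigaIO software.

```python
from dataclasses import dataclass, field
from typing import List

# Limits as stated in the article; everything else here is illustrative.
MAX_CELLS_PER_POD = 6
MAX_PODS_PER_CLUSTER = 6


@dataclass
class GigaPod:
    """Hypothetical model of a pod: a bounded list of cell names."""
    cells: List[str] = field(default_factory=list)

    def add_cell(self, name: str) -> None:
        if len(self.cells) >= MAX_CELLS_PER_POD:
            raise ValueError("a GigaPod holds at most six GigaCells")
        self.cells.append(name)


@dataclass
class GigaCluster:
    """Hypothetical model of a cluster: a bounded list of pods."""
    pods: List[GigaPod] = field(default_factory=list)

    def add_pod(self, pod: GigaPod) -> None:
        if len(self.pods) >= MAX_PODS_PER_CLUSTER:
            raise ValueError("a GigaCluster holds at most six GigaPods")
        self.pods.append(pod)

    def cell_count(self) -> int:
        # Total number of cells across every pod in the cluster.
        return sum(len(pod.cells) for pod in self.pods)


def build_full_cluster() -> GigaCluster:
    """Fill a cluster to the stated maximum of pods and cells."""
    cluster = GigaCluster()
    for p in range(MAX_PODS_PER_CLUSTER):
        pod = GigaPod()
        for c in range(MAX_CELLS_PER_POD):
            pod.add_cell(f"pod{p}-cell{c}")
        cluster.add_pod(pod)
    return cluster
```

Calling `build_full_cluster().cell_count()` gives the upper bound on GigaCells in one GigaCluster implied by the two limits.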

The PCIe Gen 4 fabric is built with GigaIO spine and leaf switches. The actual composing of systems is carried out by off-the-shelf third-party software, available from a number of vendors, to avoid software lock-in.

GigaIO says workloads run faster, as if they were using components inside one server, but harness the power of many nodes, all communicating within one seamless universal fabric. Leaf and spine, dragonfly and other scale-out network topologies are fully supported.

GigaIO quotes Addison Snell of Intersect360 Research as confirming the need for a composable universal fabric: “With analytics, AI, and new technologies to consider, organisations are finding their IT infrastructure needs to span new dimensions of scalability: across different workloads, incorporating new processing and storage options, following multiple standards, at full performance. The data-centric composability of FabreX is aimed at solving this challenge, now and into the future.”

Comment

GigaIO is competing in the data centre composability stakes with Dell EMC (MX7000), Fungible, HPE (Synergy), Liqid, and Nvidia. In our view GigaIO and Liqid are contenders in this space as both their offerings centre on the PCIe bus.

GigaIO addresses composability from an HPC and networking point of view, with customers buying into its switches and NICs, although customers must source the composability software from third parties. It may need system integrator partners to sell complete systems to enterprises.