Samsung has announced a high bandwidth memory (HBM) chip with embedded AI that is designed to accelerate compute performance for high performance computing and large data centres.

The AI technology is called PIM – short for ‘processing-in-memory’. Samsung’s HBM-PIM design delivers faster AI data processing, as data does not have to move to the main CPU for processing. According to the company, no general system hardware or software changes are required to use the technology.

Samsung Electronics SVP Kwangil Park said in a statement: “Our… HBM-PIM is the industry’s first programmable PIM solution tailored for diverse AI-driven workloads such as HPC, training and inference. We plan to build upon this breakthrough by further collaborating with AI solution providers for even more advanced PIM-powered applications.”

Several AI system partners, including Argonne National Laboratory, are testing Samsung’s HBM-PIM inside AI accelerators, and validation is expected to be completed by July.

Rick Stevens, Argonne Associate Laboratory Director, said in prepared remarks: “HBM-PIM design has demonstrated impressive performance and power gains on important classes of AI applications, so we look forward to working [with Samsung] to evaluate its performance on additional problems of interest to Argonne National Laboratory.”

High Bandwidth Memory

Generally, today’s servers have DDR4 memory channels that connect memory DIMMs to a processor. This connection may be a bottleneck in memory-intensive processing. High bandwidth memory is designed to avoid that bottleneck.

High Bandwidth Memory involves layering memory dies in a stack above a logic die, and connecting the combined stack to a CPU or GPU through an ‘interposer’. This is different from a classic von Neumann architecture setup, which separates memory and the CPU. So how does this difference translate into performance?

Let’s compare a DDR4 DIMM with Samsung’s Aquabolt 8GB HBM2, launched in 2018, which incorporates eight stacked 8Gbit HBM2 dies. A DDR4 DIMM offers up to 256GB of capacity and a data rate of up to 50GB/sec. The Aquabolt 8GB HBM2 provides 307.2GB/sec of bandwidth, about six times that of DDR4, at a pin speed of 2.4Gbit/s.
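The bandwidth figure follows from the pin speed. As a rough sketch, assuming the standard 1,024-bit interface width of an HBM2 stack (a detail not stated above), the arithmetic can be checked in a few lines of Python. The function and constant names are illustrative only.

```python
# Peak bandwidth of one HBM2 stack, derived from its per-pin data rate.
# Assumption (not from the article): an HBM2 stack exposes a 1,024-bit interface.
HBM2_INTERFACE_WIDTH_BITS = 1024
AQUABOLT_PIN_SPEED_GBIT_S = 2.4    # per-pin speed quoted in the article
DDR4_DIMM_BANDWIDTH_GB_S = 50.0    # "up to" figure quoted in the article


def peak_bandwidth_gb_s(pin_speed_gbit_s: float, width_bits: int) -> float:
    """Return peak bandwidth in GB/s: pins x Gbit/s per pin, divided by 8 bits per byte."""
    return pin_speed_gbit_s * width_bits / 8


hbm2_bandwidth = peak_bandwidth_gb_s(AQUABOLT_PIN_SPEED_GBIT_S, HBM2_INTERFACE_WIDTH_BITS)
speedup = hbm2_bandwidth / DDR4_DIMM_BANDWIDTH_GB_S

print(f"HBM2 stack: {hbm2_bandwidth:.1f} GB/s")   # 307.2 GB/s
print(f"Ratio to DDR4 DIMM: {speedup:.1f}x")      # 6.1x
```

The ratio of roughly 6.1 matches the "six times" figure, although it compares a peak HBM2 number with an upper-bound DDR4 number rather than measured throughput.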

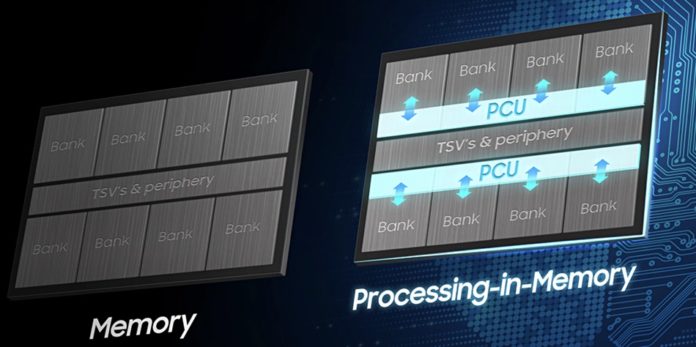

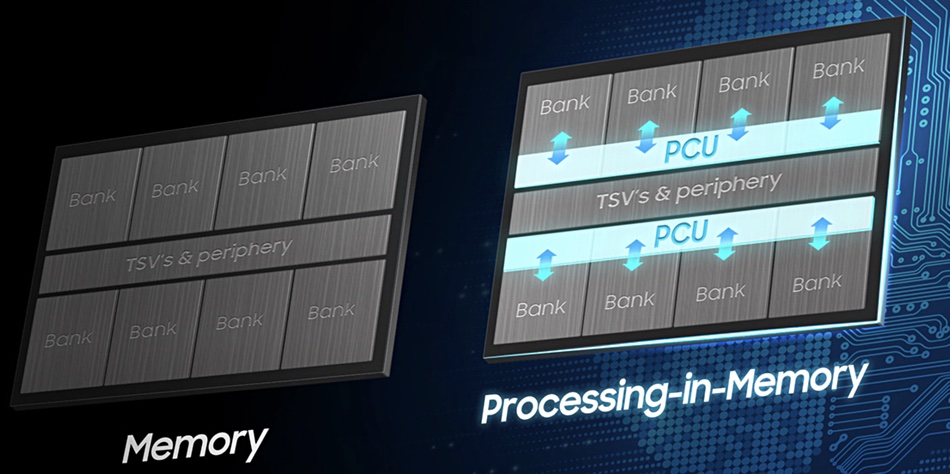

Samsung’s new HBM-PIM is faster again. This design embeds a programmable computing unit (PCU) inside each memory bank. This is a “DRAM-optimised AI engine that forms a storage sub-unit, enabling parallel processing and minimising data movement”. The engine performs half-precision (16-bit) binary floating point computations. Because the PCU embedded in each bank takes up space on the die, the memory die loses some capacity.
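To give a feel for what half precision means in practice, here is a small sketch using Python's standard `struct` module, whose `e` format code packs IEEE 754 binary16 values. It illustrates the number format only. It says nothing about how the PCU itself is implemented, and the `to_half` helper is a hypothetical name.

```python
import struct


def to_half(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (binary16) value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


print(to_half(0.1))      # 0.0999755859375: only about three decimal digits survive
print(to_half(1.0001))   # 1.0: the step between values near 1 is about 0.001
print(to_half(65504.0))  # 65504.0: the largest finite half-precision value
```

The reduced precision is usually acceptable for neural network workloads, and halving the width of each operand compared with single precision means less data to store and move.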

An Aquabolt design incorporating HBM-PIM tech delivers more than twice the performance of HBM2 for image classification, translation and speech recognition, while reducing energy consumption by more than 70 per cent. (There are several HBM technology generations: HBM1; HBM2, which comes in versions 1 and 2; and HBM2 Extended (HBM2E).)

A Samsung paper on the chip, “A 20nm 6GB Function-In-Memory DRAM, Based on HBM2 with a 1.2TFLOPS Programmable Computing Unit Using Bank-Level Parallelism, for Machine Learning Applications,” will be presented at the February 13-22 virtual International Solid-State Circuits Conference.