GPU maker Nvidia is buying Arm Holdings from SoftBank for $40bn, according to numerous reports, a move that takes it into the Data Processing Unit (DPU) business.

It is likely Nvidia will accelerate Arm’s push into server chips. We can expect to see a stronger presence of Arm CPUs in storage arrays and HCI systems in the future. This move gives Nvidia a presence in the storage hardware business and provides future competition for Fungible, Pensando and other DPU suppliers.

Wells Fargo senior analyst Aaron Rakers told subscribers: “We believe the combination of Nvidia and Arm will leave investors to consider the possibility that this combination could meaningfully reshape the semiconductor landscape over the next decade (especially in terms [of] the data centre market).”

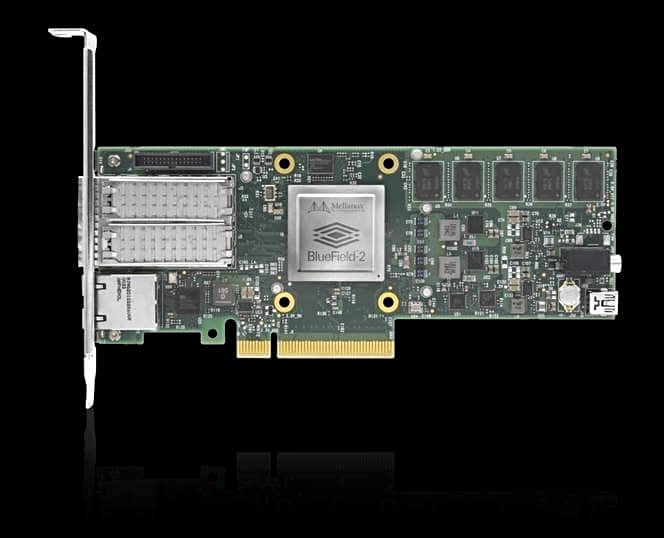

Rakers added: “Nvidia has highlighted the view that the industry is moving toward a three layered compute architecture – Centralized Processing Units (CPUs), Accelerators (GPUs, FPGAs, custom ASICs, etc.), and Data Processing Units (DPUs). DPUs, which NVIDIA views as a programmable processor or SoC that ‘combines industry standard, high-performance, software programmable, multi-core CPU, typically based on the widely-used Arm architecture, tightly coupled with other SoC components’.” (Rakers’ emphasis.)

Three major pillars of computing

Nvidia stated in a blog earlier this year: “The DPU, or data processing unit, has become the third member of the data centric accelerated computing model. ‘This is going to represent one of the three major pillars of computing going forward,’ Nvidia CEO Jensen Huang said during a talk earlier this month.”

Nvidia sees the DPU as a data mover: “The CPU is for general purpose computing, the GPU is for accelerated computing and the DPU, which moves data around the data center, does data processing.”

The DPU features “a rich set of flexible and programmable acceleration engines that offload and improve applications performance for AI and Machine Learning, security, telecommunications, and storage, among others.”

Nvidia argues that DPUs should not be used for compute or, by extension, for storage-specific compute. Some DPU suppliers “make the mistake of focusing solely on the embedded CPU to perform data path processing. … This isn’t competitive and doesn’t scale, because trying to beat the traditional x86 CPU with a brute force performance attack is a losing battle.”

In fact, the embedded CPU “should be used for control path initialisation and exception processing, nothing more.”