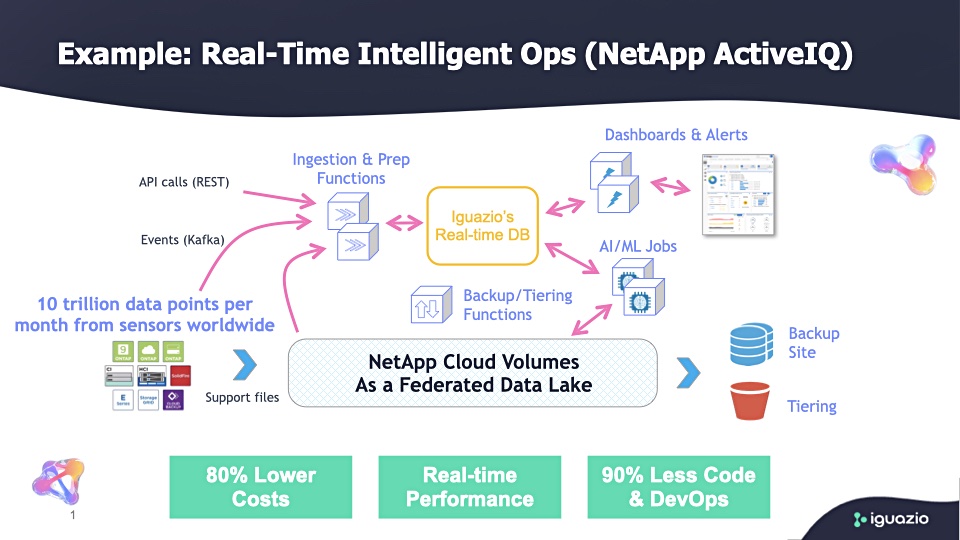

Each month, NetApp’s Active IQ handles up to 10 trillion data points, fed by storage arrays deployed at customer sites. Data volumes are growing.

Active IQ uses various AI and machine learning techniques to analyse the data and sends predictive maintenance messages to the arrays. The service has a lot of hardware capacity at its disposal, including ONTAP AI systems, twin Nvidia GPU servers, fast Mellanox switches and ONTAP all-flash arrays. But what about orchestrating and managing the AI data pipeline in those arrays? This is where Iguazio comes in.

NetApp is using Iguazio software for end-to-end machine learning pipeline automation. According to Iguazio, this enables real-time MLOps (machine learning operations) on incoming data streams.

NetApp says the Iguazio-based Active IQ system has cut storage capacity requirements 16-fold, reduced operating costs by 50 per cent and needs fewer compute nodes. New AI services for Active IQ are also developed at least six times faster.

Unsurprisingly, NetApp people are enthusiastic. Shankar Pasupathy, NetApp chief architect for Active IQ, said in a prepped quote: “Iguazio reduces the complexities of MLOps at scale and provides us with an end-to-end solution for the entire data science lifecycle with enterprise support, which is exactly what we were after.”

NetApp has now partnered with Iguazio to sell their joint data science ONTAP AI solution to enterprises worldwide. Iguazio also has a co-sell deal with Microsoft for its software running on Azure, and a reference architecture with its software on Dell EMC hardware. It is carving out a leading role as an enterprise AI pipeline management software supplier.

Let’s take a closer look at the Active IQ setup.

Hadoop out of the loop

NetApp needs real-time speed, scalability to cope with the massive and growing streams of data, and the ability to run Active IQ on-premises and in the cloud. It also wants Active IQ to learn more about customer array operations and get better at predictive analytics.

NetApp’s initial choice for handling and analysing the incoming Active IQ data was a data warehouse and Hadoop data lake. But this setup was too slow and too complex, and it was hard to scale.

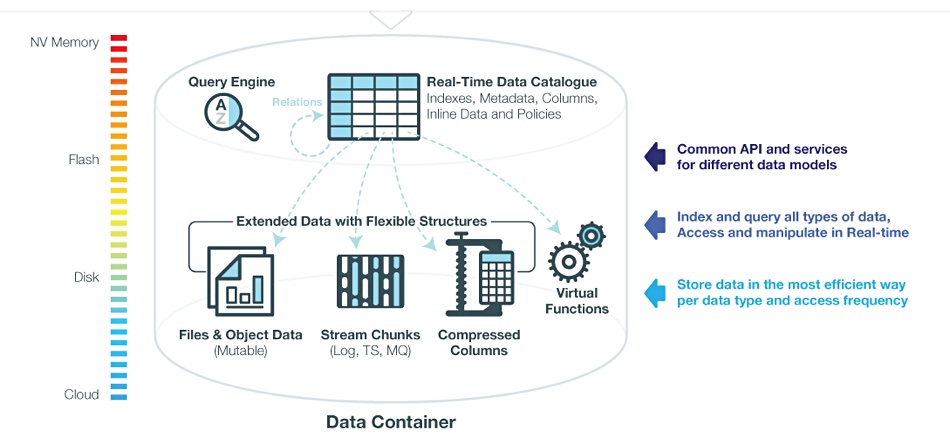

The fundamental issue is that real-time processing involves multiple stages with many interim data sets and types, and various kinds of processing entities such as containers and serverless functions.

This complexity means careful data handling is required. Get it wrong and IO requests multiply and multiply some more, overwhelming the fastest storage media.

AI pipeline

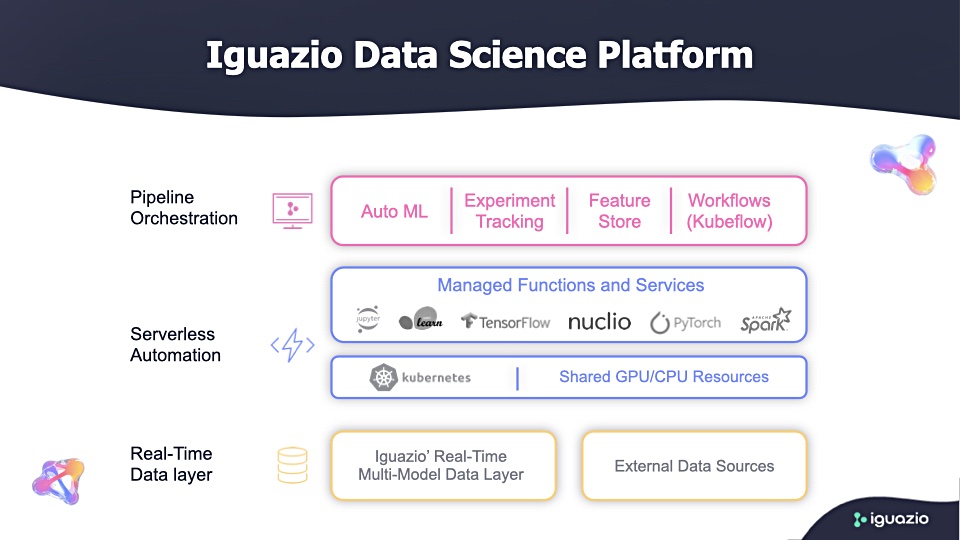

Iguazio’s speciality is organising massive amounts of data and metadata so that AI techniques can be applied to them in real time. Its software provides a data abstraction layer on which these processing entities can run.

An AI pipeline involves many stages:

- Raw data ingest (streams)

- Pre-processing (decompression, filtering and normalization)

- Transformation (aggregation and dimension reduction)

- Analysis (summary statistics and clustering)

- Modeling (training, parameter estimation and simulation)

- Validation (hypothesis testing and model error detection)

- Decision making (forecasting and decision trees)
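To make the stage list concrete, here is a minimal, single-process Python sketch that pushes a tiny latency data set through one toy step per stage. Everything in it (the `Sample` record, the `id,t,latency` line format, the function names and the straight-line trend model) is a hypothetical illustration, not Iguazio’s or NetApp’s code. In a production pipeline each stage would typically run as its own container or serverless function, with interim data sets passed between them.

```python
import math
import zlib
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

Series = Dict[str, List[Tuple[float, float]]]  # array ID -> [(time, latency_ms)]


@dataclass
class Sample:
    array_id: str
    t: float           # sample time (hypothetical units)
    latency_ms: float  # observed IO latency


def ingest(chunks: Iterable[bytes]) -> bytes:
    # Raw data ingest: collect compressed chunks as they arrive on a stream.
    return b"".join(chunks)


def preprocess(raw: bytes) -> List[Sample]:
    # Pre-processing: decompress, parse "id,t,latency" lines, normalise IDs,
    # and filter out malformed rows and negative latencies.
    samples = []
    for line in zlib.decompress(raw).decode("utf-8").splitlines():
        parts = line.split(",")
        if len(parts) != 3:
            continue
        try:
            t, latency = float(parts[1]), float(parts[2])
        except ValueError:
            continue
        if latency >= 0:
            samples.append(Sample(parts[0].strip().upper(), t, latency))
    return samples


def transform(samples: List[Sample]) -> Series:
    # Transformation: aggregate samples into one time series per array.
    series: Series = {}
    for s in samples:
        series.setdefault(s.array_id, []).append((s.t, s.latency_ms))
    return series


def analyse(series: Series) -> Dict[str, float]:
    # Analysis: a summary statistic (mean latency) for each array.
    return {a: sum(y for _, y in pts) / len(pts) for a, pts in series.items()}


def fit(pts: List[Tuple[float, float]]) -> Tuple[float, float]:
    # Modeling: ordinary least-squares line, latency = slope * t + intercept.
    n = len(pts)
    mt = sum(t for t, _ in pts) / n
    my = sum(y for _, y in pts) / n
    var = sum((t - mt) ** 2 for t, _ in pts)
    slope = 0.0 if var == 0 else sum((t - mt) * (y - my) for t, y in pts) / var
    return slope, my - slope * mt


def validate(pts: List[Tuple[float, float]], model: Tuple[float, float]) -> float:
    # Validation: root-mean-square error of the fitted line on its own data.
    slope, intercept = model
    return math.sqrt(sum((y - (slope * t + intercept)) ** 2 for t, y in pts) / len(pts))


def decide(models: Dict[str, Tuple[float, float]], horizon: float, limit_ms: float) -> List[str]:
    # Decision making: flag arrays whose forecast latency at `horizon` exceeds a limit.
    return sorted(a for a, (m, b) in models.items() if m * horizon + b > limit_ms)


text = "a1,0,2.0\nA1,1,3.0\nA1,2,4.0\nB2,0,2.0\nB2,1,2.0\nbad line\nB2,2,-1\n"
raw = ingest([zlib.compress(text.encode("utf-8"))])
series = transform(preprocess(raw))
models = {a: fit(pts) for a, pts in series.items()}
errors = {a: validate(pts, models[a]) for a, pts in series.items()}
print(analyse(series))                           # {'A1': 3.0, 'B2': 2.0}
print(decide(models, horizon=10, limit_ms=8.0))  # ['A1']
```

Keeping each stage a separate function with a plain input and output mirrors the pipeline structure: any one stage (for example, the straight-line fit) could be replaced with something more sophisticated without touching the others.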

This multi-step pipeline involves multiple cloud-native applications, stateful and stateless services, several data stores, and the selection and filtering of data into subsequent stores.

NetApp and Iguazio software

Iguazio’s unified data model is aware of the pipeline stages and the need to process metadata to speed data flow. The software runs in a cluster of servers, with DRAM providing an in-memory metadata database, and NVMe drives holding interim data sets.
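The benefit of holding metadata in memory can be illustrated with a toy store. In this sketch, which is a hypothetical illustration and not Iguazio’s design or API, a Python dictionary plays the role of the in-memory metadata database and a temporary directory stands in for the NVMe drives. A query such as "which transform-stage data sets have at least two rows?" is answered without opening any file; only fetching a payload counts as a disk read.

```python
import os
import tempfile
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Meta:
    path: str   # where the interim data set lives on the (simulated) NVMe tier
    stage: str  # pipeline stage that produced it
    rows: int   # row count, kept so queries need not open the file


class InterimStore:
    """Hypothetical store: metadata in an in-memory dict, data sets as files."""

    def __init__(self, root: str) -> None:
        self.root = root
        self.meta: Dict[str, Meta] = {}  # stands in for the in-memory metadata DB
        self.disk_reads = 0              # counts payload reads from the file tier

    def put(self, name: str, stage: str, rows: List[str]) -> None:
        # Write the payload to a file and record its metadata in memory.
        path = os.path.join(self.root, name + ".csv")
        with open(path, "w", encoding="utf-8") as f:
            f.write("\n".join(rows))
        self.meta[name] = Meta(path, stage, len(rows))

    def find(self, stage: str, min_rows: int = 0) -> List[str]:
        # Answered purely from memory: no file is opened.
        return sorted(n for n, m in self.meta.items()
                      if m.stage == stage and m.rows >= min_rows)

    def get(self, name: str) -> List[str]:
        # Only fetching the payload itself touches the file tier.
        self.disk_reads += 1
        with open(self.meta[name].path, encoding="utf-8") as f:
            return f.read().splitlines()


with tempfile.TemporaryDirectory() as root:
    store = InterimStore(root)
    store.put("raw-001", "ingest", ["a,1", "b,2", "c,3"])
    store.put("agg-001", "transform", ["a,2.0"])
    store.put("agg-002", "transform", ["b,1.5", "c,4.0"])
    matches = store.find("transform", min_rows=2)  # ['agg-002']
    reads_after_query = store.disk_reads           # 0: the query used metadata only
    rows = store.get(matches[0])                   # ['b,1.5', 'c,4.0']
```

Without such an index, the same query would have to open every interim file just to inspect it, which is one way IO requests multiply as the number of data sets grows.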

NetApp uses Trident, a dynamic storage orchestrator for containers that integrates with Docker and Kubernetes and provisions volumes on NetApp storage. Iguazio integrates with Trident, linking a Kubernetes cluster and serverless functions to NetApp’s NFS and Cloud Volumes Storage. Iguazio is also compatible with Kubeflow 1.0, the machine learning software that NetApp uses.

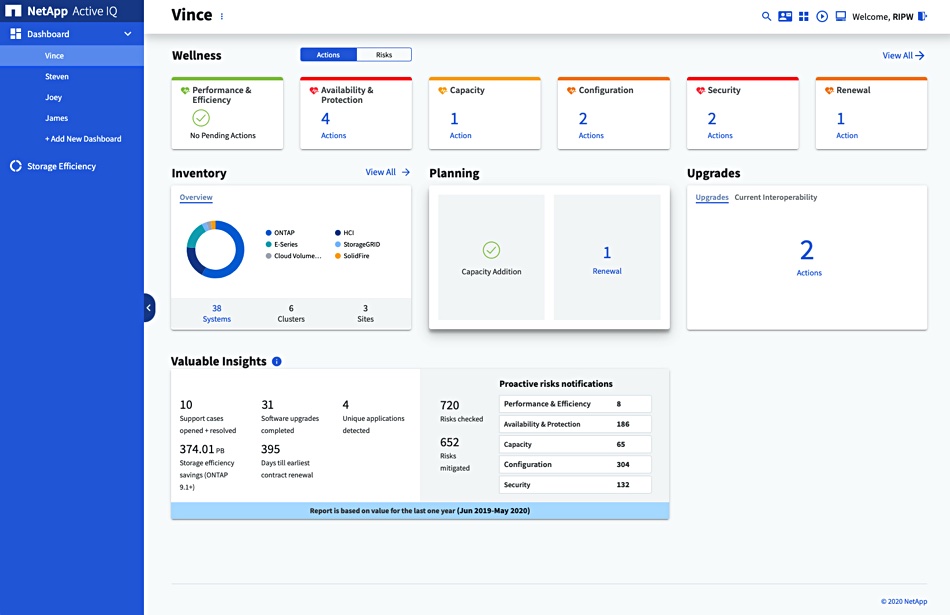

Iguazio detects patterns in the data streams and relates them to specific workloads on specific array configurations. It identifies actual and potential anomalies, such as a performance slowdown, pending capacity shortage, or hardware defect.

Then it generates actions, such as sending alert messages to the originating customer system and to all customers whose systems are likely to experience similar anomalies. It does this in real time, enabling NetApp systems to take automated action or sysadmins to take manual action.
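The article does not say which models Active IQ uses, so the following is only an illustration of the detect-then-alert flow, with simple statistical rules swapped in for machine learning: a rolling z-score test for a performance slowdown, linear extrapolation for a pending capacity shortage, and a fan-out that alerts the originating system plus every other system sharing its configuration. `ArrayInfo`, `SlowdownDetector`, `days_until_full`, `alert_targets` and the configuration strings are all hypothetical.

```python
import statistics
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple


@dataclass
class ArrayInfo:
    system_id: str
    customer: str
    config: str  # hypothetical configuration key, e.g. model plus software version


class SlowdownDetector:
    """Flag latency samples more than k standard deviations above a rolling mean."""

    def __init__(self, window: int = 20, k: float = 3.0, warmup: int = 5) -> None:
        self.history: Deque[float] = deque(maxlen=window)
        self.k = k
        self.warmup = warmup  # samples needed before any judgement is made

    def update(self, latency_ms: float) -> bool:
        values = list(self.history)
        if len(values) < self.warmup:
            self.history.append(latency_ms)
            return False
        mean = statistics.fmean(values)
        sd = statistics.pstdev(values)
        anomalous = latency_ms > mean + self.k * sd
        if not anomalous:
            self.history.append(latency_ms)  # keep outliers out of the baseline
        return anomalous


def days_until_full(used_tb: List[float], capacity_tb: float) -> float:
    # Linear extrapolation from at least two daily usage samples; inf if not growing.
    growth = (used_tb[-1] - used_tb[0]) / (len(used_tb) - 1)
    return float("inf") if growth <= 0 else (capacity_tb - used_tb[-1]) / growth


def alert_targets(origin: ArrayInfo, fleet: List[ArrayInfo]) -> List[Tuple[str, str]]:
    # Alert the originating system first, then every other system with the same config.
    peers = [a for a in fleet if a.config == origin.config and a.system_id != origin.system_id]
    return [(origin.customer, origin.system_id)] + [(a.customer, a.system_id) for a in peers]


detector = SlowdownDetector(window=10, k=3.0)
flags = [detector.update(x) for x in [2.0, 2.1, 1.9, 2.0, 2.2, 2.0, 9.5]]
print(flags[-1])                                         # True
print(days_until_full([40.0, 42.0, 44.0, 46.0], 100.0))  # 27.0
fleet = [
    ArrayInfo("sys-1", "cust-A", "model-X/v1"),
    ArrayInfo("sys-2", "cust-B", "model-X/v1"),
    ArrayInfo("sys-3", "cust-C", "model-Y/v2"),
]
print(alert_targets(fleet[0], fleet))  # [('cust-A', 'sys-1'), ('cust-B', 'sys-2')]
```

Matching peers on an exact configuration key is the simplest possible notion of "similar"; a fuller system would more plausibly score similarity across workload and configuration features before deciding whom to alert.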