Lightbits Labs, an all-flash array startup, claims server systems need external SANs to use flash memory properly. Without one, it says, users may get only 15 to 25 per cent flash capacity utilisation, and 50 to 85 per cent of their flash spend is wasted.

—-

Update 9 April, 2020: Lightbits has contacted us since publication of this article to say it was making a general point about servers and that its observations do not apply to VMware vSAN. A spokesperson said: “During the IT Press Tour call, Kam Eshghi, our Chief Strategy Officer, stated that DAS is severely under-utilized. Your logical conclusion was that if DAS is under-utilized, and HCI uses DAS, that it makes HCI under-utilized. While we don’t disagree with the logic, it is essentially apples and oranges for the point Kam was making.

“To clarify, HCI software (sharing both CPU and storage [DAS] resources across a cluster) was NOT included in Kam’s statement about utilization. This is a very important distinction: Kam was referring to DAS with no software layer on top to allow for sharing. Where there is no software sharing layer, the very serious underutilization of DAS applies. With the sharing layer like HCI, utilization is greatly improved.

“Kam’s statement was only about DAS in a non-shared environment, vs. a shared disaggregated solution (such as Lightbits). This incident shows us we need to do better in explaining this important subtlety as we can see now how the wrong conclusions can be drawn if we do not spell it out clearly.”

—-

VMware is developing a TCP driver which will enable Lightbits’ SAN array to integrate with vSAN.

At a press briefing last week, Kam Eshghi, VP of strategy and business development at Lightbits Labs, told us: “We can be disaggregated storage for HCI … and can serve multiple vSAN clusters.”

“VMware is developing in-line TCP drivers. [They’re] not in production yet. … More to come… The same applies to other HCI offerings. More details this summer.”

Lightbits Labs technology

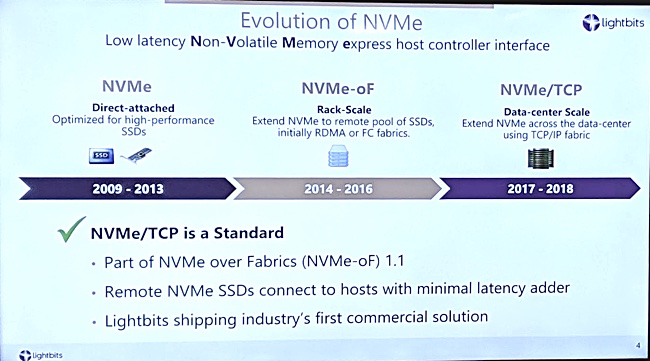

Lightbits has built an NVMe-oF all-flash array that uses TCP/IP as the transport medium, and runs LightOS, its own operating system.

Eshghi said the first NVMe-over-Fabrics systems used RDMA over Converged Ethernet (RoCE), which requires costly lossless Ethernet. With NVMe-over-TCP, existing TCP/IP cabling can be used to save costs. This affords NVMe-oF performance and latency, albeit a few microseconds slower than RoCE.

Accessing servers need an operating system with an NVMe/TCP driver and the ability to talk to the SAN as if it is directly-attached storage. For the fastest IO response, Lightbits servers can be fitted with a LightField FPGA accelerator card, using a PCIe slot.

HCI architecture does not like SANs

Why does VMware’s vSAN need Lightbits Labs’ SAN array – or indeed any external SAN? The whole point of hyperconverged infrastructure (HCI) is to remove the complexity of external – aka ‘disaggregated’ – SAN storage and replace it with simpler IT building blocks. Clustered HCI systems combine server, hypervisor, storage and networking into single server-based boxes, and performance and capacity scale out by adding more boxes to the HCI cluster.

As performance needs have grown, HCI cluster nodes have started using flash SSD storage. In the industry’s ceaseless quest to gain more cost-effective and higher-density flash, SSD technology in recent years has progressed from MLC (2bits/cell) flash to TLC (3bits/cell) and QLC (4bits/cell).

According to Lightbits, TLC and QLC flash are problematic when used in bare metal servers, because their SSD endurance – capacity for repeated writes – is much lower than that of the earlier generation MLC. Flash capacity must be managed carefully to prevent needless writes that diminish the SSD’s working life.

SSD controllers incorporate Flash Translation Layer (FTL) software, which translates incoming server IO requests, addressed by logical block address, into units that the SSD can manage, such as pages. The FTL also formats outgoing data into terms that the server can understand and manages the drive’s capacity to minimise writes.
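To make the translation step concrete, here is a minimal sketch of a page-mapped FTL. It is a toy model, not any vendor's implementation: the class name `SimpleFTL`, its fields and the append-only allocation policy are all assumptions chosen for illustration. It shows the basic idea that an overwrite of a logical block lands on a fresh physical page and leaves a stale page behind, which is why careless rewriting consumes flash endurance.

```python
class SimpleFTL:
    """Toy page-mapped FTL (hypothetical): maps logical block addresses to pages."""

    def __init__(self, num_pages):
        self.num_pages = num_pages
        self.mapping = {}      # LBA -> physical page currently holding its data
        self.invalid = set()   # pages holding stale data, waiting to be erased
        self.next_free = 0     # next never-written page (append-only allocation)

    def write(self, lba):
        """Place data for `lba` on a fresh page and return that page number."""
        if self.next_free >= self.num_pages:
            # A real FTL would garbage-collect invalid pages at this point.
            raise RuntimeError("no free pages left")
        old = self.mapping.get(lba)
        if old is not None:
            self.invalid.add(old)  # flash cannot overwrite in place
        page = self.next_free
        self.next_free += 1
        self.mapping[lba] = page
        return page

    def read(self, lba):
        """Return the physical page that currently holds `lba`."""
        return self.mapping[lba]


ftl = SimpleFTL(num_pages=4)
first = ftl.write(10)    # LBA 10 goes to page 0
ftl.write(11)            # LBA 11 goes to page 1
second = ftl.write(10)   # rewriting LBA 10 uses page 2; page 0 becomes stale
```

In this sketch two logical blocks have consumed three physical pages after a single overwrite, which is the kind of extra write activity an FTL tries to keep to a minimum.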

Global Flash Translation Layer

Eshghi said Lightbits arrays manage drive capacity more efficiently by using a global FTL that works across all the SSDs. To preserve the life of smaller drives, IOs can be redirected to drives with the biggest capacity.
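Lightbits did not describe how its global FTL chooses a drive, so the following is only a sketch of the general idea under a simple assumed policy: send each write to the drive in the pool with the most free capacity. The `Drive` class, the `place_write` function and the drive sizes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Drive:
    """Hypothetical model of one SSD in a shared pool."""
    name: str
    capacity_gb: int
    written_gb: int = 0

    @property
    def free_gb(self):
        return self.capacity_gb - self.written_gb


def place_write(drives, size_gb):
    """Assumed policy: route the write to the drive with the most free space."""
    candidates = [d for d in drives if d.free_gb >= size_gb]
    if not candidates:
        raise RuntimeError("pool is full")
    target = max(candidates, key=lambda d: d.free_gb)
    target.written_gb += size_gb
    return target.name


pool = [Drive("small", 1000), Drive("large", 4000)]
placements = [place_write(pool, 500) for _ in range(4)]
```

With these made-up sizes, all four writes land on the larger drive and the smaller one is left untouched. A per-drive FTL cannot make this kind of choice, because it only sees its own drive.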

In a bare metal server or cluster, the SSDs are all directly attached to individual servers, according to Lightbits. The company argues the servers cannot afford the CPU cycles required to run endurance-enhancing FTL routines across all drives. Also, it is impractical to operate a global FTL across all the server nodes’ directly-attached flash storage in a cluster.

Therefore, the only way to manage the flash properly is to put a bunch of it in a disaggregated SAN that is linked to the bare metal server nodes.

Update: This does not apply in the HCI situation, which has a software sharing layer. As Lightbits’ statement above says: “Where there is no software sharing layer, the very serious under-utilization of DAS applies. With the sharing layer like HCI, utilization is greatly improved.”

Eshghi said Lightbits technology works particularly well with cloud-native apps and also NoSQL, in-memory and distributed applications such as Cassandra, MongoDB, MySQL, PostgreSQL, RocksDB and Spark. These apps can all suffer from poor flash utilisation, long recoveries from failed drives and flash endurance issues.

Working with VMware

Lightbits Labs does not claim its fast NVMe-oF storage is necessarily faster than or superior to its competitors’ products. It argues instead that its global FTL is so good that it is worth breaking the general HCI rule of ‘no external storage’ in certain circumstances.

The argument Lightbits makes is strong enough for VMware to work with the company to make Lightbits’ disaggregated SAN work with vSAN.

Eshghi pointed out that the company already has a relationship with VMware parent Dell Technologies, which offers a PowerEdge R740xd server preconfigured with Lightbits software. He said the relationship was strengthening so that Lightbits’ SAN could integrate with VMware’s vSAN.

He also referred us to a demo at VMworld in August 2019 where Lightbits showed how its technology could integrate with vSAN.

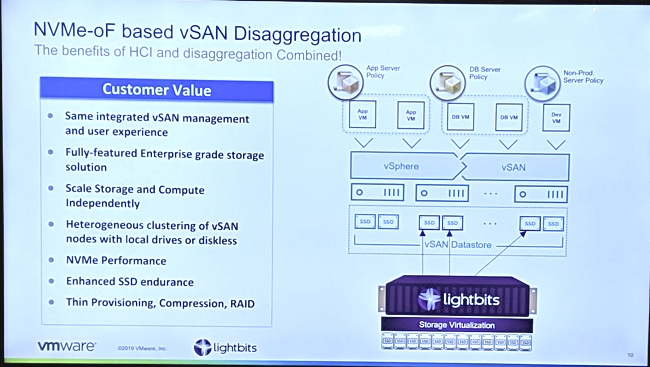

He said VMware is developing an in-line TCP/IP driver for vSAN and showed a slide (above) highlighting Lightbits’ SAN and its integration with vSAN.

From this we infer that Lightbits’ NVMe-oF TCP array will hook directly into a vSAN cluster and provide flash storage for the vSAN nodes.

Lightbits said vSAN users will be able to disaggregate their hyperconverged infrastructure where necessary, and scale storage and compute independently with no change to the vSAN management or user experience. vSAN users could also benefit from Lightbits’ features such as NVMe-oF performance, enhanced SSD endurance, thin provisioning, wirespeed compression and erasure coding for fault tolerance.

Blocks & Files thinks Lightbits is positioning itself alongside HPE Nimble (dHCI), Datrium and NetApp as a disaggregated SAN supplier for HCI schemes. Lightbits implies its edge is TCP/IP integration with VMware’s vSAN and other HCI systems, and its clustered NVMe-oF TCP nodes.