Storage software supplier Minio reckons that the public cloud and fast object storage will ‘squeeze the life out of traditional file and block storage suppliers’.

Chief Marketing Officer Jonathan Symonds blogged: “MapR’s extinction is just the beginning. Cloudera is likely next. There will be others in the coming years as the lifeblood of file and block – data – ends up in object storage buckets.”

For the record, Symonds is anticipating MapR’s extinction. Although the ailing Hadoop software supplier has been silent since missing its self-set July 3 deadline for selling the company or going bust, it has not yet officially crashed and burned.

Minio

Minio was set up in 2014 by CEO Anand Babu Periasamy, Harshavardhana and CFO Garima Kapoor, to develop open source object storage software.

Its S3-compatible software has been downloaded 240 million times, making it the world’s most widely used object storage software.

Symonds was appointed Minio CMO in March 2019. In the same month Minio simplified its logo and refocused its marketing on the software as a high-performance data feed for big data analytics and AI workloads.

Minio software’s high performance

According to a June 2019 blog by Minio engineer Sidhartha Mani, long-term retention has historically been the main use case for object storage. This was “a function of the performance characteristics of legacy object storage.” In other words, it was slow at data access compared to file and block storage.

He said that situation has changed because object storage has become faster, with Minio’s software as his example.

Ok, so Minio’s object storage exhibits high performance. What does that mean? Storage performance can be measured by latency, IOPS and bandwidth. Minio is concentrating on bandwidth, not latency or IOPS, and has blogged about or publicised four tests in the last four weeks:

- Minio S3 with hard disk drive storage,

- Minio S3 with NVMe flash drive storage,

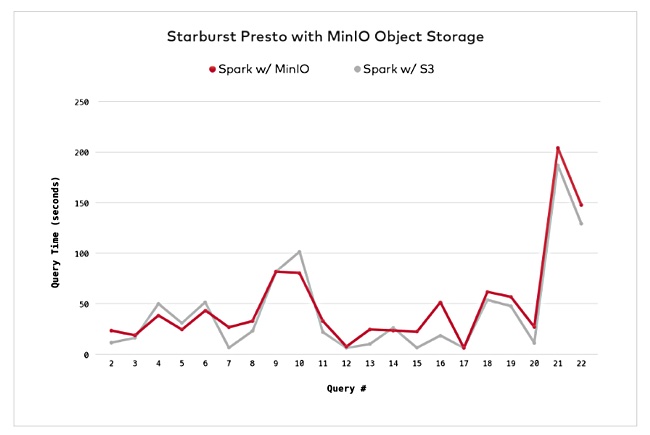

- Presto on Minio vs Amazon S3,

- Apache Spark with Minio back-end vs AWS S3.

The HDD test showed a 16-node Minio cluster achieving 10.81 GB/sec read and 8.57 GB/sec write performance using AWS bare-metal, storage-optimised instances with 25GbitE networking. Minio did not reveal the disk drives used but did say the drives were working full tilt and unable to saturate the network.

The NVMe test was based on the HDD setup but used an 8-node cluster, local NVMe drives and 100GbitE networking. It achieved 38.8 GB/sec read and 36.9 GB/sec write performance, saturating the 100GbitE network.
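To put the two sets of numbers side by side, the aggregate figures can be divided by node count and compared with each node's network line rate. The sketch below does only that arithmetic on the figures quoted above. It assumes decimal units (1 GB/sec = 8 Gbit/sec) and one network link per node, which Minio's write-up may or may not match, and the function names are ours. Client-visible throughput also ignores inter-node traffic and protocol overhead, so the shares shown are a lower bound on link utilisation rather than a check on Minio's saturation claim.

```python
# Back-of-envelope arithmetic on the figures quoted in this article.
# Assumptions (ours, not Minio's): decimal units, one network link per node.

def per_node_gb_per_sec(aggregate_gb_per_sec, nodes):
    """Average client-visible throughput per node, in GB/sec."""
    return aggregate_gb_per_sec / nodes


def link_gb_per_sec(link_gbit_per_sec):
    """Convert a link's line rate from Gbit/sec to GB/sec."""
    return link_gbit_per_sec / 8.0


# name: (aggregate GB/sec, node count, link speed in Gbit/sec)
TESTS = {
    "HDD read": (10.81, 16, 25),
    "HDD write": (8.57, 16, 25),
    "NVMe read": (38.8, 8, 100),
    "NVMe write": (36.9, 8, 100),
}

for name, (aggregate, nodes, link) in TESTS.items():
    per_node = per_node_gb_per_sec(aggregate, nodes)
    share = per_node / link_gb_per_sec(link)
    print(f"{name}: {per_node:.2f} GB/sec per node, "
          f"{share:.0%} of one link's line rate")
```

On these assumptions the NVMe cluster delivers roughly seven times more per node than the HDD cluster, with half as many nodes.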

Presto, Spark and TPC-H

Presto is an open-source, distributed SQL query engine that can combine data from multiple sources. Minio’s Presto test compared Amazon’s S3 and Minio’s object storage software as the data source. Once again the test was run on AWS using bare-metal, storage-optimised instances with NVMe drives and 100GbitE networking.

The TPC-H decision support benchmark was used with a 1,000 GB scaling factor and there were two runs: one using Presto-Minio and the other Presto-AWS S3.

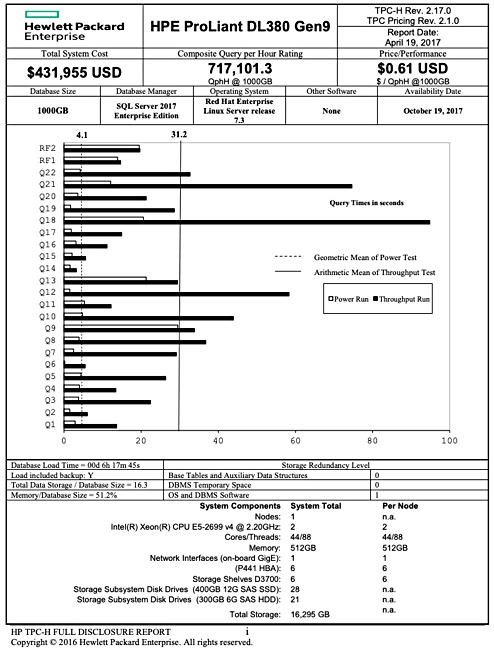

This benchmark has generally been used to test server performance, not the performance of a storage back-end. The newest results date from 2017 and it is gradually falling out of favour as distributed system analytics are favoured more than single server analytics/decision support.

S3 was slightly better than Minio as a Presto data source. These results are not presented in the standard TPC-H style (witness this HPE ProLiant DL380 Gen 9 full disclosure), nor are they available on the TPC-H results website. Annoyingly, this means we can’t compare this Minio run to other TPC-H results.

Minio fared better in the Spark test, which used Minio and then AWS S3 as the Spark back-end store. The test again used TPC-H, and the results were presented in more detail. This time Minio outperformed AWS S3 overall.

It claimed “both [Minio and AWS S3] offer a level of performance that was previously considered beyond the capabilities of object storage,” without giving examples of the previously inadequate object storage results.

Comment

Blocks & Files thinks that using an industry-standard benchmark intended for cross-vendor product comparisons, while not supporting cross-vendor product comparisons on the benchmark website, is a pain in the rear quarters area.

So too is the lack of quantitative comparisons with other object storage products. However…

Blocks & Files found a NetApp StorageGRID object storage test result. It used five nodes, each with 5 x 10TB SATA disk drives, and each node using 2 x 10GbitE links. There were three runs with differing sizes and numbers of objects (files) requested:

- 20,000 x 10KB files – 28.53 MB/sec,

- 2,000 x 10MB files – 749.8 MB/sec,

- 1,000 x 100MB files – 797.3 MB/sec.

This makes Minio’s S3 software, when used in the disk drive test above, look very fast indeed.
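A rough way to see how far apart the two are is to normalise by node count, and to turn the StorageGRID bandwidth figures into objects per second. This shows why small objects drag bandwidth down. The sketch below uses only the numbers quoted in this article and assumes decimal units (1 KB = 1,000 bytes, 1 MB = 1,000,000 bytes). The function names are ours. The hardware, drive counts and networks differ between the two tests and this article does not give Minio's object sizes, so treat the ratio as indicative only.

```python
# Rough normalisation of the quoted results. Assumes decimal units throughout.

def objects_per_sec(mb_per_sec, object_bytes):
    """Objects served per second at a given bandwidth and object size."""
    return mb_per_sec * 1_000_000 / object_bytes


def per_node_mb_per_sec(aggregate_mb_per_sec, nodes):
    """Average bandwidth per node, in MB/sec."""
    return aggregate_mb_per_sec / nodes


# StorageGRID runs as quoted: (object size in bytes, aggregate MB/sec)
STORAGEGRID_RUNS = [
    (10_000, 28.53),
    (10_000_000, 749.8),
    (100_000_000, 797.3),
]
STORAGEGRID_NODES = 5

# Minio HDD read result as quoted: 10.81 GB/sec across 16 nodes
MINIO_HDD_READ_MB_PER_SEC = 10_810.0
MINIO_HDD_NODES = 16

for size, bandwidth in STORAGEGRID_RUNS:
    rate = objects_per_sec(bandwidth, size)
    print(f"{size:>11,} byte objects: {bandwidth} MB/sec, {rate:,.1f} objects/sec")

storagegrid_best = per_node_mb_per_sec(STORAGEGRID_RUNS[-1][1], STORAGEGRID_NODES)
minio = per_node_mb_per_sec(MINIO_HDD_READ_MB_PER_SEC, MINIO_HDD_NODES)
print(f"StorageGRID best run: {storagegrid_best:.1f} MB/sec per node")
print(f"Minio HDD read: {minio:.1f} MB/sec per node "
      f"({minio / storagegrid_best:.1f}x)")
```

The small-object run handles by far the most objects per second yet delivers the least bandwidth, which is why a bandwidth-only comparison depends heavily on object size.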