The world of hyperconverged infrastructure (HCI) and converged infrastructure has become utterly confusing, with marketing droids driving hyperactive, uncontrolled growth in terminology.

For instance: HCI, Disaggregated HCI, Distributed HCI, Composable Infrastructure, Composable / Disaggregated Infrastructure, Converged Infrastructure, Open Convergence, Hybrid HCI, HCI 2.0, Hybrid Converged Infrastructure and Hybrid Cloud Infrastructure.

Let’s see if we can make sense of it all. But first, a brief discussion of life before converged infrastructure.

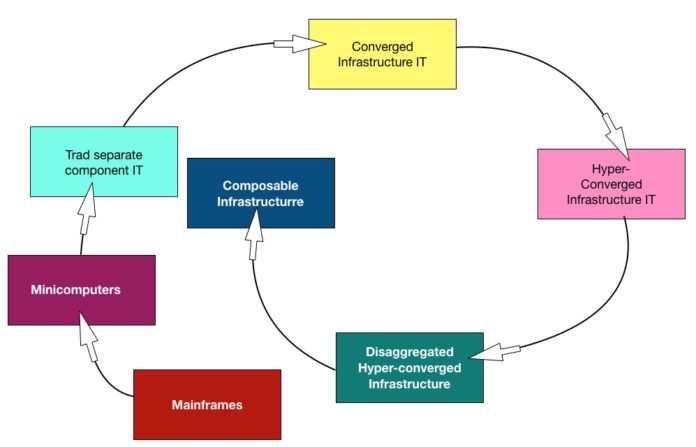

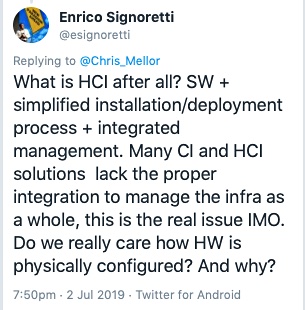

We can see a progression, starting with mainframes and passing through minicomputers, (decomposed) component-buying IT, converged infrastructure (CI), hyperconverged infrastructure (HCI), disaggregated HCI (dHCI) to composable systems.

It was the mainframe wot started it

In the 1950s IBM created mainframe systems, made from tightly integrated processors and memory (which became servers), network connecting devices, storage units and system software. These were complex and expensive.

Savvy engineers like Ken Olsen at Digital Equipment and other companies got to work in the 1960s and created minicomputers. These smaller and much cheaper versions of mainframes used newer semiconductor technology, tape and then disk storage, system software and, later, Ethernet networking.

IBM launched its first personal computer on August 12, 1981, following pioneering work in the 1970s by Altair, Apple, Commodore and Tandy. PCs used newer semiconductor technology, commodity disk drives, network connectivity and third-party system software such as CP/M and DOS.

In due course, the technology evolved into workstations and servers. These servers ran Windows or Unix and displaced minicomputers. The server market grew rampantly, pushing mainframes into a niche.

Three-tier architecture came along from the mid-90s onwards, with presentation, application and data tiers of computing. The enterprise purchase of systems became complex, involving racks filled with separately bought servers, system software, storage arrays and networking gear. It required the customer or, more likely, a services business to install and integrate this intricately connected set of components.

Customers buying direct from suppliers certainly did not enjoy having support contracts with each supplier and no one throat to choke when things went wrong.

Converged infrastructure

Cisco entered the server market in 2009 and teamed up with EMC to develop virtual blocks, racks of pre-configured and integrated server, system software, networking and external storage array (SAN) components. It was called a converged infrastructure approach.

EMC manufactured virtual blocks – branded Vblocks – through its VCE division. These were simpler to buy than traditional IT systems and simpler to install, operate, manage and support, with VCE acting as the support agency.

NetApp had no wish to start building this stuff but got in on the act with Cisco in late 2010. Together they developed the FlexPod CI reference architecture, to enable their respective channel partners to build and sell CI systems.

CI systems multiplied with application-specific configurations. Other suppliers soon produced reference architectures, for example, IBM with VersaStack and Pure Storage with FlashStack, both in 2014.

All these CI systems are rack-level designs, coming in industry-standard 42U racks.

Hyperconverged infrastructure

Around 2010 a new set of suppliers, such as Nutanix, SimpliVity and Springpath, saw that equivalent systems could be built from smaller IT building blocks, by combining server, virtualization system software and directly-attached storage into a single 2U or 4U rack enclosure or box, with no external shared storage array.

The system’s power could be scaled out by adding more of these pre-configured, easy-to-install boxes or nodes, and pooling each server’s directly-attached storage into a virtual SAN.

To distinguish them from CI systems these were called hyperconverged infrastructure (HCI) systems.

EMC got into the act in 2016 with VxRail, using VMware vSAN software. After Dell acquired EMC that year, Dell EMC became the dominant supplier, followed by Nutanix, which remains in strong second place. The chasing pack – or perhaps the also-rans – includes Cisco-Springpath, Maxta, Pivot3 and Scale Computing.

Hybrid or disaggregated HCI

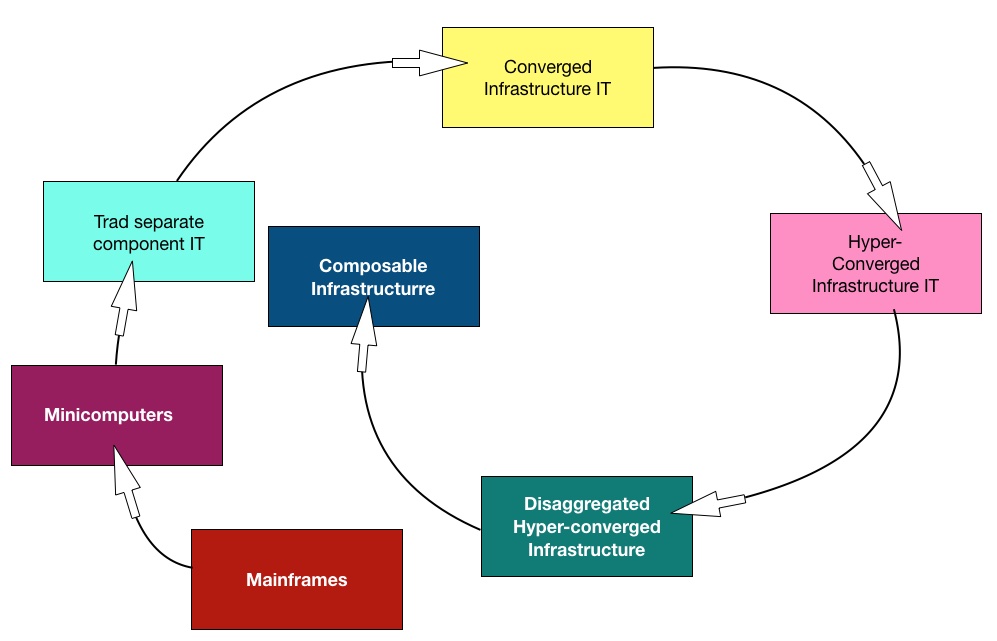

A feature of HCI is that the systems scale by adding pre-configured boxes combining compute, storage and networking connectivity. You add another box if there is too little compute or too little storage. This means that compute-intensive workloads could get too much storage and storage-intensive workloads could get too much compute. This wastes resources and strands unused storage or compute resource in the HCI system. It can’t be used anywhere else.
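The arithmetic behind stranded resources can be sketched in a few lines of Python. The node size (32 cores and 20 TB per box) and the workload figures are invented for illustration and are not taken from any supplier's specification.

```python
import math


def hci_nodes_needed(cores_needed, tb_needed, cores_per_node=32, tb_per_node=20):
    """Return (nodes, stranded_cores, stranded_tb) for a fixed-ratio HCI cluster.

    The node size is hypothetical. Every node brings both compute and
    storage, so the scarcer resource dictates how many boxes are bought.
    """
    nodes = max(
        math.ceil(cores_needed / cores_per_node),
        math.ceil(tb_needed / tb_per_node),
    )
    stranded_cores = nodes * cores_per_node - cores_needed
    stranded_tb = nodes * tb_per_node - tb_needed
    return nodes, stranded_cores, stranded_tb


# A storage-heavy workload: only 64 cores, but 400 TB of capacity.
print(hci_nodes_needed(64, 400))  # (20, 576, 0)

# A compute-heavy workload: 640 cores, but only 40 TB of capacity.
print(hci_nodes_needed(640, 40))  # (20, 0, 360)
```

In both cases 20 boxes are needed, and most of one resource sits idle inside the cluster.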

In response to the stranded resource problem, ways of separately scaling compute and storage within an HCI environment were developed around 2015-16. In effect the HCI virtual SAN was re-invented as a physical SAN, by using separate compute-optimised and storage-optimised nodes or by making the HCI nodes share access to a separate storage resource.
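As a rough sketch of what independent scaling buys, the Python below sizes compute nodes and storage nodes separately. The node sizes (32 cores per compute node, 20 TB per storage node) are hypothetical, and the model ignores the processors that real storage nodes also carry.

```python
import math


def dhci_nodes_needed(cores_needed, tb_needed,
                      cores_per_compute_node=32, tb_per_storage_node=20):
    """Return (compute_nodes, storage_nodes, spare_cores, spare_tb).

    Compute and storage are sized independently, so the only waste is the
    rounding up to a whole node of each type. Node sizes are assumptions.
    """
    compute_nodes = math.ceil(cores_needed / cores_per_compute_node)
    storage_nodes = math.ceil(tb_needed / tb_per_storage_node)
    spare_cores = compute_nodes * cores_per_compute_node - cores_needed
    spare_tb = storage_nodes * tb_per_storage_node - tb_needed
    return compute_nodes, storage_nodes, spare_cores, spare_tb


# A storage-heavy workload: 64 cores and 400 TB.
print(dhci_nodes_needed(64, 400))  # (2, 20, 0, 0)

# A workload that does not divide evenly: 70 cores and 410 TB.
print(dhci_nodes_needed(70, 410))  # (3, 21, 26, 10)
```

With a fixed-ratio box of the same size, the first workload would need 20 full nodes, or 640 cores, to reach 400 TB, leaving 576 cores unused.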

Datrium was a prime mover of this solution to the HCI stranded resource problem. Founded in 2012, the company brought product to market in 2016. It argued hyperconverged was over-converged, and said its system featured an open convergence design.

Near HCI

Fast forward to June 2017 and NetApp introduced its HCI product, called NetApp HCI. Servers in the HCI nodes accessed a SolidFire all-flash array, and the system could scale compute and storage independently.

And now, in 2019, HPE has introduced the Nimble dHCI, combining ProLiant servers with Nimble storage arrays. The ‘d’ in dHCI stands for disaggregated.

Logically these second-generation HCI systems, called disaggregated or distributed HCI, are less converged than HCI systems but more converged than CI systems. They are more complicated to order, buy and configure than gen 1 HCI systems but are claimed by their suppliers to offer a gen 1 HCI-like experience. A more accurate term for these products might be “near-HCI”.

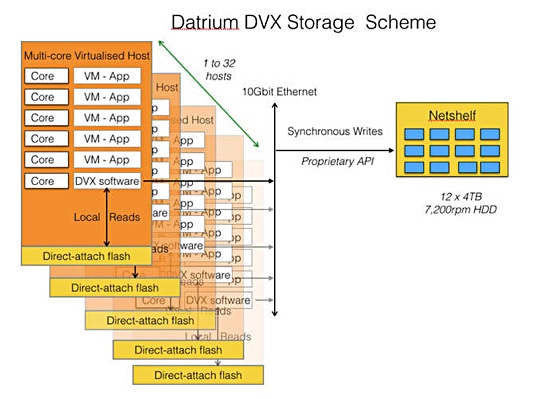

Enrico Signoretti, GigaOm consultant, said HCI is about how a system looks from the outside to users. In a July 2, 2019 tweet he wrote: “HCI is about infrastructure simplification, a box that gives you the entire stack. We can discuss about what’s in the box, but first of all you want it to look integrated from the outside.”

He developed this thought in a follow-up tweet: “What is HCI after all? SW + simplified installation/deployment process + integrated management. Many CI and HCI solutions lack the proper integration to manage the infra as a whole, this is the real issue IMO. Do we really care how HW is physically configured? And why?”

That may be so but it is best to avoid confusing and misleading IT product category terms.

Disaggregated HCI

Calling a disaggregated system hyperconverged is an oxymoron.

Trouble is, there is no agreed definition of HCI. Confusion reigns. For instance, NetApp, in a recent blog, stated: “One of the challenges of putting out an innovative product like NetApp HCI is that it differs from existing market definitions.”

The NetApp blogger, HCI product specialist Dean Steadman, said a new category has landed. “To that end, IDC has announced a new “disaggregated” subcategory of the HCI market in its most recent Worldwide Quarterly Converged Systems Tracker. IDC is expanding the definition of HCI to include a disaggregated category with products that allow customers to scale in a non-linear fashion, unlike traditional systems that require nodes running a hypervisor.”

Steadman wrote “NetApp HCI is a hybrid cloud infrastructure”, which means containers running in it can be moved to the public cloud and data stored in it can be moved to and from the public cloud.

As a term ‘dHCI’ is useful – to some extent. It separates less hyperconverged systems – Datrium, DataCore, HPE Nimble dHCI and NetApp HCI – from the original classic HCI systems – Dell EMC VxRail, Cisco HyperFlex, HPE SimpliVity, Maxta, Nutanix, Pivot3 and Scale Computing.

Where do we go from here?

CI, HCI and dHCI systems are static. Applications can use their physical resources but the systems cannot be dynamically partitioned and reconfigured to fit specific application workloads better.

This is the realm of composable systems, pioneered by HPE with Synergy in 2015, where a set of processors, memory, hardware accelerators, system software, storage-class memory, storage and networking is pooled into resource groups.

When an application workload is scheduled to run in a data centre the resources it needs are selected and composed into a dynamic system that suits the needs of that workload. There is no wasted storage, compute or network bandwidth.

The remaining resources in the pools can be used to dynamically compose virtual systems for other workloads. When workloads finish, the physical resources are returned to their pools for re-use in freshly-composed virtual systems.

The idea is that if you have a sufficiently large number of variable resource-need workloads, composable systems optimise your resources best with the least amount of wasted capacity in any one resource type.
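The compose-and-release cycle described above can be illustrated with a toy resource pool in Python. The `ResourcePool` class, its method names and all the capacity figures are hypothetical. Real composable products expose their own management APIs, which this sketch does not attempt to model.

```python
class ResourcePool:
    """Toy model of pooled resources that are composed into virtual systems."""

    def __init__(self, **capacity):
        # Free capacity per resource type, e.g. cores, memory_gb, storage_tb.
        self.free = dict(capacity)

    def compose(self, **request):
        """Carve a virtual system out of the pool, or fail without side effects."""
        for name, amount in request.items():
            if self.free.get(name, 0) < amount:
                raise ValueError(f"not enough {name} left in the pool")
        for name, amount in request.items():
            self.free[name] -= amount
        return dict(request)

    def release(self, system):
        """Return a finished workload's resources to the pool for re-use."""
        for name, amount in system.items():
            self.free[name] += amount


pool = ResourcePool(cores=128, memory_gb=1024, storage_tb=200)

# Two workloads with very different shapes share the same pool.
analytics = pool.compose(cores=96, memory_gb=256, storage_tb=10)
archive = pool.compose(cores=8, memory_gb=64, storage_tb=150)
print(pool.free)  # {'cores': 24, 'memory_gb': 704, 'storage_tb': 40}

# When the analytics job finishes, its resources go back for re-use.
pool.release(analytics)
print(pool.free)  # {'cores': 120, 'memory_gb': 960, 'storage_tb': 50}
```

Each workload takes only what it asks for, so nothing is stranded inside a fixed-size box.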

Suppliers such as HPE, Dell EMC (PowerEdge MX7000), DriveScale, Kaminario, Liqid and Western Digital are all developing composable systems.

Composability injections

Blocks & Files thinks CI, HCI, dHCI and composable systems will co-exist for some years. In due course composable systems will make inroads into the CI category, with CI systems becoming composable. HCI and dHCI systems could also get a composability implant. It’s just another way of virtualizing systems, after all.

Imagine a supplier punting a software-defined, composable HCI product. This seems to be a logical proposition and HPE has probably started along this road by making SimpliVity HCI a composable element in its Synergy composable scheme.

Get ready for a new acronym blizzard: CCI – Composable CI; HCCI – Hyper-Composable Converged Infrastructure or Hybrid Converged Composable Infrastructure; even Hybrid Ultra Marketed Block Uber Generated Systems – HUMBUGS.