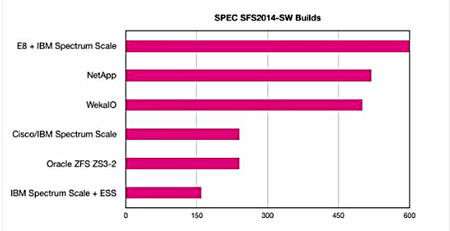

Opinion: The SPEC SFS 2014 benchmark shows which system vendors are fastest at a set of file system performance tasks, but it has no price/performance numbers. This means you either win the benchmark or you don’t, and there is no opportunity to show the relative value of your result.

The SPEC SFS 2014 benchmark resolves a shortcoming of its predecessor, which did not let you compare NFS and SMB/CIFS results. It is also an end-to-end system test rather than a component-level test: it measures end-to-end, system-level performance rather than disk-level performance.

There are five subtests:

- Number of simultaneous software builds that can be run (Software Builds)

- Number of video streams that can be captured (VDA)

- Number of simultaneous databases that can be sustained (Database)

- Number of virtual desktops that can be maintained (VDI)

- Electronic Design Automation workload (EDA)

So far, vendors have run several software build tests but have shied away from the others: VDA, Database, VDI and EDA. Effectively, this makes it an all-or-nothing software build test, where you aim to score the highest number of builds with the lowest overall response time (ORT).

There are subtests at varying software build levels, but the basic idea is still to complete as many builds as possible in the shortest possible time.

For example, in the chart above, the Oracle ZFS system could cost a quarter as much as the higher-scoring systems. If so, that would make it a serious price/performance contender, but the benchmark gives us no way of telling.

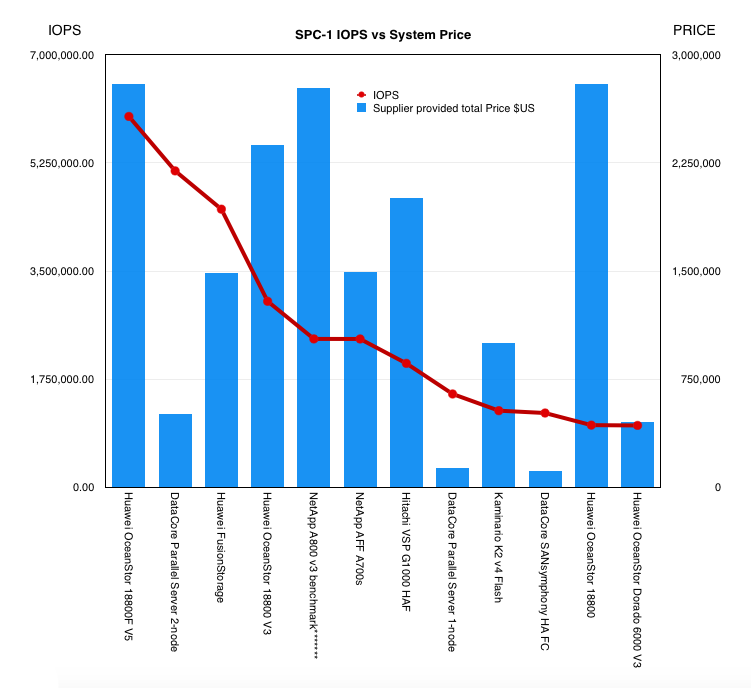

Compare this with the SPC-1 benchmark, which measures a storage array’s response to IO requests and includes a price/performance element.

That means you can win on outright SPC-1 IOPS, but you can also post the best result within a system price range, such as $1m to $2m. That gives smaller and mid-range suppliers valid reasons to run the SPC-1 benchmark. They need not fear getting trampled by a massive competitor with, say, a $4m+ array that throws SSDs and controllers at the IOPS challenge to batter it into submission.

To summarise, SPEC SFS 2014 is needlessly restricted to an all-or-nothing, high-score/low-ORT niche. Customers need a benchmark that can help them assess the whole gamut of suppliers, large and small.