NetApp and Hammerspace have recently begun talking about ‘storageless storage’. No, it’s not an oxymoron, but a concept that takes a leaf from the serverless compute playbook.

For NetApp, storageless, like serverless, is simply a way of abstracting storage infrastructure details to make the lives of app developers simpler. Hammerspace envisages storageless as a more significant concept.

In a soon-to-be-published blog post, Hammerspace founder and CEO David Flynn said: “Storage systems are the tail that wags the data centre dog.” He declares: “I firmly believe that the move away from storage-bound data to storageless data will be one of the largest watershed events the tech industry has ever witnessed.”

Ten top tenets of storageless data, according to Hammerspace

- It must be software-defined and not depend on proprietary hardware.

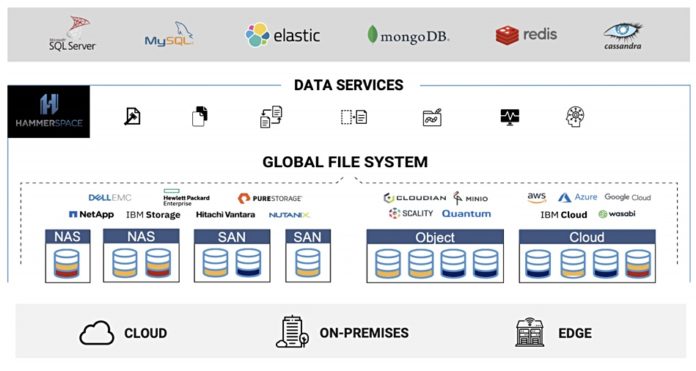

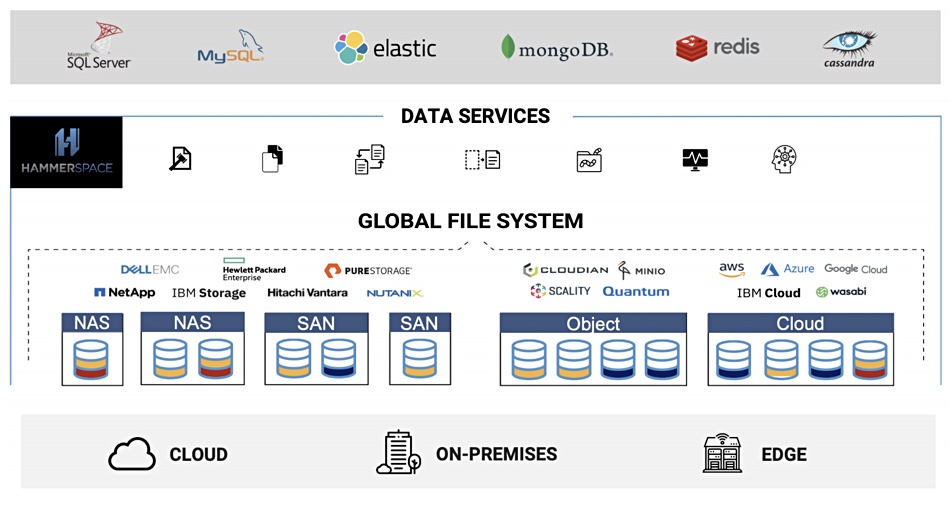

- It must be able to supply all forms of persistence: block, file, and cloud or object; read-only and read/write; single or shared access.

- It must be able to use all forms of storage infrastructure: block-, file-, or object-protocol-based; systems or services; on-premises, in the cloud, or at the edge.

- It must provide universal access across Kubernetes clusters, data centres, and clouds.

- It must have objective-based orchestration.

- It must possess a full suite of data services.

- It must deliver performance and scalability through a parallel architecture and direct data access, and be RDMA-capable.

- It must have intrinsic reliability and data protection.

- It must be easy to use, with instant assimilation of any networked filesystem or any existing persistent volume with files, zero copying of data, self-service, and automatic scale-up/down.

- It must be easy to stop using: data can be exported without any need to copy it.

Storageless data orchestration

Hammerspace claims it overcomes data gravity, or inertia, by untethering data from the storage infrastructure, providing dynamic and efficient hybrid cloud storage as a fully automated, consumption-based resource. Users orchestrate their own persistent data on a self-service basis, which enables workload portability from the cloud to the edge.

Flynn told Blocks & Files in a phone interview: “Storage is in charge while data is effectively inert. Data doesn’t even exist outside of the storage holding it. You don’t manage data, you manage storage systems and services while data passively inherits the traits (performance, reliability, etc.) of that storage; it doesn’t have its own. Essentially, data is captive to storage in every conceivable way.”

Flynn says data owners are forced to organise data around how it will be split up and placed onto and across different storage systems (infrastructure-centric), rather than around how they want to use it (data-centric). The result is data scattered across many silos, and hard to move.

According to Flynn, it seems impossible to get consistent, continuous access to data regardless of where it resides at any given moment, when it gets moved, or even while it is being moved, a process that can take days, weeks, or even months. He considers it absurd to manage data through the storage infrastructure that encloses it.

Storageless data means that users do not have to concern themselves with specific physical infrastructure components, he argues. Instead, an orchestration system maps and manages data onto and across the infrastructure automatically. This allows the infrastructure to be dispersed and diverse, and to scale without compounding complexity.

Kubernetes

In the serverless compute world, he says, Kubernetes is the orchestration system that places and manages individual containers on the server infrastructure. In the storageless data world, Hammerspace is the orchestration system that places and manages individual data objects on the storage infrastructure. Both do so according to requirements, or objectives, that users specify in metadata.
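
To make objective-based placement concrete, here is a minimal Python sketch. It is not Hammerspace's software or API: the tier names, the objective keys (`max_latency_ms`, `min_durability_nines`, `allowed_locations`) and the "cheapest tier that meets every objective" rule are all hypothetical assumptions for illustration. The point it shows is that the objectives travel with the data as metadata, and changing them changes where the data should live.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical model of objective-based placement; not Hammerspace's API.


@dataclass(frozen=True)
class StorageTier:
    """A storage target (array, cloud bucket, edge cache...) and its traits."""
    name: str
    location: str          # e.g. "on-prem", "aws", "edge"
    latency_ms: float      # typical access latency
    durability_nines: int  # e.g. 11 means 99.999999999%
    cost_per_gb: float     # monthly cost, arbitrary units


@dataclass
class DataObject:
    """A file or object whose placement objectives travel with it as metadata."""
    path: str
    size_gb: float
    objectives: dict = field(default_factory=dict)


def satisfies(tier: StorageTier, obj: DataObject) -> bool:
    """True if the tier meets every objective the object declares (missing key = no constraint)."""
    o = obj.objectives
    if "max_latency_ms" in o and tier.latency_ms > o["max_latency_ms"]:
        return False
    if "min_durability_nines" in o and tier.durability_nines < o["min_durability_nines"]:
        return False
    if "allowed_locations" in o and tier.location not in o["allowed_locations"]:
        return False
    return True


def place(obj: DataObject, tiers: List[StorageTier]) -> Optional[StorageTier]:
    """Pick the cheapest tier that satisfies all objectives, or None if none does."""
    candidates = [t for t in tiers if satisfies(t, obj)]
    return min(candidates, key=lambda t: t.cost_per_gb, default=None)


tiers = [
    StorageTier("nvme-array", "on-prem", 0.2, 9, 0.20),
    StorageTier("s3-standard", "aws", 20.0, 11, 0.023),
    StorageTier("edge-cache", "edge", 1.0, 5, 0.10),
]

hot = DataObject("/proj/render/frame0001.exr", 0.5,
                 {"max_latency_ms": 2, "min_durability_nines": 9})
archive = DataObject("/proj/archive/2019.tar", 120, {"min_durability_nines": 11})

print(place(hot, tiers).name)      # nvme-array
print(place(archive, tiers).name)  # s3-standard

# Relaxing an objective in the metadata is enough to change the target tier;
# the application keeps addressing the data by the same path.
hot.objectives["max_latency_ms"] = 50
print(place(hot, tiers).name)      # s3-standard
```

Choosing the cheapest qualifying tier is only one possible policy. A real orchestrator would also have to move the bytes and keep access uninterrupted while they move, which is the harder problem Flynn describes.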

Flynn notes that we are hitting the end of Moore’s Law and microprocessor performance is no longer growing at the rate it once did. So, he argues, the only way forward is to scale applications out across many servers.

In Flynn's view, serverless compute, made possible by Kubernetes, is the only way to manage the complexity that scaling out creates, but it suffers from a data problem. Flynn said: “Infrastructure-bound data is the antithesis of the scalability, agility, and control that Kubernetes offers. Storageless data is the answer to the Kubernetes data challenge.”

NetApp and Spot

NetApp was spotted using the term ‘storageless storage’ at its virtual Insight conference in October, in reference to Spot, the company’s containerised application deployment service.

NetApp said Spot brings serverless compute and storageless volumes together for high-performing applications at the lowest cost. Serverless compute means a cloud-native app is scheduled to run and the cloud service provider sorts out its server requirements when the app is deployed. The developer does not have to investigate, define, and deploy specific server instances, such as Amazon’s c6g.medium EC2 instances or others.

As Spot is both serverless and storageless, the developer does not need to worry about either the specific server instance or the storage details. The containerised app still needs a server instance and a storage volume provisioned and ready to use, but the developer can forget about them and concentrate on the app's code logic in what is, from this viewpoint, effectively a serverless and storageless world.

Anthony Lye, general manager for Public Cloud at NetApp, blogged in September: “To get a fully managed platform for cloud-native, you need serverless AND storageless.” [Lye’s emphasis.]

In a November blog post, Shaun Walsh, NetApp director for cloud product marketing, claimed: “NetApp has the first serverless and storageless solution for containers. Just as [Kubernetes] automates serverless resource allocation for CPU, GPU and memory, storageless volumes dynamically manage storage based on how the application is actually consuming them, not on pre-purchased units. This approach enables developers and operators to focus on SLOs without the need to think about storage classes, performance, capacity planning or maintenance of the underlying storage backends.”
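
The idea of sizing a volume by actual consumption rather than by pre-purchased units can be illustrated with a simple watermark policy. This is a minimal sketch, not NetApp's implementation, which the company does not describe here: the function name `resize_volume`, the 70 per cent target and the 40/85 per cent watermarks are hypothetical values chosen for illustration.

```python
import math

# Hypothetical consumption-based sizing policy; not NetApp's algorithm.


def resize_volume(capacity_gb: float, used_gb: float,
                  target_util: float = 0.7, high_water: float = 0.85,
                  low_water: float = 0.4, min_gb: float = 10.0,
                  step_gb: float = 10.0) -> float:
    """Return the capacity a volume should have, given what the app actually uses.

    Capacity is left alone while utilisation stays inside [low_water, high_water].
    Outside that band it is reset so that usage sits near target_util, rounded up
    to a multiple of step_gb and never below min_gb.
    """
    if capacity_gb <= 0:
        raise ValueError("capacity_gb must be positive")
    util = used_gb / capacity_gb
    if low_water <= util <= high_water:
        return capacity_gb  # inside the band: no change
    wanted = used_gb / target_util
    return max(min_gb, math.ceil(wanted / step_gb) * step_gb)


cap = 100.0
for used in [60, 90, 120, 50, 20]:  # simulated usage readings over time
    cap = resize_volume(cap, used)
    print(f"used={used} GB -> capacity={cap:g} GB")
```

With these arbitrary defaults the simulated readings give capacities of 100, 130, 180, 80 and 30 GB. The gap between the watermarks stops the volume from oscillating: after each resize, utilisation lands back near 70 per cent, well inside the band.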

According to NetApp, developers can thus run containers with self-managing, container-aware storage.