Intel today announced its Cascade Lake Advanced Performance (AP) Xeon processor with Optane memory support.

The high-end server chip will ship at the end of the year, with general availability in 2019. We expect Optane DIMM-enhanced application run-times to become popular in the first half of 2019.

Intel will reveal more about the Cascade Lake AP at SuperComputing 2018 this month (but do check out The Register’s short piece about this upcoming high-end processor). In the meantime we shall use this opportunity to take a peek at Cascade Lake AP’s Optane DIMM support.

Obtain Optane

Cascade Lake is an iteration of Intel’s 14nm Xeon CPU line, with an optimised cache hierarchy, security features, VNNI deep learning boost, and optimised frameworks and libraries.

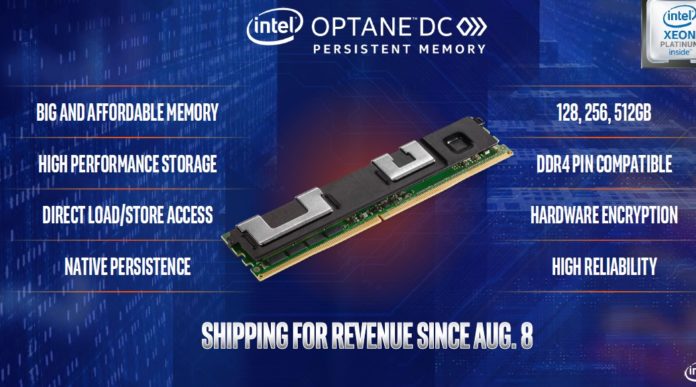

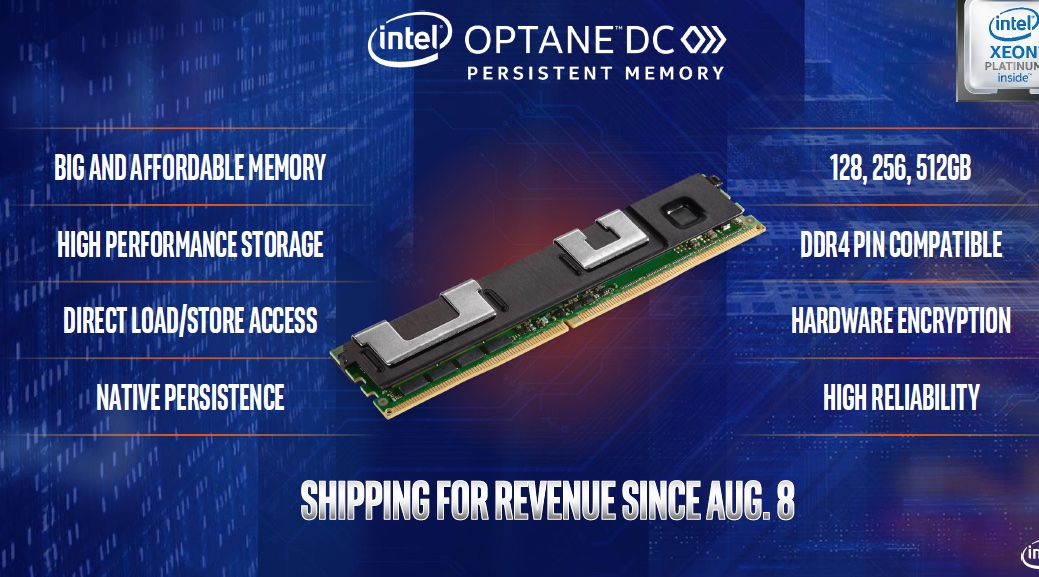

Optane is Intel’s 3D XPoint non-volatile, storage-class memory technology. We understand it is based on a form of phase-change memory.

Jointly developed by Intel and Micron, the technology is available only in Intel’s Optane-branded products. There are NVMe SSDs and also Optane DIMMs, which connect directly to the memory bus and offer faster access speeds than the Optane SSDs.

These DIMMs are accessed as memory, with load and store instructions instead of using a standard storage IO stack. Hence the faster access speed. They come with up to 512GB capacity and a server could have 3TB of Optane capacity with six such DIMMs.
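To make the difference between the two access styles concrete, here is a minimal sketch in Python. It is an analogy, not Optane-specific code: an ordinary temporary file stands in for a persistent memory region, and the function names are ours. One function updates the file through explicit file IO calls, the other maps it into the address space and changes bytes as if they were ordinary memory.

```python
import mmap
import os
import tempfile


def write_via_io_stack(path, offset, payload):
    """Storage-style access: explicit seek/write calls through the file API."""
    with open(path, "r+b") as f:
        f.seek(offset)
        f.write(payload)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the data to the device


def write_via_mapping(path, offset, payload):
    """Memory-style access: map the file, then assign bytes like an array."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as region:
            region[offset:offset + len(payload)] = payload
            region.flush()  # write the modified pages back to the file


def read_back(path, offset, length):
    """Read bytes back through the normal file API to check both writes."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


def demo():
    # Assumption: a 4KB temp file is a stand-in for a persistent memory region.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"\0" * 4096)
        path = tmp.name
    try:
        write_via_io_stack(path, 0, b"block-io")
        write_via_mapping(path, 16, b"load-store")
        return read_back(path, 0, 8), read_back(path, 16, 10)
    finally:
        os.remove(path)
```

Calling `demo()` returns the two byte strings that were written. On real persistent memory the mapped route would avoid the storage stack altogether; on a regular file, as here, the operating system's page cache still sits in between, so the sketch shows the programming model rather than the performance.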

Price points

Typically, applications running in a server use DRAM and external storage – SSDs and disk drives. DRAM is very fast, SSDs are medium fast and disks are slow. Optane is slower than DRAM but faster than NAND SSDs.

The more of an application’s working set (data + code) that sits in a faster medium, the faster it will execute. But faster can come with a hefty price tag.

DRAM is expensive and servers can only have so much. So, hypothetically, a server might provide 1TB DRAM and 15TB of SSD to an application which can then handle 100 web transactions/minute.

If it could all fit in DRAM it would handle, say, 2,000 transactions/minute.

If you provide 1TB DRAM, 2TB of Optane and the 15TB of SSDs it might run at 800 – 1,000 transactions/minute or more; much better than just the 100 transactions/minute with 1TB DRAM + 15TB SSDs.
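For readers who like to see the ratios, the hypothetical figures above work out as follows. This is a back-of-envelope sketch: the numbers are the illustrative ones from the text, not measurements, and the names are ours.

```python
# Hypothetical throughput figures from the text (transactions/minute).
BASELINE_DRAM_SSD = 100          # 1TB DRAM + 15TB SSD
ALL_DRAM = 2000                  # entire working set in DRAM
WITH_OPTANE_RANGE = (800, 1000)  # 1TB DRAM + 2TB Optane + 15TB SSD


def speedup(throughput, baseline=BASELINE_DRAM_SSD):
    """How many times faster than the DRAM + SSD baseline."""
    return throughput / baseline


def summarise():
    low, high = WITH_OPTANE_RANGE
    return {
        "optane_low": speedup(low),
        "optane_high": speedup(high),
        "all_dram": speedup(ALL_DRAM),
        # Fraction of the all-DRAM throughput reached with Optane added.
        "optane_share_of_all_dram": (low / ALL_DRAM, high / ALL_DRAM),
    }
```

On these made-up numbers, adding Optane gives an 8x to 10x improvement over the baseline and reaches 40 to 50 per cent of the all-DRAM figure.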

48-core

Optane DIMMs, however, require special support from the server processor – and that is delivered by the Cascade Lake AP CPUs.

The processor comes in a 1- or 2-socket multi-chip package. It incorporates a high-speed interconnect, up to 48 cores/CPU and support for 12 x DDR4 channels/CPU – more memory channels than any other CPU, according to Intel.

Intel’s Optane SSD 905P has <10 μs read and <11 μs write latency. The Optane DIMM operates down at the 7 μs latency level.

Servers with Optane-enhanced memory capacity can run larger in-memory applications or have larger working sets in memory. This enables the server to execute the application much faster than when using a typical DRAM and SSD combination.

Light the DIMMs

NetApp’s MAX Data uses Optane DIMM caches in servers to read and write data from/to an attached ONTAP flash array in single-digit microseconds. Think 5 microseconds, compared with the latency of an NVMe SSD.

A Micron 9100 NVMe SSD, for example, has a 120 microsecond read access latency and a 30 microsecond write access latency.
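Put side by side, the gap is easy to quantify. The sketch below uses only the latency figures quoted in this piece, treating the "think 5 microseconds" MAX Data number as a round approximation rather than a benchmark result; the names are ours.

```python
# Latency figures quoted in the text, in microseconds.
MAX_DATA_US = 5                                # approximate MAX Data access latency
MICRON_9100_US = {"read": 120, "write": 30}    # Micron 9100 NVMe SSD


def latency_ratio(slow_us, fast_us):
    """How many times longer the slower access takes."""
    return slow_us / fast_us


def compare():
    return {op: latency_ratio(us, MAX_DATA_US) for op, us in MICRON_9100_US.items()}
```

On these figures, `compare()` puts the NVMe SSD at 24 times the MAX Data latency for reads and 6 times for writes.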